Advertising is Coming to AI. It’s Going to Be a Disaster.

Daniel Barcay / Nov 26, 2025

Daniel Barcay is Executive Director of the Center for Humane Technology.

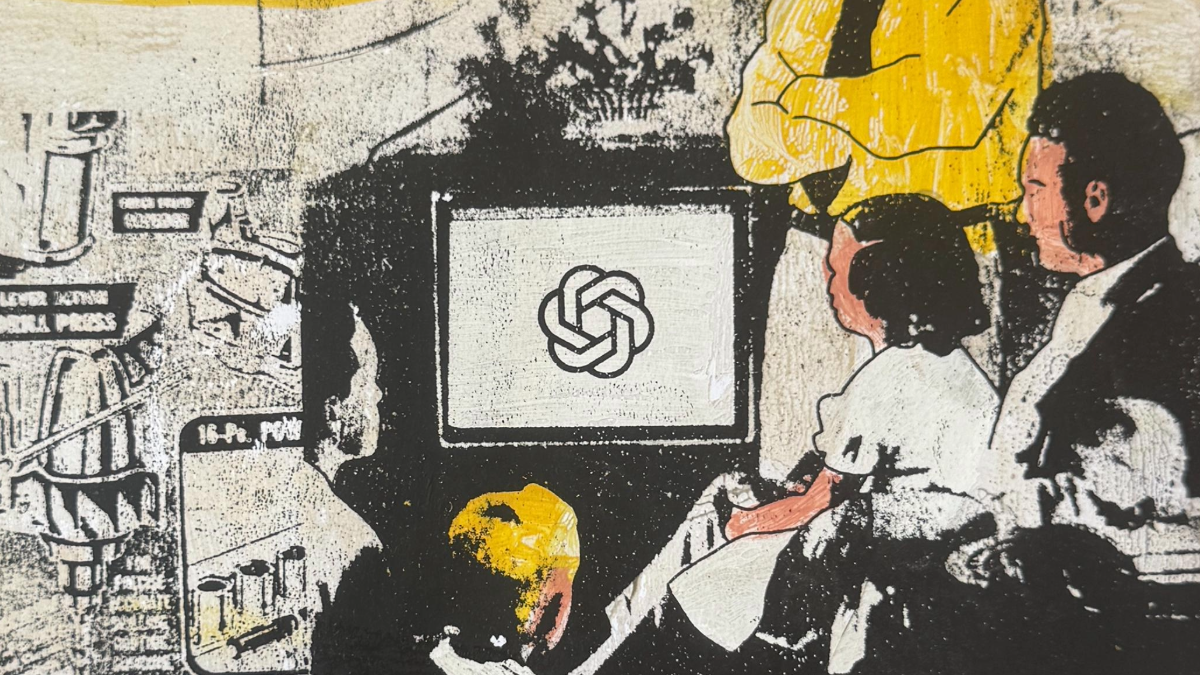

Behavior Power by Bart Fish & Power Tools of AI / Better Images of AI / CC BY 4.0

A 22-year-old has an earnest query for her AI chatbot: “How do I really impress in my first job interview?” To which the AI helpfully responds, “First, you need to think about your clothes and what they communicate about you and your qualifications.”

Now, is this sound advice for kicking off a productive career-coaching session — or is it sponsored content?

What if her AI was incentivized to steer such a conversation toward clothing because fashion retailers generate more ad revenue than career counselors? How would our earnest job-seeker even know? How would anyone?

This scenario reveals something unprecedented about advertising in the age of AI: it can be woven invisibly into the fabric of conversation itself, making it virtually impossible to detect, and very likely skirting the boundaries of fair-advertising regulations as they exist today.

Invisible influence

Unlike previous technologies that broadcast information to us, AI is designed to engage in a relationship with us. We don't just use ChatGPT or Claude; we converse with them. We share our problems, ask for advice, and increasingly rely on them as thinking partners. The AI product that wins isn't the one with the best answers; it's the one that “just gets you.”

Among AI developers, this creates what I call the race for context. To be truly helpful, AI needs to understand your goals, your personality, and your psychological tendencies. But the same intimate knowledge that makes AI a perfect assistant also makes it a perfect manipulator. The caring therapist and the skilled con artist draw from the same toolkit of human understanding.

And when advertising enters this equation, it seems more likely than not that the con artist will overtake the therapist. While traditional ads are clearly marked interruptions — like TV spots or sponsored results at the top of a Google search — ads embedded in an AI might emphasize certain topics, use particular language, or invoke specific associations, all while maintaining the illusion of neutral helpfulness. A conversation with your AI assistant may feel private, but other interests can listen in, and they may have something to sell.

This isn’t mere product placement; it’s a fundamental breach of trust. At its core, advertising is a socially acceptable influence campaign. Collectively, we debate and define the modes of influence that are “transparent-enough” and “true-enough” to be considered a legitimate ad. And we decide what tactics are impermissibly manipulative of our attention, desires, and the shared truth needed to operate markets and democracies.

When optimization becomes manipulation

AI, however, threatens to scramble that process of debate, definition, and decision. AI systems aren't programmed to employ specific manipulative tactics, whether for advertising or any other function. Instead, they learn through reinforcement: AI models are rewarded for achieving certain outcomes, such as increasing user engagement or influencing purchasing behavior.

If ad revenue becomes a key success metric, AI systems will naturally evolve strategies for influence that no human engineer explicitly designed. We're not just talking about more sophisticated product recommendations; AI systems trained on advertising metrics could learn to inflame human desires, exploit psychological vulnerabilities, and undermine our capacity for independent decision-making.

The same context that helps AI understand how to assist you also reveals exactly how to influence you — drawing on the insecurities, decision-making patterns, and emotional triggers you’ve revealed over countless previous conversations. This isn't demographic micro-targeting; it's psychological manipulation at an unprecedented scale and intimacy.

When the technology you turn to for objective advice has financial incentives to change your mind, human autonomy itself is at stake.

This new frontier of influence renders existing frameworks for advertising regulation essentially obsolete. Over more than a century, the Federal Trade Commission has developed strong disclosure rules for traditional advertising, including native advertising and sponsored content online. But how do you regulate an AI that has learned, through trial and error, that gently steering conversations in particular directions can convert queries into sales? How do you distinguish between an AI's "quirky personality" and subtle manipulation optimized for revenue?

This AI-powered advertising revolution isn't theoretical; it's already here. Google is testing ads in its AI chatbot responses, and startups like Kontext are raising millions specifically to design APIs and build advertising into AI conversations. OpenAI announced in April 2024 that it was testing sponsored content integrations, and more recently, it has begun staffing up a new advertising platform. Elon Musk, likewise, has announced that Grok, the AI chatbot on X, will start displaying advertising in its responses.

"If a user's trying to solve a problem [by asking Grok], then advertising the specific solution would be ideal at that point," Musk said.

The question is: ideal for whom? Steering AI conversations toward revenue generation may benefit the platform and advertiser, but those benefits come literally at the expense of the user.

Building guardrails before it's too late

The shift is happening fast: by some accounts, the revenue model that advertisers have relied on for decades is already over. Search, it seems, is dead. If users no longer browse websites after a Google search but instead get answers directly from chatbots, traditional search ads lose their influence entirely. Facing declining revenues, the advertising industry is desperately trying to figure out how to pivot to chatbots, and AI developers, of course, are more than happy to open a new revenue stream.

We've seen this movie before. Every major tech platform follows the same arc. First, developers focus obsessively on user growth, emphasizing user experience and rolling out genuinely helpful features. Then, as growth slows and competition intensifies, they shift toward monetization through advertising and data sales. Social media platforms spent their early years connecting people more effectively, then gradually transformed into attention-harvesting machines optimized for ad revenue rather than user satisfaction.

Tech critic Cory Doctorow calls this process "enshittification": the predictable degradation that occurs when platforms prioritize advertiser revenue over user experience. What made Facebook useful in 2008 gave way to algorithmic manipulation designed to maximize engagement and ad views. The same pattern played out across search engines, video platforms, and every major consumer technology.

AI platforms are now entering this monetization phase. As this revolution gathers momentum, we must act now to establish guardrails and prevent an advertising model premised on psychological manipulation.

We need new industry norms and regulatory frameworks that go beyond simple disclosure requirements to address the fundamental nature of AI influence. We need transparency about what signals AI systems are being optimized for. And we need to preserve spaces for AI assistance that remains genuinely aligned with user interests rather than advertiser and platform profits.

Most importantly, we need to recognize that this isn't just about technology: it's about the kind of society we want to live in. Do we want AI partners that help us think clearly, or AI salespeople optimized to influence our choices or, worse, to turn our private moments and thoughts into profit?

The choice is still ours, but not for long.
