Confronting the Threat of Deepfakes in Politics

Maggie Engler, Numa Dhamani / Nov 10, 2023

Numa Dhamani is an engineer and researcher working at the intersection of technology and society. Maggie Engler is an engineer and researcher currently working on safety for large language models at Inflection AI.

On July 17, 2023, Never Back Down, a political action committee (PAC) supporting Ron DeSantis, created an ad attacking former President Donald Trump. The ad accuses Trump of targeting Iowa Governor Kim Reynolds. However, a person familiar with the ad confirmed that the voice of Trump criticizing Reynolds was AI-generated, and the words themselves appear to be based on a post Trump wrote on Truth Social. The ad ran statewide in Iowa the following day, backed by an ad buy of at least $1 million.

This is not an isolated incident. Deepfakes have been circulating on the internet for several years, and the ways they are being, and will be, weaponized in politics are particularly concerning. In late September, days before a tight election in Slovakia, AI-generated audio recordings of politicians discussing election fraud were released. Deepfakes (AI-generated images, video, and audio) are certainly a threat to democratic processes worldwide, but how real a threat are they?

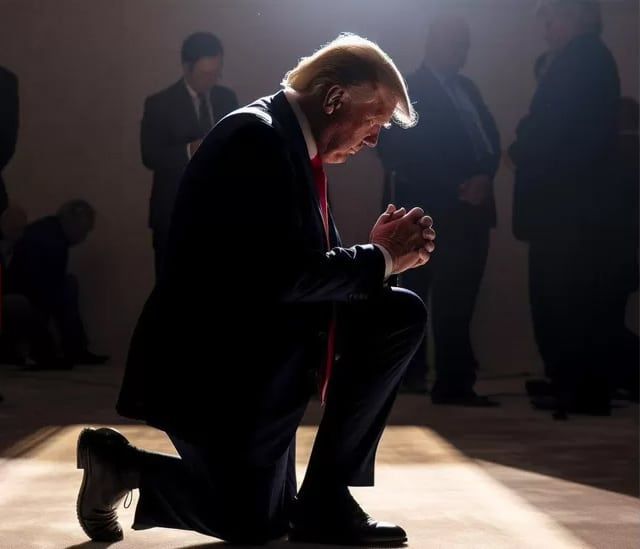

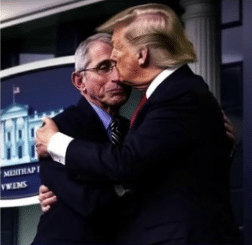

Next year, more than 2 billion voters will head to the polls in a record-breaking number of elections around the world, including in the United States, India, and the European Union. Deepfakes are already emerging in the lead-up to the 2024 U.S. presidential election. Examples range from a fake image of Trump kneeling in prayer, which he himself circulated on Truth Social, to a DeSantis campaign video showing Trump embracing Dr. Anthony Fauci, former Chief Medical Advisor to the President of the United States.

The most obvious concern with deepfakes is that they can be used to create audio or video recordings of candidates saying things they never said. But perhaps a bigger concern is that they erode the public's ability to tell whether a candidate's statement is authentic. During the 2016 U.S. presidential election, a 2005 Access Hollywood tape surfaced in which Trump said that he could grope women without their consent. Trump initially apologized, but later suggested that the tape was fake news. In a world where the public cannot tell whether audio or video of a candidate is real or fake, will individuals caught in a lie or scandal simply blame it on a deepfake?

Left: Former President Donald Trump posted an image of himself on Truth Social, but on closer inspection Trump's fingers are malformed, indicating that the image is AI-generated. Right: A DeSantis campaign video shows manipulated images of Donald Trump embracing Dr. Fauci from 0:24 to 0:30. (source)

Detecting Deepfakes

AI models that can generate new images and videos have existed for years, but within the last two years, the technology has become both much more realistic and much more accessible to the general public with the release of text-to-image tools like Stable Diffusion, Midjourney, and DALL-E.

Dr. Hany Farid, a professor at the University of California, Berkeley, and a digital forensics expert, maintains a webpage, Deepfakes in the 2024 Presidential Election, that catalogs a dozen or so known examples. Farid is one of the world's leading experts in deepfake detection and has been working on digital forensics for decades. In collaboration with Microsoft, he helped develop PhotoDNA, a hashing technique that has been used since 2009 to help detect and remove child sexual abuse material (CSAM), commonly referred to as child pornography.

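PhotoDNA creates a compact signature, or perceptual hash, of each image that can still match it after modifications such as resizing or recompression. PhotoDNA and similar solutions can be extraordinarily effective at flagging copies of known images, but they work by matching content against a database of images that have already been identified. They therefore fall short in today's world, where new synthetic images that appear in no such database can be generated in an instant.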
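
PhotoDNA itself is proprietary, so the Python sketch below substitutes a much simpler, publicly known perceptual hash, the "average hash," purely to illustrate the idea. It assumes grayscale images stored as lists of pixel rows whose dimensions divide evenly by 8. The function names and the Hamming-distance threshold of 5 are illustrative choices and are not PhotoDNA's. Note that matching only works against a list of hashes that already exists, which is why this approach cannot flag a brand-new synthetic image.

```python
from typing import List

Image = List[List[float]]  # grayscale pixel rows (illustrative representation)

def downscale(img: Image, size: int = 8) -> Image:
    """Average non-overlapping blocks so the image becomes size x size cells."""
    bh, bw = len(img) // size, len(img[0]) // size
    cells = []
    for i in range(size):
        row = []
        for j in range(size):
            block = [img[y][x]
                     for y in range(i * bh, (i + 1) * bh)
                     for x in range(j * bw, (j + 1) * bw)]
            row.append(sum(block) / len(block))
        cells.append(row)
    return cells

def average_hash(img: Image, size: int = 8) -> int:
    """One bit per cell: 1 if the cell is brighter than the mean cell, else 0."""
    flat = [v for row in downscale(img, size) for v in row]
    avg = sum(flat) / len(flat)
    bits = 0
    for v in flat:
        bits = (bits << 1) | int(v > avg)
    return bits

def hamming(a: int, b: int) -> int:
    """Number of differing bits between two hashes."""
    return bin(a ^ b).count("1")

def matches_known(img: Image, known: List[int], threshold: int = 5) -> bool:
    """True if the image is within `threshold` bits of any previously catalogued hash."""
    h = average_hash(img)
    return any(hamming(h, k) <= threshold for k in known)

# Demo with synthetic 64x64 "images"
original = [[2 * x + y for x in range(64)] for y in range(64)]
brightened = [[v + 10 for v in row] for row in original]  # modified copy of a known image
mirrored = [row[::-1] for row in original]                 # a genuinely different image
known_hashes = [average_hash(original)]
print(matches_known(brightened, known_hashes))  # True
print(matches_known(mirrored, known_hashes))    # False
```

Here a uniformly brightened copy produces an identical hash, while the mirrored image lands far away. A real system would tune the threshold on labeled data and use a far more robust hash.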

Farid and his colleagues, as well as others in the academic community, have dedicated significant time and effort to building models that can differentiate between "real" images and those created with AI. Image generation models have historically had quirks that serve as dead giveaways. For a long time, for example, AI was notably poor at rendering people's hands. Classifiers can pick up on these failure modes to estimate the probability that a given image is AI-generated.

“Humans shaking hands” generated by OpenAI’s DALL-E 2.

Unfortunately, deepfake detection is a classic adversarial problem: image generation models are constantly getting better, and each of their weaknesses is remedied over time. Training a deepfake detection model requires both real images and synthetic images from different image generation models. Suppose one early model generates images that look almost real but are a little fuzzy. A newer image generation model then comes along and generates images that also look almost real but have edges that are slightly too sharp. A detection model trained to identify synthetic images from the first model wouldn't necessarily transfer well to images from the second, because it has learned to look for images that are too fuzzy rather than too sharp.
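
The fuzzy-versus-sharp scenario can be reproduced with a toy Python sketch. Everything in it is hypothetical. Each image is reduced to a single made-up "edge sharpness" score, and the score distributions for real images and the two generators are invented. The detector is a simple nearest-centroid classifier, not a model used in practice.

```python
import random
from statistics import mean

def sample(rng, center, n=1000, spread=0.03):
    """Draw n hypothetical 'edge sharpness' scores around a center value."""
    return [rng.gauss(center, spread) for _ in range(n)]

class NearestCentroidDetector:
    """Flags a score as synthetic if it is closer to the synthetic training mean."""
    def fit(self, real, fake):
        self.real_c = mean(real)
        self.fake_c = mean(fake)
        return self

    def is_fake(self, score):
        return abs(score - self.fake_c) < abs(score - self.real_c)

def detection_rate(det, scores):
    """Fraction of scores the detector labels as synthetic."""
    return sum(det.is_fake(s) for s in scores) / len(scores)

rng = random.Random(0)
real = sample(rng, 0.5)     # invented scores for real photos
model_a = sample(rng, 0.3)  # hypothetical older generator: slightly too fuzzy
model_b = sample(rng, 0.7)  # hypothetical newer generator: slightly too sharp

# Train only on real images and the older generator's output
det = NearestCentroidDetector().fit(real, model_a)
print(f"flagged from model A: {detection_rate(det, model_a):.1%}")
print(f"flagged from model B: {detection_rate(det, model_b):.1%}")
print(f"real photos falsely flagged: {detection_rate(det, real):.1%}")
```

Trained only on real images and model A, the detector catches nearly all of model A's output but almost none of model B's, because model B's scores fall on the "real" side of the learned boundary. Retraining with model B samples would fix this case, until the next generator arrives.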

The same basic principles apply to other forms of media, such as audio and video. A 2023 paper from Farid's group at UC Berkeley describes a series of strategies for distinguishing real voices from AI-generated ones that rely on "statistical and semantic inconsistencies" to predict which voices are fake. Such techniques are known as "passive" because they analyze content after it has been generated, without any cooperation from the tool that produced it. However, they have a shorter shelf life as generation models improve.

This limitation is one of the reasons that many practitioners have turned their focus towards "active" techniques, which embed a watermark in synthetic content, or extract a fingerprint from it, at the point it is created. Digital fingerprints are hashes, like those used in PhotoDNA, that can be used to track a single piece of synthetic content across the web. Watermarking is a related statistical process that marks content as AI-generated at the time of synthesis by introducing amounts of noise that are typically imperceptible. Academics have also proposed other provenance-based methods to track the source of images and other media as they spread across the web. Such tools have high barriers to adoption because they require coordination among image creators as well as buy-in from the public.
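
To make the watermarking idea concrete, here is a toy spread-spectrum watermark in Python. It is one classical textbook approach, not the scheme used by any particular AI company. It assumes an image flattened into a list of 0 to 255 grayscale values. The secret key, the embedding strength of 2, and the detection threshold of 4 are illustrative assumptions. Unlike a production watermark, it makes no attempt to survive cropping, compression, or deliberate removal.

```python
import math
import random
from typing import List

def keyed_pattern(key: int, n: int) -> List[int]:
    """Pseudorandom +1/-1 sequence that only a holder of `key` can regenerate."""
    rng = random.Random(key)
    return [rng.choice((-1, 1)) for _ in range(n)]

def embed(pixels: List[int], key: int, strength: int = 2) -> List[int]:
    """Add the keyed pattern at low amplitude (hypothetical strength=2 of 255 levels)."""
    pattern = keyed_pattern(key, len(pixels))
    return [min(255, max(0, p + strength * s)) for p, s in zip(pixels, pattern)]

def detect(pixels: List[int], key: int) -> float:
    """Normalized correlation with the keyed pattern; roughly standard normal if unmarked."""
    n = len(pixels)
    pattern = keyed_pattern(key, n)
    mu = sum(pixels) / n
    sd = math.sqrt(sum((p - mu) ** 2 for p in pixels) / n)
    corr = sum((p - mu) * s for p, s in zip(pixels, pattern))
    return corr / (sd * math.sqrt(n))

THRESHOLD = 4.0  # hypothetical cutoff: scores above this are treated as watermarked

rng = random.Random(42)
image = [rng.randint(50, 200) for _ in range(256 * 256)]  # stand-in for a flattened image
marked = embed(image, key=1234)

for label, px, key in [("marked, right key", marked, 1234),
                       ("unmarked, right key", image, 1234),
                       ("marked, wrong key", marked, 9999)]:
    score = detect(px, key)
    print(f"{label}: score={score:.1f} watermarked={score > THRESHOLD}")
```

Because the pattern is pseudorandom and known only to the key holder, unmarked content or the wrong key yields a score near zero. Marked content scores far above the threshold even though no pixel changed by more than 2 levels.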

Regulating Deepfakes

Unfortunately, technical mitigations alone are moving too slowly to address the imminent threat of deepfakes in the political and electoral arenas. In addition, the First Amendment to the U.S. Constitution largely protects lies in political ads. It is therefore not illegal for candidates to simply lie in their paid ads, which makes deepfakes difficult to regulate. Many proposed laws would be hard to enforce or too narrow to cover many use cases. For example, California Governor Gavin Newsom signed the Anti-Deepfake Bill into law in 2019 in an attempt to ban political deepfakes, but it was ultimately ineffective because it required proof of intent. Proving that the person who created a deepfake intended to deceive is never straightforward and often nearly impossible. The law also applied only to deepfake content distributed within 60 days of an election, and only to individuals affected in the state of California.

While several states have attempted to regulate deepfakes, there is no federal legislation addressing the potential threats of deepfake technology in the United States. In July 2023, the White House secured voluntary commitments from seven leading technology companies (Amazon, Anthropic, Google, Inflection, Meta, Microsoft, and OpenAI). One of these commitments was to develop mechanisms "to ensure that users know when content is AI generated, such as a watermarking system." For example, these companies can embed digital watermarks in generated content so that when a deepfake is spotted in the wild, there is a reasonable chance of extracting the watermark and determining whether the content is real or fake. In October 2023, President Biden issued a landmark Executive Order directing the Department of Commerce to develop guidance for watermarking in order to clearly label AI-generated content. That guidance, however, has yet to be seen in practice.

On the other side of the Atlantic, the European Union AI Act is on track to become the world's first comprehensive law governing the development and use of AI technology. The EU AI Act has similar transparency requirements for generative AI systems: AI developers will be required to disclose that content is AI-generated through the use of watermarking or fingerprinting technology. However, the EU AI Act is not expected to come into force until the end of 2025 or early 2026.

In this context, it can seem like all hope is lost for countering deepfakes in politics, and for the 2024 election cycle, it very well may be. Deepfake detection after the point of creation is imperfect at best, and watermarking techniques have yet to be implemented at scale. While both passive and active techniques for deepfake detection can be successful in individual cases, they are not yet robust standalone solutions. However, there are a few concrete actions that regulators, civil society, and the public at large can take to prepare better for future election cycles.

Albeit somewhat belatedly, the United States government is taking the threat of deepfakes seriously. In September, the National Security Agency and several other federal agencies issued joint guidance on deepfakes. Although the government should not interfere with candidates' right to express themselves, it can and should place limits on the generation and dissemination of misleading deepfakes. California's state law against the distribution of "materially deceptive audio or visual media" of a candidate for elective office may serve as a model for this type of action. Nonprofit organizations have trained observers to spot deepfakes ahead of one of the biggest global election years in history, and such digital literacy efforts are of great importance. Encountering synthetic content on social media is now virtually certain, and we all share the burden of consuming and sharing content responsibly. Technical controls such as detection and watermarking, regulatory controls, and digital literacy efforts should all be viewed as components of a robust toolkit for protecting the democratic process from manipulation.

- - -

Dhamani and Engler co-authored Introduction to Generative AI, to be published by Manning Publications. Introduction to Generative AI illustrates how LLMs could live up to their potential while building awareness of the limitations of these models. It also discusses the broader economic, legal, and ethical issues that surround them, as well as recommendations for responsible development and use, and paths forward.
