Deplatforming Accounts After the January 6th Insurrection at the US Capitol Reduced Misinformation on Twitter

Gabby Miller, Justin Hendrix / Jun 5, 2024

A tweet by former President Donald Trump appears on screen during a hearing of the House Select Committee to Investigate the January 6th Attack on the US Capitol, in the Cannon House Office Building on Capitol Hill in Washington, DC on June 9, 2022. (Photo by BRENDAN SMIALOWSKI/AFP via Getty Images)

After the January 6, 2021 insurrection at the US Capitol, major social media platforms including Twitter, Facebook, and YouTube took significant action to remove content and sanction accounts spreading false claims about the 2020 election in order to prevent further political violence. In the days following the attack, these platforms suspended the account of former President Donald Trump and deplatformed tens of thousands of other accounts deemed to be in violation of their terms of service.

Prior research on deplatforming has yielded contradictory findings and indicated that its effects are complex. But there is evidence supporting the position that deplatforming accounts that violate platform policies can be effective in reducing various online harms, even if the individuals who are deplatformed flock to alternative platforms.

New research published in the science and technology journal Nature adds to this body of evidence. The paper, titled “Post-January 6th deplatforming reduced the reach of misinformation on Twitter,” finds that in the days following the January 6th insurrection, Twitter’s decision to quickly deplatform 70,000 accounts trafficking in misinformation measurably reduced the overall circulation of misinformation on the platform. It did so in several ways: by reducing the misinformation shared by the deplatformed accounts themselves, and with it the reshares by their followers, and by precipitating the voluntary exit from the platform of other users who regularly spread false claims, such as QAnon followers.

Asked about the paper’s results, Anika Collier Navaroli, a former Twitter senior policy official who testified before the US House Select Committee that investigated the January 6 attack on the Capitol, told Tech Policy Press that she was “not shocked” by the paper’s findings. “In many ways it’s graphs and data that repeat what many folks like myself have been saying since 2020,” she said. “Trust and Safety teams are mediating global political speech with huge consequences with a few policies and a couple of people.” Navaroli added it’s “honestly heartbreaking” that the meaningful but dangerous work trust and safety teams carry out has been “so significantly scaled back” ahead of the 2024 elections.

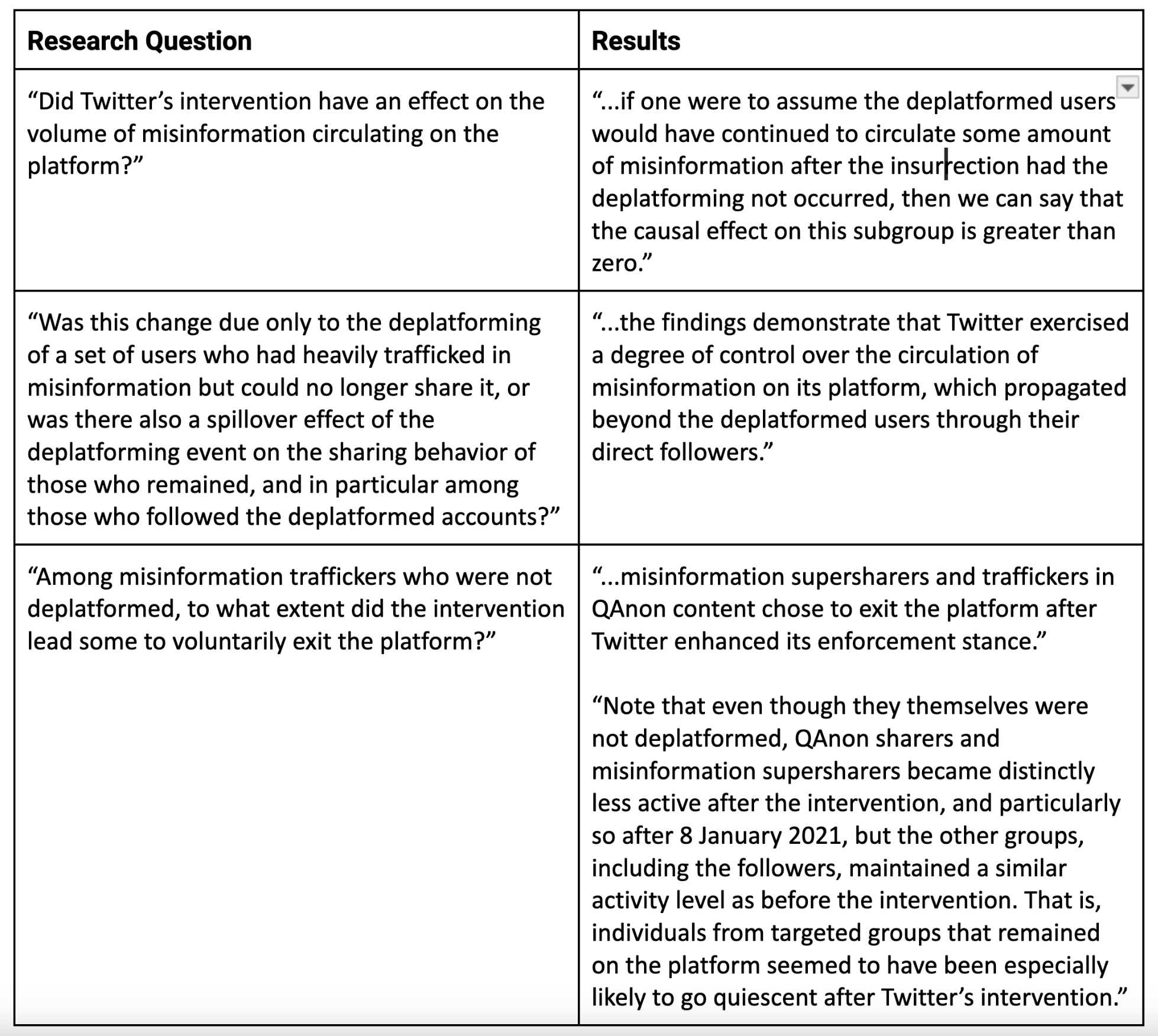

Deplatforming, as defined by the paper’s authors, is one of the primary ways social media platforms can moderate content. The researchers evaluated its effectiveness in reducing the spread of misinformation on Twitter by studying data on more than 500,000 Twitter users who were active during the election cycle between June 2020 and February 2021. The study centered on three questions: whether Twitter’s deplatforming interventions affected the volume of circulating misinformation; whether any change in volume was due only to the deplatformed users or whether there was a spillover effect onto remaining users with similar behaviors; and to what extent misinformation traffickers who weren’t deplatformed voluntarily exited Twitter.

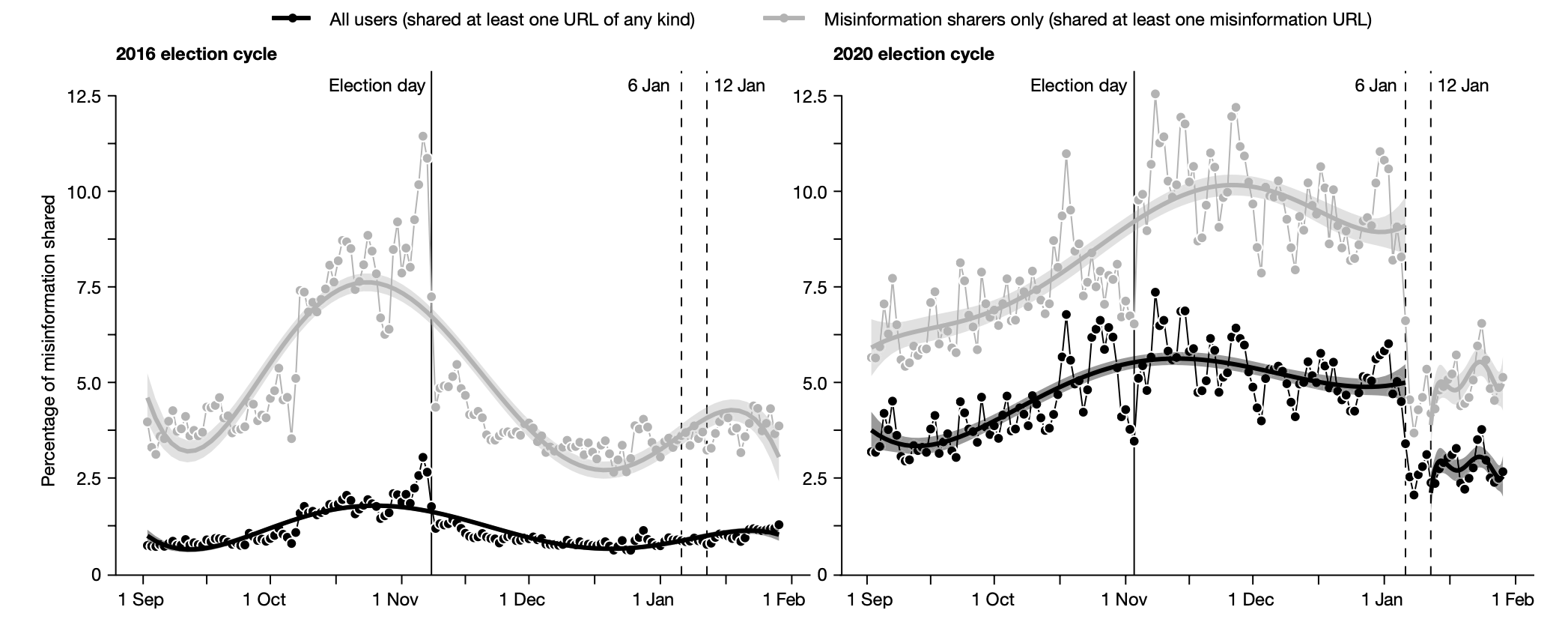

To answer these questions, the researchers compared misinformation sharing rates on Twitter using data from the 2016 and 2020 US election cycles. During the 2020 election, the rate of misinformation sharing among all Twitter users was five times higher than during the 2016 election, and about twice as high among misinformation sharers. Following the November 2020 election, and up until January 6th, rates of misinformation sharing remained “relatively high,” in direct contrast to 2016, when misinformation “declined immediately following Trump’s victory.” These results suggest “a marked change in the misinformation ecosystem on Twitter over time,” according to the paper.

A graph depicting misinformation sharing on Twitter during the 2016 and 2020 US election cycles, with a notable effect following the intervention after January 6, 2021. Source

Immediately after Twitter deplatformed misinformation sharers following January 6th, the daily percentage of tweets and retweets containing ‘misinformation URLs’ dropped sharply. This “significant reduction” in the spread of misinformation came as no surprise to the researchers, given that Twitter targeted the “worst offenders” of its civic integrity policy for deplatforming. According to the paper, the intervention reduced misinformation as a percentage of all content circulating on the platform. News reporting at the time revealed that the sanctioned accounts included prominent conservative activists and influential QAnon adherents associated with spreading election misinformation.

“This is a methodologically strong paper showing that banning misinformation spreaders on Twitter reduced the sharing of misinformation by other users who remained on the platform,” Manoel Ribeiro, a PhD candidate at Switzerland's École polytechnique fédérale de Lausanne (EPFL) who studies the impact of content moderation, wrote to Tech Policy Press. However, he noted, research examining this intervention on only one platform “paints an important yet incomplete picture of our information environment.” Other work has shown that users “consistently migrate to alternative platforms,” like Telegram or Gab, following deplatforming interventions, according to Ribeiro. “It may be that the reduction in misinformation sharing on Twitter is compensated by an increase in misinformation sharing in other platforms.”

The paper makes clear that the authors could not reliably identify the magnitude of the observed effects due to the complexity of the events surrounding the insurrection and the volume of news reporting on it, and that the results may not generalize to other platforms or events. But while Twitter had long “benefitted enormously from Trump’s volatile tweets,” which kept the platform “at the center of public discourse,” the authors write, the events at the US Capitol marked a moment of crisis for the company. “January 6th is a moment that highlights the relationship between real-world violence and misinformation, as well as the attempt at purposeful control over political speech on Twitter by its leadership,” the paper reads. In that moment of crisis, perhaps the bluntest of content moderation tools appears to have had the intended effect.

“This study confirms the responsibility platforms have to safeguard democracies against disinformation and political violence,” Daniel Kreiss, a professor at the Hussman School of Journalism and Media and a principal researcher at the Center for Information, Technology, and Public Life at UNC Chapel Hill, wrote to Tech Policy Press. “When democratic governments and parties fail, platforms should protect the legitimate and peaceful transfer – and holding – of power. As this study shows, their interventions have meaningful, pro-democratic effects on public life.”

This article was updated on June 6, 2024 to include comment from EPFL's Manoel Ribeiro.

Related Reading:

- The Final January 6th Report on the Role of Social Media

- Trump’s Reinstatement on Social Media Platforms and Coded Forms of Incitement

- Tech Platforms Must Do More to Avoid Contributing to Potential Political Violence

- Platforms are Abandoning U.S. Democracy

- Deplatforming Reduces Overall Attention to Online Figures, Says Longitudinal Study of 101 Influencers
