How Your Utility Bills Are Subsidizing Power-Hungry AI

Sasha Luccioni, Yacine Jernite / Aug 6, 2025

COLUMBUS, OHIO - JULY 24, 2025: The COL4 AI-ready data center is located on a seven-acre campus at the convergence point of long-haul fiber and regional carrier fiber networks. COL4 spans 256,000 square feet with 50 MW of power across three data halls. (Photo by Eli Hiller/For The Washington Post via Getty Images)

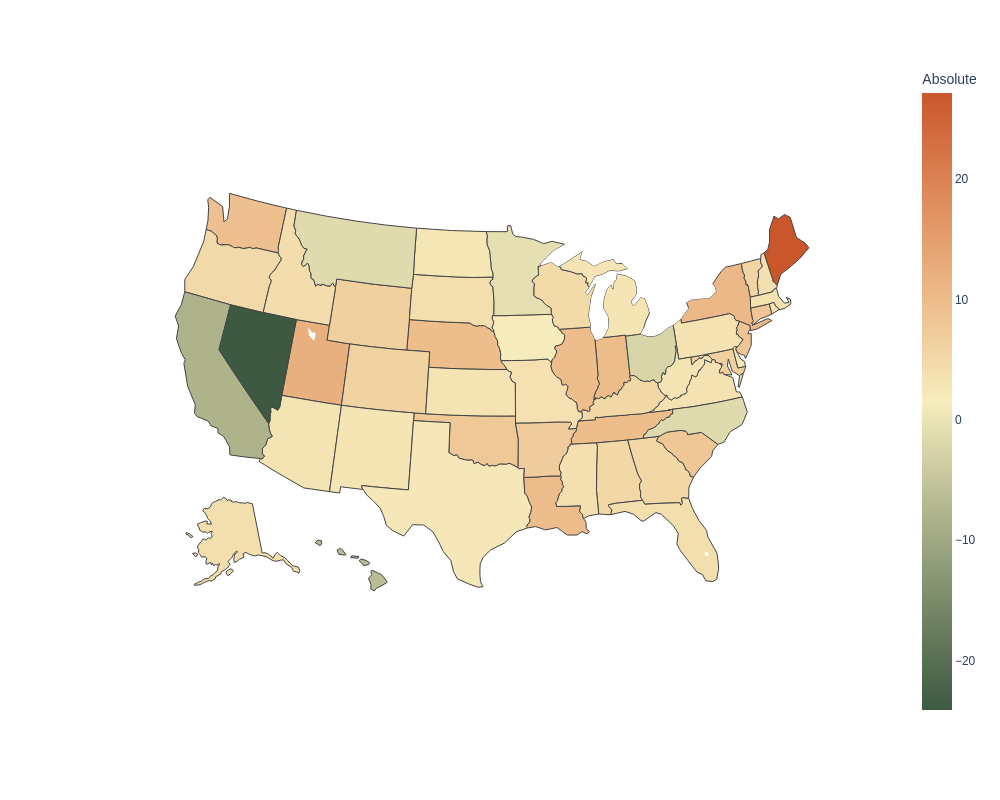

This summer, home electricity bills have been rising across the Eastern United States. From Pittsburgh to Ohio, people are paying $10 to $27 more per month for electricity. The reason? The rising cost of powering data centers that run AI. Providers of the largest and most compute-intensive AI models keep building them into more and more aspects of our digital lives, with little regard for efficiency and without giving users much of a choice. As they do, they claim a growing share of existing energy and natural resources, driving up costs for everyone else.

Source: EIA (2025)

AI data centers are expanding—but who’s paying for it?

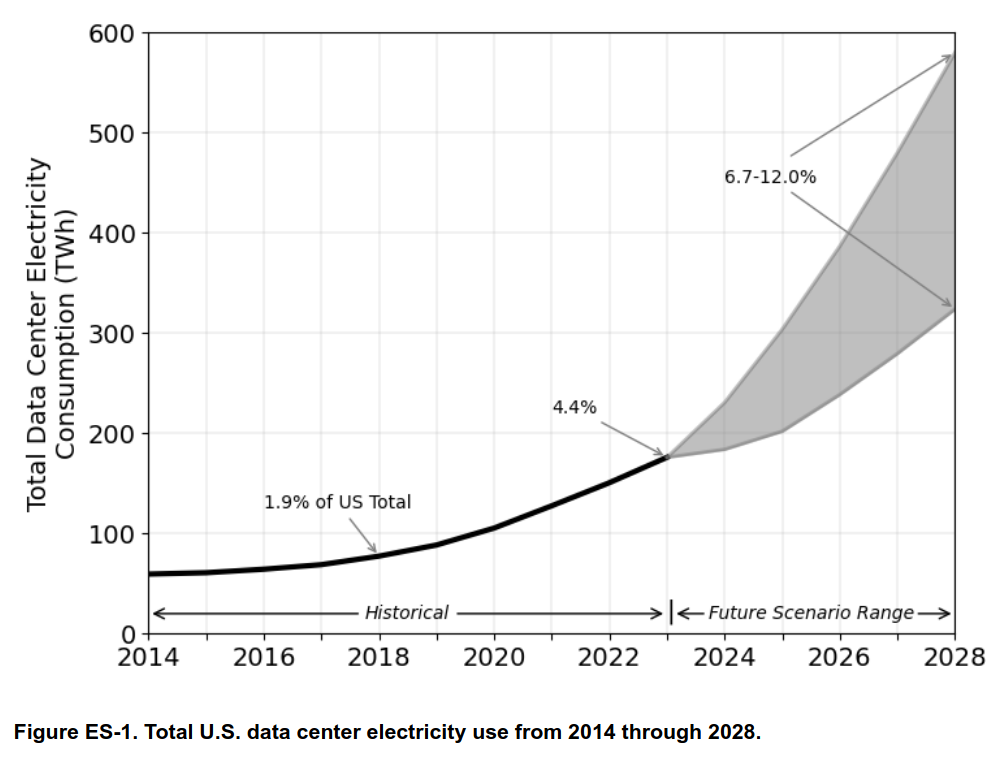

In particular, this means that average citizens living in states that host data centers bear the cost of these choices, even though they rarely reap any of the benefits themselves. This is because data centers serve the whole world via the Internet but use energy locally, where they are physically located. And unlike the apartments, offices, and other buildings connected to a traditional energy grid, AI data centers concentrate their energy use intensely: think of the demand of an entire metal smelting plant packed into a site the size of a small warehouse. For example, the state of Virginia is home to 35% of all known AI data centers worldwide, and together they use more than a quarter of the state’s electricity. And they are expanding fast. Over the last seven years, global energy use by data centers has grown 12% a year, and it is set to more than double by 2030, reaching as much electricity as the whole of Japan uses.

Source: Shehabi, A., Smith, S.J., Hubbard, A., Newkirk, A., Lei, N., Siddik, M.A.B., Holecek, B., Koomey, J., Masanet, E., Sartor, D. 2024. 2024 United States Data Center Energy Usage Report. Lawrence Berkeley National Laboratory, Berkeley, California. LBNL-2001637

The costs of this brash expansion of data centers for AI show up first and foremost in the energy bills of everyday consumers. In the United States, utility companies fund infrastructure projects by raising prices for their entire customer base, who often have no choice in who supplies their electricity. The revenue from these higher rates then pays to extend the grid, connecting new data centers to new and existing energy sources, and to build mechanisms that keep the grid balanced despite larger swings in supply and demand, particularly in places like Virginia that have a high concentration of data centers. On top of these amortized infrastructure costs, electricity prices also fluctuate with demand, so the cost of keeping your lights on or running your AC rises whenever data centers on the same grid are drawing heavily.

These costs also come with dire consequences for the stability of an energy infrastructure that is already stretched to the breaking point by rising temperatures and extreme weather. Last summer, a lightning storm caused a surge protector to fail near Fairfax, Virginia, which led 200 data centers to switch to local generators all at once, causing demand on the local grid to plummet. This nearly caused a grid-wide blackout and, for the first time, led federal regulators to recognize data centers as a new source of instability in power supplies, alongside natural disasters and accidents.

What do these data centers even do?

Needing more data centers in an increasingly digitized world is not surprising, nor is it necessarily a bad thing, as long as we keep track of our ability to sustainably meet the increased demands on common resources and have commonly agreed standards for what constitutes a reasonable cost-benefit trade-off. Unfortunately, most current AI development and deployment skips this latter part entirely. This is due in large part to providers’ general tendency to obscure the costs while overstating the scale and distribution of the benefits, which makes weighing the two particularly difficult.

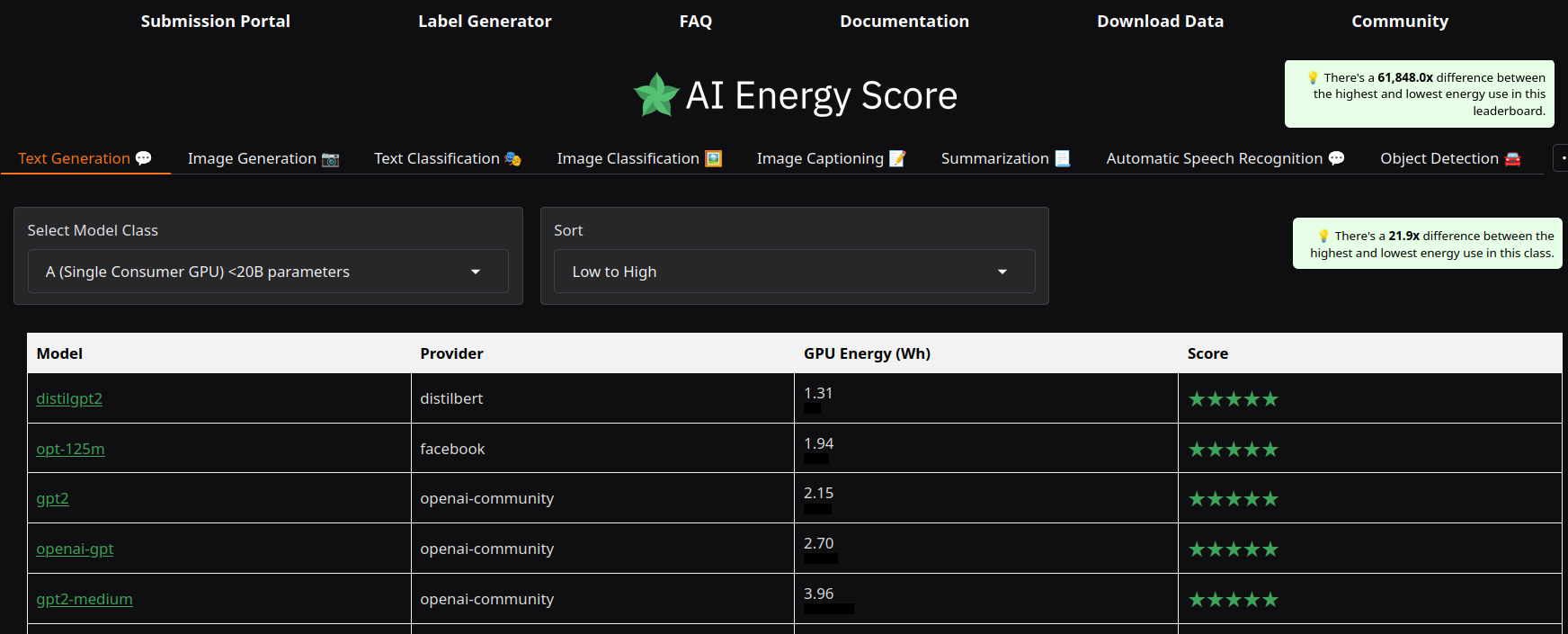

While we still have a long way to go in understanding how the different aspects of these costs fit together, we do have some meaningful pieces to start with. First, for a given use of AI, efficiency matters a great deal, often by several orders of magnitude: for tasks like image and video generation, specific development choices can make the same task up to 50 times more expensive. For applications built on large language models, we now have billion-parameter models (yes, these are the small ones) that perform similarly to their trillion-parameter counterparts on a growing range of tasks, especially with a little extra adaptation work before deployment in a particular use case, using techniques like knowledge distillation.

Sadly, the current state of AI does not reward efficiency. When a large company wants to deploy AI systems to billions of users, even occasionally deploying a slightly less powerful model can present a huge reputational risk in a competitive market. So it is simpler to deploy the most powerful and expensive model everywhere, even if that means spending 100 or 1,000 times more compute and energy.

And currently, every company wants to be the first to introduce more and more generative AI “capabilities” into new and existing digital products and services, and these use significantly more energy than previous generations that relied on simpler models and approaches. Work that we published last year found that, for tasks like extractive question answering, the energy difference between task-specific models and multi-purpose models can be up to 30-fold, which adds up quickly given the billions of people who use AI tools every day. And despite improvements in hardware and software efficiency, overall energy use is still rising.
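To make the “adds up” point concrete, here is a back-of-the-envelope sketch of how a per-query energy gap scales with usage. The per-query figure and daily query volume below are hypothetical round numbers chosen for illustration, not measurements from our study; only the 30-fold ratio comes from the text above.

```python
def daily_energy_kwh(wh_per_query: float, queries_per_day: float) -> float:
    """Total daily energy in kWh, given energy per query (Wh) and query volume."""
    return wh_per_query * queries_per_day / 1000.0


def extra_energy_kwh(task_specific_wh: float, ratio: float, queries_per_day: float) -> float:
    """Extra daily kWh from serving every query with a multi-purpose model that
    uses `ratio` times as much energy per query as a task-specific model."""
    multi_purpose_wh = task_specific_wh * ratio
    return (daily_energy_kwh(multi_purpose_wh, queries_per_day)
            - daily_energy_kwh(task_specific_wh, queries_per_day))


if __name__ == "__main__":
    # HYPOTHETICAL inputs: 0.1 Wh per query for the task-specific model and
    # one billion queries a day. The 30x ratio is the upper bound cited above.
    extra = extra_energy_kwh(task_specific_wh=0.1, ratio=30, queries_per_day=1e9)
    print(f"Extra energy: {extra:,.0f} kWh per day")
```

Under these assumed inputs, the gap works out to roughly 2.9 million kWh a day. The point is the structure of the calculation rather than the exact figure: a small per-query difference, multiplied by a ratio and by global usage, becomes a grid-scale quantity.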

How are US states and other countries handling this problem?

While recent decisions at the federal level in the US have been more aligned with a “full speed ahead” approach to data center infrastructure, states such as Ohio and Georgia are passing laws that would put the onus on data centers, not consumers, to pay the cost of new investments to expand the power grid. Countries such as the Netherlands and Ireland have gone a step further, putting moratoriums on the construction of new data centers in key regions until grid operators can stabilize existing grids and make sure that they don’t get overwhelmed.

Source: AI Energy Score Leaderboard

But we still need to rethink our relationship with multi-purpose, generative AI approaches. At a high level, we need to move away from treating AI as a centrally developed commodity, where developers must push adoption across the board to justify rising costs in a vicious cycle that leads to ever costlier technology. Instead, we should move toward AI that is developed in response to specific needs, using the right tool and the right model to solve real problems at reasonable cost. This would mean choosing smaller, task-specific models for tasks like question answering and classification; using and sharing open-source models so the community can build on and reuse them incrementally; and incentivizing developers to measure and disclose the energy consumption of their models, using approaches like AI Energy Score.

The next few years will be pivotal in determining the future of AI and its impact on energy grids worldwide. It is important to keep efficiency and transparency at the heart of the decisions we make. A future in which AI is more decentralized, efficient, and community-driven can ensure that we are not collectively paying the price for the profits of a few.
