Inside the Lobbying Frenzy Over California's AI Companion Bills

Cristiano Lima-Strong / Sep 23, 2025

This reporting was supported by a grant from the Tarbell Center for AI Journalism.

California State Assemblymember Rebecca Bauer-Kahan (D), who introduced AB1064, takes part in votes at the State Capitol in Sacramento on Sept. 12. (Photo by Rich Pedroncelli)

SACRAMENTO, Calif. — California lawmakers this month passed dueling measures aimed at improving the safety of artificial intelligence companions, setting up a major showdown over what may become the nation’s most significant protections as fears over the products’ risks rise.

Debate over the bills, AB1064 and SB243, sparked a lobbying blitz in Sacramento in recent months as groups jockeyed to shape what some hope will become model legislation for the United States, particularly given California’s outsize role in setting rules for Silicon Valley.

That battle is now shifting to the governor’s mansion, where California Gov. Gavin Newsom (D) faces yet another high-profile test over AI regulation after siding with tech companies last year in vetoing the state’s most sweeping AI safety bill to date, SB1047.

Children’s safety advocates and critics of the tech giants have largely rallied around AB1064, the Leading Ethical AI Development (LEAD) for Kids Act. The bill would ban companies from offering AI companions to children unless they are “not foreseeably capable” of engaging in potentially harmful conduct, such as encouraging self-harm or engaging in sexual exchanges.

After initially opposing both measures, several tech industry groups have come to back SB243 as an alternative to AB1064, particularly after securing significant concessions that dialed back some of the Senate measure’s more stringent requirements. SB243 would require companies to disclose to users when they are interacting with an AI companion, while creating added safeguards for minors.

Safety concerns about AI companions have ballooned in the wake of explosive reports and lawsuits alleging that AI companions and chatbots developed by OpenAI, Meta and others have helped young users take their own lives or engage in sexual role play.

Assemblymember Rebecca Bauer-Kahan (D), who introduced AB1064, said the outcry over those cases helped propel her legislation through the chamber on a bipartisan basis.

“It hits that issue straight on because what the bill does is it basically requires that if we're going to release companion chatbots to children, [that] they are safe by design, that they do not do the most harmful things,” she told Tech Policy Press in an interview.

The bill has faced steady opposition from business and tech industry groups, including the California Chamber of Commerce and tech trade associations such as TechNet, CCIA and the Chamber of Progress, which together represent many of Silicon Valley’s biggest firms. The groups argue that the bill would chill innovation and implicate many AI tools far beyond its intended scope.

Aodhan Downey, a state policy manager for CCIA, said in an interview that the measure’s “subjective” standards, including a restriction against AI companions “prioritizing validation of the user’s beliefs” over accuracy, could open companies up to a wave of litigation.

In an op-ed for the regional Times of San Diego that was circulated in the halls of the California legislature in the weeks leading up to the recent votes, Chamber of Progress CEO Adam Kovacevich argued that AB1064’s description of AI companions was far too broad and could have a chilling effect on the development of educational tools for children.

“You’d be hard-pressed to find an AI tool that doesn’t fit that definition,” wrote Kovacevich, whose group is funded by companies including Google, Apple and Amazon.

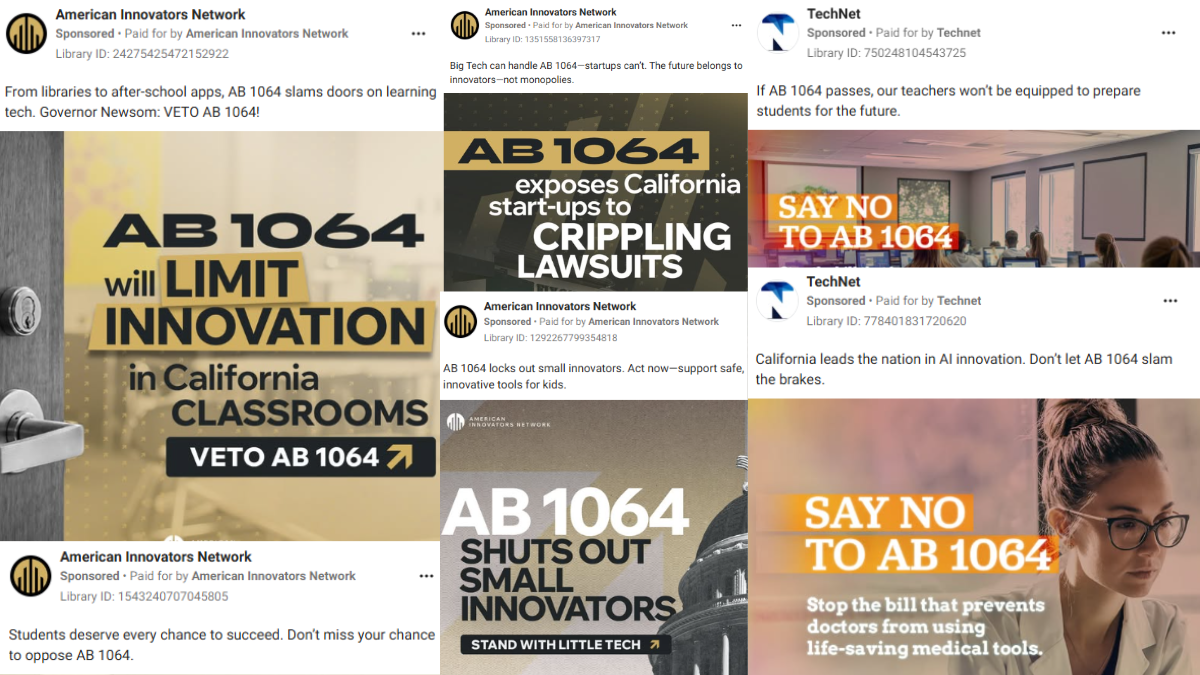

That argument featured prominently in ads against the bill from the American Innovators Network, a new coalition of AI startups and entrepreneurs spearheaded by venture capital firm Andreessen Horowitz and tech incubator Y Combinator.

A collage of ads taken out on Facebook and Instagram by the American Innovators Network and TechNet criticizing California's AB1064, including messages warning about its potential impact on students and teachers. (Screen grabs by Tech Policy Press of Meta's ad library.)

Over the past three months, the group has spent over $50,000 on more than 90 digital ads targeting California politics, according to a review of Meta’s political ads library.

Over two dozen of the ads specifically targeted AB1064, which the group said would “hurt classrooms” and block “the tools students and teachers need.” Several others more broadly warned against AI “red tape,” urging state lawmakers to “stand with Little Tech” and “innovators,” while dozens more took aim at another one of Bauer-Kahan’s AI bills.

TechNet has spent roughly $10,000 on over a dozen digital ads in California expressly opposing AB1064, with messages warning that it would “slam the brakes” on innovation and that if passed, “our teachers won’t be equipped to prepare students for the future.”

The Chamber of Progress and TechNet each registered nearly $200,000 in lobbying of the California legislature in the first half of this year, while CCIA spent $60,000 and the American Innovators Network doled out $40,000, according to a review of state disclosure filings. Each group was active on both SB243 and AB1064, among numerous other tech and AI bills.

Bauer-Kahan said the lobbying pressure from industry has been intense. “I've never had an entity hire new lobbyists to come on at the last minute to try to fight me,” she said.

Lobbying disclosures for the third quarter, when activity was likely at its peak, are not yet public.

The state lawmaker said she is hopeful that Newsom, who now has until Oct. 12 to decide whether to sign or veto the bill, will side with her and children’s safety advocates.

“The governor is first and foremost a dad, and I think his children are experiencing the same thing my children are experiencing with technology, so I am very optimistic he will lead from a place of caring for California's children,” said Bauer-Kahan.

She may have an ally in the governor’s mansion. Newsom's wife, First Partner Jennifer Siebel Newsom, spoke alongside Bauer-Kahan last month on a panel hosted by Common Sense Media and Tech Oversight California.

At the event, which was held to rally support for child online safety measures, Siebel Newsom said that regulation in the space was “essential,” because, “otherwise we’re going to lose more kids,” according to The Sacramento Bee.

While advocacy groups including Common Sense and Tech Oversight California initially supported SB243, the other contender vying for Gov. Gavin Newsom’s signature, several withdrew their support in recent weeks after the measure’s protections were pared back.

The latest iteration, which cleared both chambers in California on a bipartisan basis, would require companies to give users a “clear and conspicuous notification” when they are interacting with AI companions if there is reason to believe they could be misled.

It would also require that companies maintain a “protocol for preventing the production of suicidal ideation, suicide, or self-harm content to the user,” such as directing them to crisis services or suicide hotlines, and that they take “reasonable measures” to prevent AI companions from directing minors to engage in sexual conduct.

“Fundamentally, we want something that provides real protections, something that's operational and something that can be in place this January,” State Sen. Steve Padilla (D), who introduced the bill, told Tech Policy Press.

California State Sen. Steve Padilla (D), left, who introduced SB243, speaks to a colleague during votes at the State Capitol in Sacramento on Sept. 12. (Photo by Rich Pedroncelli)

The Tech Oversight Project, the parent organization for Tech Oversight California, revoked its support for the bill just days before it hit the floor for final passage, as did Common Sense Media and the California branch of the American Academy of Pediatrics (AAP-CA), according to internal letters circulated in the California legislature and obtained by Tech Policy Press.

The Tech Oversight Project, which receives funding from philanthropic organizations including the Omidyar Network, said recent amendments “set the lowest possible bar” for companies to prevent harm to minors, introduced “industry-friendly exemptions” for products like video games and smart speakers, delayed implementation requirements and scrapped third-party audits. (The Omidyar Network provides grant funding to Tech Policy Press.)

AAP-CA, meanwhile, wrote to lawmakers that it was “extremely concerned” that the bill was so weakened that it could “mislead parents and users into believing they are safeguarded when they are not,” and that it could “establish a harmful precedent for other states.”

Unless lawmakers restored key protections including the third-party audits, the group wrote, “SB243 risks becoming an empty gesture rather than meaningful policy.”

Julie Scelfo, founder and executive director of the advocacy group Mothers Against Media Addiction (MAMA), said in an emailed statement that the group also no longer supports SB243 because of “disappointing and typical dirty tricks” by tech groups to dilute the bill.

In the first half of this year, Common Sense registered over $94,000 in lobbying on AB1064 and other bills, while Tech Oversight California spent $1,500, including on AB1064 and SB243.

Industry groups have praised Padilla for working with them to tighten the bill’s language and focus more on AI disclosures and mandatory reporting when users engage in suicidal ideation. In his op-ed, Kovacevich called the measure “practical, targeted, and workable.”

CCIA’s Downey said the bill has landed on “more of a reasonable and tailored approach” than AB1064.

“I, of course, would want the strongest possible protections we could have, but at the end of the day, we live in a democracy, we have a process,” said Padilla. “You need a legislature to move the bill, you need the governor to sign it in order to have any protections at all.”

While some tech industry groups have embraced SB243 over AB1064, some continue to openly oppose both. Robert Boykin, TechNet's executive director for California and the Southwest, said in a statement that AB1064 “imposes vague and unworkable restrictions,” and while SB243 “establishes clearer rules,” the group continues to have “concerns with its approach.”

In letters sent to Newsom over the last two weeks, obtained by Tech Policy Press, the American Innovators Network asked him to veto both measures, saying AB1064 would “establish sweeping liability that will punish responsible innovators” and that SB243 “opens the door to speculative lawsuits” that smaller tech companies would find difficult to absorb, chilling investments in California.

Newsom has not publicly taken a stance on either measure, but recent amendments to SB243 were made in consultation with his office, according to two people briefed on the matter, who spoke on condition of anonymity to discuss the private deliberations.

“We have been working very collaboratively with the governor's office. Obviously, that's an important party to this piece, to actually get a bill and to get protections in place,” Padilla said.

Newsom’s office declined to comment on AB1064 and SB243. The governor has typically remained mum until he issues a final decision on pending legislation.

California state senators participate in votes as their legislative session winds to a close at the State Capitol in Sacramento on Sept. 12. (Photo by Rich Pedroncelli)

While the two bills take distinct approaches on AI companions, Newsom could opt to sign both into law, a prospect both Padilla and Bauer-Kahan welcomed.

Padilla’s bill includes protections for minors, but it largely focuses on the broader use of AI companions by all consumers, while Bauer-Kahan’s focuses expressly on those under 18.

“There's no reason why these two pieces of legislation can't operate in harmony,” said Padilla.

“Children deserve stronger protections, but everyone deserves some level of protection,” said Bauer-Kahan.

Both lawmakers supported each other’s bills when they reached their respective chambers.

But with industry groups and consumer advocates largely taking opposing stances on the two bills, Newsom is already facing pressure to pick a side.

“It's very disappointing what happened to 243, but the choice for Governor Newsom is clear,” said Common Sense Media founder and CEO Jim Steyer. “We hope and expect that Governor Newsom will sign 1064, which is the bill that matters.”

California would not be the first state to enact new laws on AI companions.

In May, New York Gov. Kathy Hochul (D) signed into law a measure requiring that AI companions deploy a safety protocol when users discuss suicide, such as directing them to crisis services, and notify users when they are interacting with AI, much as SB243 would. The measure passed as part of the state budget and drew less fanfare than California’s bills. Children's safety advocates have argued it would be more protective than California’s SB243.

In Washington, federal lawmakers have also signaled a desire to legislate on the issue in the wake of recent reporting on AI harms, including during a Senate hearing last week with the parents of children whose deaths were linked to exchanges with AI chatbots.

Enforcers at the Federal Trade Commission have also taken up the issue, launching an inquiry into AI companions earlier this month and ordering seven major tech companies including OpenAI, Meta and Google to fork over information about their safety practices.

Given Congress’s glacial pace on tech legislation and California’s track record of racing past other states in setting standards for the Silicon Valley giants that it is home to, many advocates see the ongoing battle over AI companion legislation in the state as pivotal.

“California essentially leads the nation and quite frankly is the national standard, and when the law passes in California, it becomes the law of the United States,” said Steyer.

Dozens of advocacy groups including Steyer’s Common Sense penned a letter to Newsom on Thursday urging him to sign AB1064, writing, “While Congress is beginning to explore this issue, the children of California cannot afford to wait any longer for protection.”

While many of those at the negotiating table have disagreed on the exact path forward, the lawmakers leading the push echoed that sense of urgency.

Padilla said lawmakers “missed a window” to set protections for social media when it first became widespread, and they cannot repeat that mistake now with AI companions.

“I would say that this particular technology is even more important, it's even more impactful, I think not since the industrial revolution, so we have to move, we have to act,” Padilla said.
