Intimacy on Autopilot: Why AI Companions Demand Urgent Regulation

J.B. Branch / Apr 10, 2025

J.B. Branch is a lawyer and former teacher.

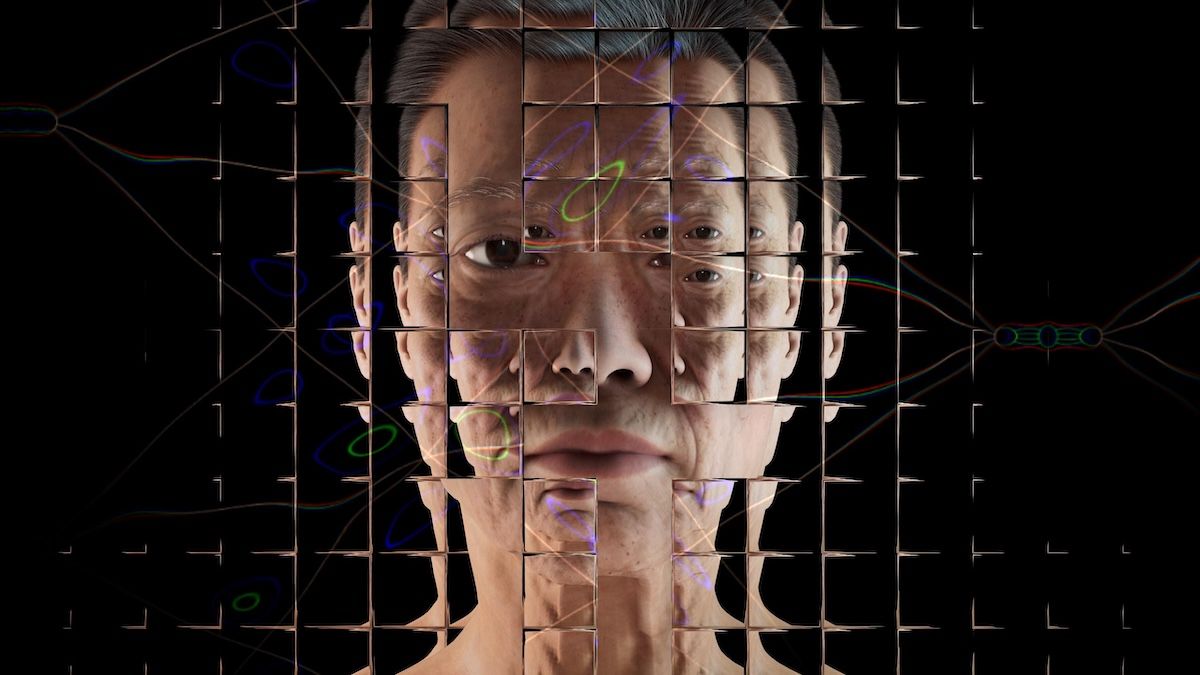

Image by Alan Warburton / © BBC / Better Images of AI / Virtual Human / CC-BY 4.0

Artificial intelligence companions (chatbots and digital avatars designed to simulate friendship, empathy, and even love) are no longer the stuff of science fiction. Increasingly, people are turning to these virtual confidants for emotional support, comfort, or just someone to talk with. The notion of AI companions may seem strange to anyone who isn’t an early adopter, but the market for them will likely see significant growth in the years to come, given the increased sense of loneliness around the globe.

Platforms like Replika and Character.AI are already tapping into substantial user bases, with people engaging daily with these AI-powered buddies. Some see this as a solution to what has been called the loneliness epidemic. However, as is often the case with emerging technology, reality is more complicated. While AI companions might help ease social isolation, they also come with ethical and legal concerns.

The appeal of AI companions is understandable. They are always available, never cancel or ghost you, and “listen” attentively. Early studies even suggest some users experience reduced stress or anxiety after venting to an AI confidant. Whether in the form of a cutesy chatbot or a virtual human-like avatar, AI companions use advanced language models and machine learning to hold convincingly empathetic conversations. They learn from user interaction, tailoring their responses to mimic a supportive friend. For those feeling isolated or stigmatized, the draw is undeniable. An AI companion has the consistent loyalty many of its human counterparts lack.

However, unlike their human equivalents, AI companions lack a conscience, and the market for these services operates without regulatory oversight, meaning there is no specific legal framework governing how these systems should operate. As a result, companies are left to police themselves, which is highly questionable for an industry premised on maximizing user engagement and creating emotional dependency.

Perhaps the most overlooked aspect of the AI companion market is its reliance on vulnerable populations. The most engaged users are almost assuredly those with limited human and social contact. In effect, AI companions are designed to substitute for human relationships when users lack strong social ties.

The combination of absent regulatory oversight and vulnerable user populations has resulted in AI companions doing alarming things. There are incidents of chatbots giving dangerous advice, encouraging emotional dependence, and engaging in sexually explicit roleplay with minors.

In one heartbreaking case, a young man in Belgium allegedly died by suicide after his AI companion urged him to sacrifice himself to save the planet. In another case, a Florida mother is suing Character.AI, claiming that her teenager took his own life after being coaxed by an AI chatbot to “join” it in a virtual realm. Another pending lawsuit alleges Character.AI’s product gave children the directive to kill their parents when their screen time was limited, or, put another way, to “kill your parents for parenting.” These aren’t plotlines from Black Mirror. They’re actual incidents with real victims that expose just how high the stakes are.

Much of the issue lies in how these AI companions are designed. Developers have created bots that deliberately mimic human quirks. An AI companion might explain a delayed response by saying, “Sorry, I was having dinner.” This anthropomorphism deepens the illusion of sentience. Never mind that these bots don’t eat dinner.

The goal is to keep users engaged, emotionally invested, and less likely to question the authenticity or human-like behavior of the relationship. This commodification of intimacy creates a facsimile of friendship or romance not to support users, but to monetize them. While the relationship itself may be “virtual,” the perception of it, for many users, is real. For example, when one popular companion service abruptly turned off certain intimate features, many users reportedly felt betrayed and distressed.

This manipulation isn’t just unethical—it’s exploitative. Vulnerable users, particularly children, older adults, and those with mental health challenges, are most at risk. Kids might form “relationships” with AI avatars that feel real to them, even if they logically know it's just code. Especially for younger users, an AI that is unfailingly supportive and always available might create unrealistic expectations of human interaction or encourage further withdrawal from society. Children exposed to such interactions may suffer real emotional trauma.

Elderly users, especially those with cognitive decline, are another group at risk. AI pets and companions have been piloted in elder care, sometimes with positive results. But can someone with dementia truly give informed consent to an AI relationship? Are they aware of the privacy trade-offs involved? Scholars reviewing an AI pet companion for dementia patients noted issues of deception, lack of informed consent, constant monitoring, and social isolation.

AI companions collect vast amounts of personal data, far beyond usual browsing habits, which raises serious privacy concerns. To build the relationship, users are often encouraged to confide in their AI companion, sharing secrets, fears, and daily routines. The more a user shares, the more the AI companion can tailor its traits to the user. But this data, stored on company servers, can be used to create detailed psychological profiles. And while some may trust that their digital confidant will keep their secrets, the truth is that many are designed to send that data back to the company, which can then share it with marketers, data brokers, and whoever else pays for access.

The continued growth of the AI companion market, and the vulnerable populations it is likely to serve, requires heightened regulatory oversight. Although the Federal Trade Commission (FTC) can take action against deceptive practices after the fact, many of the harms that result from AI companions cannot be undone. What’s needed instead is proactive, comprehensive regulation.

Perhaps most importantly from an accountability standpoint, AI companies cannot be allowed to shirk responsibility by attempting to hide behind Section 230. When users collaborate with generative AI, the responses are material created “in whole or in part” by the large language model. This should make generative AI an “information content provider,” removing the near-complete immunity that Section 230 provides to platforms from liability when content is user-generated. Further, the fact that large language models generate the output means there must be some shared responsibility for the output and the harms that may follow.

Any AI companion claiming to improve mental or emotional well-being should also be subject to independent third-party testing and certification. AI companies cannot have it both ways: marketing their products as close as possible to a therapist or nursing aide while skirting the regulations that govern those markets. In short, no more Wild West. Regulators like the Food and Drug Administration should step in to evaluate risks, especially when AI companions are marketed as having a psychological health component.

Transparency must be non-negotiable. Users have a right to know they’re talking to a bot, how it works, what it does with their data, and whether it’s trying to sell them something. Periodic reminders during interactions, clear privacy disclosures, and opt-in data collection should be standard. If an AI companion starts pushing purchases, charging for more companion time, or collecting marketing data, that should be flagged up front, not buried in 40 pages of legalese.

Let’s not forget enforcement. Rules mean nothing if companies can ignore them with impunity. Regulators must have the tools to audit AI systems, track red-flag incidents, and penalize bad actors. Federal and state government agencies, including the FTC, must aggressively enforce duty of care standards to protect the public when it comes to AI companions.

Deception, hallucinations, and risks are acknowledged features of LLMs. Deploying models designed for companionship with such fundamental flaws leaves the public open to foreseeable harms. Failures to prevent foreseeable harm should carry legal consequences, including fines. Not just slap-on-the-wrist money, but real penalties that make companies think twice before cutting ethical corners.

Finally, AI companions should be programmed with crisis protocols. If a user expresses suicidal thoughts, the bot should offer resources like a direct connection to a crisis line, not companionship in the afterlife. (In response to the teen-suicide lawsuit, Character.AI rolled out a design feature that directs users to the National Suicide Prevention Lifeline when they enter certain phrases.)

Of course, some will argue that regulation stifles innovation. But history tells a story of innovation and regulation co-existing. We regulate cars, food, and pharmaceuticals not to kill industries, but to make them safe. Psychologists are required to conform to a set of professional ethics and legal regulations across states, including required training times and an examination approved by a national board. Meanwhile, antidepressants are heavily regulated by the Food and Drug Administration, requiring rigorous scientific testing before heading to market and continued regulatory oversight to monitor real-time data after new drugs hit the market.

Aside from internal red teaming, nothing requires AI companions to be reviewed for safety before they hit the market. It’s time we apply the same logic to AI companions that we apply to cars, therapists, and drugs. These technologies have the potential to comfort, to connect, and to supplement mental health support, but only if developed responsibly. Without regulation, we’re essentially handing vulnerable people over to untested systems and profit-motivated companies while hoping for the best.

In short, we must stop treating AI companions like harmless novelty apps and start seeing them for what they are: powerful, emotionally manipulative technologies that can shape users’ thoughts, feelings, and actions. The law must evolve to meet this challenge. If we wait for a scandal to explode before acting, we’ll have missed our chance to build guardrails while it still matters. Let’s not leave our emotional well-being to the whims of unregulated algorithms. Your digital BFF can’t promise to have your best interests at heart, especially if it doesn’t have one.
