Journalism Meets AI: Shaping the Future with Smart Policies

Amy S. Mitchell / Dec 13, 2023

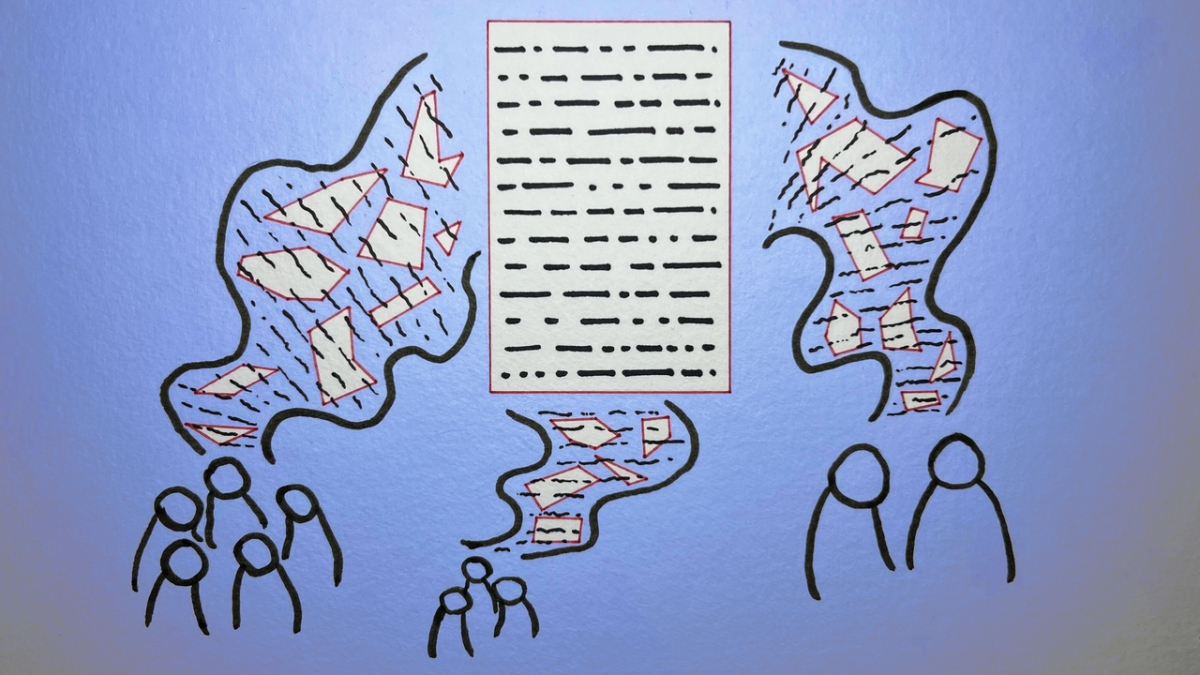

Yasmine Boudiaf & LOTI / Better Images of AI / Data Processing / CC-BY 4.0

Amid the various discussions and proposed policies aimed at managing AI’s use and influence in society, journalism and the delivery of fact-based news to the public can get overlooked. These need to be front and center. As we’ve seen in so many different scenarios these last few years, a clear understanding of current issues and events is critical to a functioning society. Artificial intelligence in all its forms – whether generative or other – has the ability to greatly aid and greatly harm an independent news media and access to fact-based news.

Determining policy and legal parameters for news-related AI practices, categorizing AI types and addressing copyright questions all pose significant challenges. Rapidly changing technologies make legislation even more complicated to develop and adopt, but they also make it all the more important that any proposal, especially one involving copyright, is adaptive and accounts for the evolving nature of AI technologies.

Experts convened by the Center for News, Technology & Innovation (CNTI). Courtesy CNTI

A recent gathering of globally oriented, cross-industry experts convened by the Center for News, Technology & Innovation (CNTI), drawn from organizations including the Associated Press, Axios, Núcleo Jornalismo and Microsoft, shed light on key principles for shaping effective policies governing AI in journalism. As technological innovation intersects with societal impact, it is imperative to work together, using evidence and research, to chart a course that ensures the responsible, equitable, and transparent use of AI.

Some of the takeaways from these technology and journalism experts included:

Better Clarity in Articulation and Categorization:

Experts highlighted the need for clear definitions and categories in AI. The lack of conceptual clarity surrounding AI continues to pose a significant challenge. Effective policy requires precise definitions and clear differentiation between various AI technologies. Any proposed solution won’t rely on a single, exhaustive definition of AI, but rather on an overarching understanding built upon existing definitions.

Specificity in Policy for Contextual Evaluation:

The experts agreed that whether AI use is beneficial or harmful depends on its context and degree of use. Effective policy must, therefore, be specific and context-sensitive. Determining the optimal level of transparency requires careful consideration, especially when applied to areas like hiring processes or modeling trillions of tokens in large language models.

Building on Prior Policy:

Acknowledging that policy development is a layered process, the experts stressed the importance of considering new policies in the context of existing ones. Striking a balance between clear definitions and overarching principles that can withstand technological change emerged as a challenge. The core principle of a free and independent press, in a comprehensive sense, was highlighted as a lens through which AI use in journalism should be examined.

Addressing Disparities:

The discussion emphasized the need to address global disparities in the uses and benefits of AI, particularly generative AI, as a public good. Recognizing that certain regions lack representation in AI models and that generative AI models often replicate social biases, the experts called for policies that democratize access to AI tools.

Thorough Consideration of Inputs and Outputs:

The experts delved into copyright law and its implications for AI in journalism. They stressed the need for balanced attention to AI inputs (e.g., training data, queries) and outputs (e.g., AI-generated content, public dissemination). Potential policy approaches were outlined, ranging from treating all AI-generated output as being in the public domain to granting short-term protections for AI-generated content.

The conversation expanded to encompass broader considerations of liability in AI policymaking. Transparency from AI model developers was highlighted as crucial for establishing trust in AI tools and their outputs.

In navigating the AI landscape in journalism, adherence to these principles will pave the way for responsible and inclusive policies. Collaboration among policymakers, publishers, technology developers and academics will be key to addressing risks and opportunities.

This is the first in a series of pieces from the CNTI team based on the organization's Issue Primers, which summarize research and policy from around the world across 15 specific issues. These serve as starting points for cross-industry discussions and collaborative research. The full list of CNTI's priority issues is also available here.
