June 2023 U.S. Tech Policy Roundup

Kennedy Patlan, Alex Kennedy, Rachel Lau / Jun 30, 2023

Rachel Lau, Kennedy Patlan, and Alex Kennedy work with leading public interest foundations and nonprofits on technology policy issues at Freedman Consulting, LLC. Alison Talty, a Freedman Consulting Phillip Bevington policy & research intern, also contributed to this article.

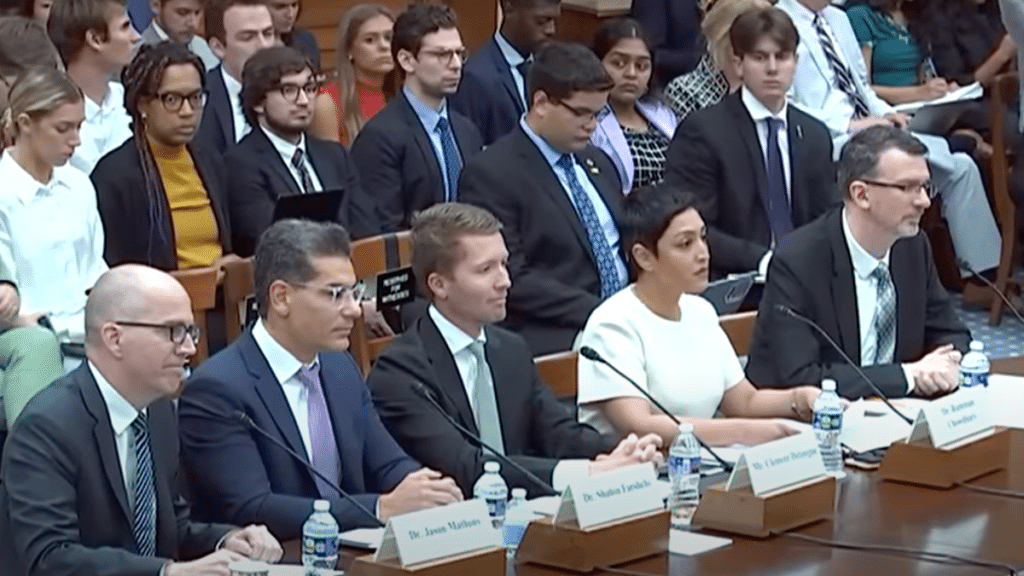

AI took the spotlight for much of June: Senate Majority Leader Chuck Schumer (D-NY) launched the SAFE Innovation Framework for establishing legislative priorities on AI, the National AI Advisory Committee published its first report, and over 60 civil society organizations called on the White House and federal agencies to prioritize civil rights protections and equity provisions in AI policy. Early in the month, Sen. Richard Blumenthal (D-CT) expressed interest in proposing a new agency to coordinate AI issues, alongside other proposals for new digital agencies. Later, the House Committee on Science, Space, and Technology held a hearing, “Artificial Intelligence: Advancing Innovation Towards the National Interest,” which included testimonies from business, academic, and civil society representatives. Google urged the federal government to keep AI oversight spread across multiple agencies, while Meta laid out how it deploys AI across its platforms.

Tech policy activity outside AI spanned a wide range of concerns. Rep. Lou Correa (D-CA), who opposed a variety of tech antitrust bills in the previous Congress, replaced Rep. David Cicilline (D-RI) as the Democrats’ ranking member for the House Judiciary subcommittee on antitrust. In June, the Senate also held a confirmation hearing for potential Federal Communications Commission (FCC) commissioner Anna Gomez.

In agency news, the FCC launched the Privacy and Data Protection Task Force for interagency coordination on privacy issues. Over 40 civil society organizations also published a letter in response to the Federal Trade Commission’s (FTC) Commercial Surveillance and Data Security rulemaking (ANPR), calling for “specific, concrete civil rights protections.” The FTC also published a blog post exploring potential competition issues raised by generative AI.

Kids’ online safety and data privacy continued to be a hot topic on the Hill, with 20 House representatives requesting information from social media companies on measures they are taking to protect children online. The Senate Health, Education, Labor and Pensions (HELP) Committee held a hearing on youth mental health, and Sen. Ted Cruz (R-TX) published a letter to Mark Zuckerberg requesting information on Meta’s actions addressing child sexual abuse material on its platform. Over 60 organizations sent a letter urging congressional leaders to bring the Children and Teens' Online Privacy Protection Act (COPPA 2.0, S. 1418) to a vote as kids’ online safety bills have stalled in Congress. Also this month, Microsoft was charged $20 million in fines for violating the FTC Act and COPPA by failing to protect kids’ accounts on Xbox Live.

In other platform-related news, Senate Judiciary and Senate Commerce panels sent a letter to TikTok CEO Shou Chew questioning TikTok’s management of American user data. Meanwhile, various platforms have relaxed their misinformation policies. YouTube rolled back its policy against U.S. election denialism, and Meta reinstated Robert F. Kennedy Jr.’s Instagram account for his presidential run – he was banned in 2021 for spreading COVID misinformation. Democratic members of Congress and civil rights organizations also wrote letters to Neal Mohan, CEO of YouTube, regarding concerns over the platform’s handling of election misinformation. Finally, a lower-court ruling from the Ninth Circuit blocked Facebook from using Section 230 to excuse discriminatory advertising on the platform, granting policymakers and advocates a platform accountability win following SCOTUS setbacks in May.

The below analysis is based on techpolicytracker.org, where we maintain a comprehensive database of legislation and other public policy proposals related to platforms, artificial intelligence, and relevant tech policy issues.

Read on to learn more about June U.S. tech policy highlights regarding efforts to promote equity in AI, action around the Journalism Competition and Preservation Act, and other new legislation.

Major Developments in Federal AI Landscape: Advocacy from Civil Rights Groups, National AI Advisory Committee Year One Report, SAFE Innovation Framework

- Summary: On June 21, Senate Majority Leader Chuck Schumer (D-NY) announced a two-part proposal for congressional leadership on AI. The proposal consists of Senator Schumer’s SAFE Innovation Framework and a call to action for Congress to “invent a new process to develop the right policies to implement our framework,” which will be accomplished through a series of “AI Insight Forums” to take place later this year. The SAFE Innovation Framework aims to protect national security and economic security for workers, support the use of responsible systems that combat misinformation and bias and protect copyright and intellectual property rights, ensure that AI systems are aligned with democratic values and processes, promote explainability, and support U.S. innovation.

- On June 13, The Leadership Conference on Civil and Human Rights, the Center for Democracy & Technology (CDT), and over 60 other organizations sent a public letter on AI policy to the directors of the Office of Science and Technology Policy (OSTP), the Office of Management and Budget (OMB), and the Domestic Policy Council (DPC). The letter commended the Biden-Harris Administration for its recent efforts to center civil rights in technology policy and called for continued leadership on AI, including ensuring “the necessary next steps of further implementation, education, and enforcement across the whole of government.” It urged the administration to make the AI Bill of Rights binding administration policy through OMB guidance, called upon agencies to prioritize coordinated follow-through on Executive Order 14091 and the Blueprint for an AI Bill of Rights, and called for sustained public engagement with diverse stakeholders to better understand and combat algorithmic discrimination.

- On June 22, the National AI Advisory Committee (NAIAC) released its first report, offering recommendations for the U.S. government to “maximize the benefits of AI while reducing its harms.” The report focuses heavily on U.S. leadership in AI innovation, proposing 24 discrete actions focused on “creating and organizing federal AI leadership roles; standing up research and development initiatives; training civil servants in AI; increasing funding of specific programs; and more.” As a result, NAIAC committee members Janet Haven, Liz O’Sullivan, Amanda Ballantyne, and Frank Pasquale used their “Committee Member Perspectives” to urge the Committee and the U.S. to “lead from a position that prioritizes civil and human rights over corporate concerns.” They recommended that NAIAC ground its future work “in a foundational rights-based framework, like the one laid out in OSTP’s October 2022 Blueprint for an AI Bill of Rights,” and that the committee should use a rights-based framework to address urgent issues like the use of AI in criminal legal settings, healthcare, public benefits, housing, hiring, employment, and education.

- Stakeholder Response: Via Twitter, NAIAC Committee Member Janet Haven reiterated the need for a rights-based framework for leadership on AI, noting that “AI harms are not hypothetical; they are already here.” She also noted that she and fellow Committee member Liz O’Sullivan will chair a new NAIAC working group focused on “advancing a rights-respecting AI governance framework.” CDT CEO Alexandra Reeve Givens testified before the Senate Committee on the Judiciary Subcommittee on Human Rights and the Law, reiterating the call for federal intervention in the numerous areas where AI is already impacting human rights. Secretary of Commerce Gina Raimondo announced the launch of a new National Institute of Standards and Technology public working group on AI, which will assess and develop guidance for generative AI technologies.

- What We’re Reading: Time, Politico, and The Verge examined Senator Schumer’s proposed legislative plan on AI. Justin Hendrix (CEO and Editor of Tech Policy Press) and Paul M. Barrett (Deputy Director of the NYU Stern Center for Business and Human Rights) co-authored an article in Tech Policy Press urging Senator Schumer to “be wary of advice from big tech companies” and use the Senate to “address the fundamentals of regulation.” (The piece followed the release of a report on generative AI that the two authored.) In The New York Times, Daron Acemoglu and Simon Johnson published an op-ed calling for AI regulation to guard against surveillance capitalism and data monopolies. Politico reported on a coalition letter from civil rights organizations to the Federal Trade Commission (FTC) calling for rules to address algorithmic discrimination. Addressing the focus on existential threats posed by AI, The Guardian examined comments from European Commissioner for Competition Margrethe Vestager, who posits that AI-based discrimination is a much more pressing concern than possible existential threats due to AI. The Guardian also interviewed Signal Foundation President Meredith Whittaker about “AI hype.”

Senators Advance the JCPA and Reintroduce AICOA, Prompting Civil Society Fervor

- Summary: The Senate Judiciary Committee advanced the Journalism Competition and Preservation Act (JCPA, S.673) on a bipartisan 14-7 vote, prompting a wave of civil society response as the contentious bill moves forward to potential full Senate consideration. The JCPA creates an antitrust carve-out for news outlets, allowing news publishers with fewer than 1,500 full-time employees to engage in collective bargaining with covered platforms, and establishes requirements for covered platforms to negotiate in good faith. The bill aims to shift the current power imbalance between platforms like Google and Facebook and independent news publishing entities, which impacts negotiation on issues like pricing, terms, and conditions. The JCPA advanced from the Senate Judiciary Committee in the last Congress, but was never brought to a floor vote, despite a push to include it in must-pass legislation at the end of 2022. The House companion was introduced by Rep. David Cicilline (D-RI) in the 117th Congress, but did not make progress out of the subcommittee.

- Sens. Amy Klobuchar (D-MN), Dick Durbin (D-IL), Lindsey Graham (R-SC), Richard Blumenthal (D-CT), Josh Hawley (R-MO), Mazie Hirono (D-HI), Mark Warner (D-VA), and Cory Booker (D-NJ) reintroduced the American Innovation and Choice Online Act (AICOA). AICOA was first introduced and advanced out of the Senate Judiciary Committee in the 117th Congress by a 16-6 vote. The bill also cleared the House Judiciary Committee, but the 117th Congress ended without the bill receiving a floor vote in either chamber. AICOA seeks to establish limits for “dominant digital platforms to prevent them from abusing their market power to hurt competition, online businesses, and consumers.”

- Stakeholder Response: The JCPA has sparked a range of responses from civil society and business stakeholders. The American Economic Liberties Project published a policy brief on the bill outlining the potential for the bill to realign market forces to support small news publishers. The News/Media Alliance, a trade association representing thousands of North American newspapers, also applauded the passage.

- In contrast, the Chamber of Commerce, Free Press, the Computer & Communications Industry Association, NetChoice, the ACLU, and TechNet published statements in opposition to the bill, citing concerns around content moderation, dis- and misinformation, as well as potential First Amendment violations. More than 20 public interest organizations, trade associations, and companies released a letter opposing the JCPA. Opposing groups also argued that the bill would not empower small publishing entities or increase the diversity of news organizations as intended, but would instead lead to more consolidation and allow publishers to charge platforms for links to their content, potentially limiting access to a diversity of credible online news.

- AICOA also triggered widespread civil society response, with TechNet, the Disruptive Competition Project, NetChoice, and R Street in opposition to the bill, arguing that AICOA would undermine cybersecurity and the U.S.’s competitiveness in the world market, give the Federal Trade Commission too much control over businesses, and ban the operation of popular products. In contrast, the Institute for Local Self-Reliance, Public Citizen, Public Knowledge, The Tech Oversight Project, and Consumer Reports applauded the reintroduction, arguing that the bill would boost innovation and stop anti-competitive practices by major tech companies.

- What We’re Reading: BBC reported on Google and Meta’s plans to restrict news access in Canada following the Canadian parliament’s passage of the Online News Act, a law similar to the JCPA. On the Tech Policy Press podcast, Ben Lennett spoke to McGill University professor Taylor Owen about what to expect in Canada. The California Journalism Preservation Act (CJPA), also a similar bill to the JCPA, passed out of the California State Assembly this month, advancing the bill to the State Senate. CNBC wrote about Meta’s threats to stop providing news access for Californians in response to the CJPA’s passage out of the assembly. The Seattle Times analyzed the relationship between the CJPA and JCPA. The Verge published an article on Google’s changes to its News app and the company’s partnerships with news media.

Other New Legislation and Policy Updates

- Transparent Automated Governance (TAG) Act (S.1865, sponsored by Sen. Gary Peters (D-MI)): This act requires that the Director of the Office of Management and Budget create guidance for agencies to be transparent about their use of automated and AI-augmented systems for public interactions. This guidance must include provisions on when agencies must notify members of the public that they are interacting with an automated or augmented system or that a “critical decision” has been made by one of these systems. On June 14, the TAG Act was reported from the Committee on Homeland Security and Governmental Affairs on a 10-1 vote.

- Platform Accountability and Transparency Act (S. 1876, sponsored by Sen. Christopher Coons (D-DE)): This bill would establish a research program by the National Science Foundation (NSF) and the FTC to explore "the impact of digital communication platforms on society." The act would provide safe and encrypted pathways for independent researchers to access the data these platforms hold. To meet the requirements for qualification, projects must be conducted in the public interest, involve research on a digital communication platform, and be non-commercial in nature. This bill was cosponsored by Sens. Bill Cassidy (R-LA), Amy Klobuchar (D-MN), John Cornyn (R-TX), Richard Blumenthal (D-CT), and Mitt Romney (R-UT).

- No Section 230 Immunity for AI Act (S.1993, sponsored by Sens. Josh Hawley (R-MO) and Richard Blumenthal (D-CT)): This bipartisan legislation clarifies that the use of generative AI does not qualify for immunity under Section 230 of the Communications Decency Act of 1996. This legislation would potentially make it easier for companies to be sued for any harm done by generative AI models.

- National AI Commission Act (H.R.4223, sponsored by Reps. Ken Buck (R-CO), Ted Lieu (D-CA), and Anna Eshoo (D-CA)): The bipartisan National AI Commission Act would establish a blue-ribbon commission that would focus on AI, its risks, and potential regulatory structures. The commission would be composed of 20 members, half selected by Democrats and half by Republicans, who could use their experience in the fields of AI, industry, labor, and government to produce reports on AI regulation.

Public Opinion Spotlight

From April 10-13, 2023, Morning Consult conducted a poll of 1,783 U.S. adults measuring the level of trust Americans have in social media companies regarding their personal data. They found that:

- Only 1 in 5 social media users stopped using a social media platform in the year preceding the survey despite the fact that fewer than 2 in 5 users trust social media companies with their personal data.

- Although the majority of users worry about data privacy, 3 in 4 users who are distrustful of social media report that they feel it is too difficult to protect data no matter what, which they give as their reason for remaining on social media platforms. 7 in 10 users who distrust social media companies believe that their data is already not private, regardless of their social media usage.

- 2 in 5 users are willing to give up data privacy to receive personalized content from platforms, and 3 in 5 are willing to do the same to stay in touch with loved ones or receive discounts.

The American Edge Project, a Facebook-funded organization, released a survey conducted between April 26 and May 5, 2023 of 1,005 U.S. voters, 1,011 U.K. voters, 1,003 German voters, and 500 Belgian voters. The results reveal that despite a number of regulatory and enforcement conflicts, voters in the U.S. and voters in the E.U. remain united in their shared values and in their concern over China and Russia’s growing technological power. Key findings include:

- 81 percent of U.S. voters and 74 percent of E.U. voters believe China and Russia’s growing technological power threatens national security; 80 percent of U.S. voters and 73 percent of E.U. voters believe it threatens the economy.

- 87 percent of U.S. voters and 78 percent of E.U. voters oppose heavy regulation in their country’s technology sector. Even further, 86 percent of U.S. voters and 79 percent of E.U. voters are concerned that if the U.S. government and European governments continue to regulate each other's technology too heavily, China will gain too much power in the technology sector.

- In an increase from previous years, 51 percent of U.S. voters and 47 percent of E.U. voters favor internet rules created by a potential U.S.-E.U. coalition.

The Anti-Defamation League surveyed 2,139 American adults from March 7-24, 2023 and 550 13-17-year-olds from March 23-April 6, 2023 about their experiences with hate and harassment online. Key findings include:

- In a 12-point increase from last year's survey, 52 percent of adults have experienced social media-based harassment at some point in their lives. 33 percent of adults have experienced online harassment in just the past year, which is an increase of 10 points from last year.

- Certain demographics were more likely to have experienced online harassment. 47 percent of LGBTQ+ people, 38 percent of Black people, and 38 percent of Muslims had experienced online harassment in the past year, all higher than the percentage of total adults who have experienced harassment recently. 51 percent of transgender people have experienced online harassment in the past year.

- For teenagers, 51 percent had experienced online or social media-based harassment in the past year, an increase of 15 points from last year.

A recent survey of 2,198 U.S. adults, conducted May 24-26, 2023, by Morning Consult, found that over half of U.S. adults support regulating AI. More specifically, they found that:

- 53 percent of U.S. adults think that the development of AI technology should be regulated by the government. This includes 57 percent of Democrats and 50 percent of Republicans.

- 54 percent of U.S. adults support creating a new federal agency that would regulate AI development. This includes 65 percent of Democrats and 50 percent of Republicans.

- 55 percent of U.S. adults support a tax on AI companies that would be used to pay workers who are displaced by AI. This includes 65 percent of Democrats and 45 percent of Republicans.

We welcome feedback on how this roundup and the underlying tracker could be most helpful in your work – please contact Alex Hart and Kennedy Patlan with your thoughts.
