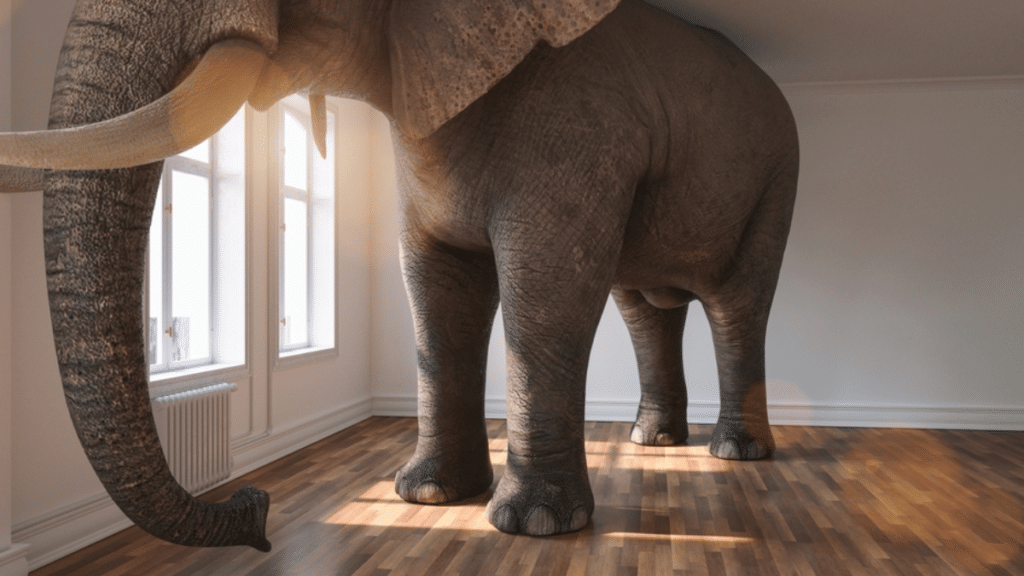

Monopoly Power Is the Elephant in the Room in the AI Debate

Max von Thun / Oct 23, 2023

Max von Thun is the Director of Europe and Transatlantic Partnerships at the Open Markets Institute, an anti-monopoly think-tank.

In late September, Amazon announced a sweeping new “strategic collaboration” with leading AI startup Anthropic worth several billion dollars. The deal involves an unusual degree of coordination between the two companies, with Anthropic committing to using Amazon’s cloud infrastructure and proprietary chips to build, train, and deploy its foundation models, while giving Amazon’s engineers and platforms privileged access to those models. In return, Amazon will invest up to $4 billion in Anthropic and acquire a minority stake in the company.

While the deal may not look problematic at first glance, it is just the latest example of Big Tech’s efforts to corner the nascent generative AI market through strategic investments and partnerships. In exchanging model access for use of the highly concentrated computing resources they own and control, the tech giants are effectively buying themselves an insurance policy, ensuring that even if their own in-house AI efforts flop, their digital dominance will be maintained.

Microsoft’s $10 billion partnership with OpenAI, which similarly gives Microsoft privileged access to OpenAI’s technology while locking in its dependence on Microsoft’s cloud computing infrastructure, is another clear illustration of this strategy. Other leading startups that have inked major deals with Big Tech firms include Hugging Face (Amazon), Cohere (Google, Nvidia), Stability AI (Amazon) and Inflection AI (Microsoft, Nvidia). These partnerships appear to be serving the same purpose as “killer acquisitions” in the past – think of Facebook’s acquisition of WhatsApp or Google’s purchase of YouTube – raising serious concerns about fair competition in the fledgling AI market.

Even without the effect of mergers and partnerships between Big Tech firms and leading AI startups, there are good reasons to believe that – left to itself – the market for foundation models trends towards consolidation. Vanishingly few companies have access to the cloud infrastructure, advanced chips, data and expertise needed to train and deploy cutting-edge AI models. The pool is likely to shrink further as network effects, economies of scale, and user lock-in take increasing effect.

Should we be worried about a handful of incumbents controlling AI? There is plenty of evidence to show that market concentration undermines innovation, reduces investment, and harms consumers and workers. This is especially true in digital markets – from search engines and app stores to e-commerce and digital advertising – where a few corporations have gained so much power that they are able to extract value from and impose terms on everyone in their orbit, while having a disproportionate say over the information we consume and the ways in which we communicate.

That includes the exploitative tolls Amazon, Apple and Google are able to impose on the traders and developers dependent on their online superstores, the role of a few huge social media platforms in disseminating disinformation and fueling polarization, and the hollowing out of the media industry as a result of the tech giants’ digital advertising monopoly. These monopolistic abuses are not only bad in economic terms, but also bear significant responsibility for the perilous state our democracies find themselves in today.

Sadly, it isn’t difficult to envision a similar future for AI, where a few dominant firms monopolize critical upstream inputs (cloud infrastructure, foundation models) and use their position to dictate terms to everyone else. Unlike the previous wave of technological disruption, in which plucky upstarts rose from obscurity to create and dominate entirely new markets, when it comes to generative AI, the incumbents have a head start. This isn’t just because of their aforementioned advantages when it comes to computing power, data and talent. They also control ubiquitous platforms that they deploy to nudge or lock existing users into using their favoured AI services – from Microsoft rolling out OpenAI’s technology across its suite of office applications, to Google building generative AI into its search engine.

Through their control of AI, Big Tech firms are likely to steer the technology in a direction that serves their narrow economic interests rather than the public interest, despite the grave societal harms this approach has inflicted over the past two decades. Free from the restraints of competition and regulation, they can be expected to deploy AI to maximize screen time, turbocharge invasive data collection, and amplify manipulative targeted advertising, all while doing their utmost to keep users confined in their walled gardens.

As we’ve seen time and time again in highly concentrated markets, this handful of AI giants would be in a position to use their monopoly power to exploit users and customers, erect formidable barriers to entry, and distort efforts to introduce regulation. Microsoft’s move to prevent rivals from using its search index to train generative AI tools is an early example of what such anti-competitive conduct could look like, but there are many ways monopoly power might manifest, from charging app developers extortionate fees for cloud and foundation model access, to obscuring rival AI offerings in app store and search engine results.

As is already happening, the AI giants would be able to convert their economic heft into regulatory influence, using their perceived expertise to hoodwink policymakers into watering down regulation or distracting them with far-off, improbable risks such as planetary extinction. Meanwhile, the resilience of critical industries and government services, many of which rely on the cloud and increasingly on AI, would be undermined by exposure to single points of failure, from server outages and cyberattacks to corrupted data and algorithms gone rogue.

Despite the clear trend towards concentration in AI, the debate on how to regulate it has so far paid little attention to the monopoly threat. High-profile legislative initiatives – in particular the European Union’s flagship AI Act – prioritize placing guardrails on AI (such as risk assessments, human oversight and transparency) to prevent its abuse, with little regard to the scale and power of the actors being regulated. Far worse are the “voluntary commitments” negotiated between governments and large corporations, which in addition to having no real bite, are a clear illustration of corporate capture.

Current regulatory efforts to prevent misuse of AI, while important, fail to acknowledge the central role market structure plays in determining how, when, and in whose interests a technology is rolled out. Trying to shape and limit AI’s use without addressing the monopoly power behind it is at best likely to be ineffective, and at worst will entrench the power of incumbents by regulating smaller players out of existence. On the other hand, if complemented by robust action to rein in monopolies, AI regulation will itself be more effective, as the power of corporations to evade, frustrate, or distort it will be greatly diminished.

Regulators need to learn from their past failures to rein in Big Tech by moving fast to prevent monopolization of AI and the technology stack it is built on. Encouragingly, there are already signs that this is happening. Just last month, the UK’s Competition and Markets Authority published a detailed study on the risks of concentration in foundation models, echoing similar concerns expressed by the US Federal Trade Commission (FTC). Meanwhile, competition authorities in France, the Netherlands and the UK are investigating monopolistic conduct in the markets for the advanced chips and cloud infrastructure that power advanced AI.

In seeking to keep the market for AI open, contestable, and accountable, there are five broad areas where policymakers would do well to focus their efforts.

- Competition authorities should closely scrutinize attempts by dominant firms to corner the market through takeovers, investments and partnerships, and intervene where necessary using existing powers to block illegal mergers and cartels. Google’s 2014 acquisition of DeepMind, Microsoft’s considerable stake in OpenAI, and Amazon’s recently concluded partnership with Anthropic should be at the top of their lists.

- Those same agencies should use their existing antitrust powers to investigate and where necessary punish anti-competitive practices designed to harm and exclude rivals and customers. These include unfairly bundling AI models and tools with other services, or limiting access to the data and software needed to develop competitive AI models. Big Tech firms in particular should be forced to compete on the merits, and not by leveraging their pre-existing dominance to unfairly crush challengers.

- Governments should use regulation to level the playing field and ensure foundation model and cloud providers behave fairly and responsibly. That includes using avenues such as the European Union’s Digital Markets Act, and Common Carrier regulation in the US, to ban dominant firms from discriminating against or otherwise exploiting their customers. It should also entail aggressively enforcing existing privacy and copyright laws, so that protected intellectual property and sensitive personal data are not used illegally or unethically to train AI models and services, as is already happening.

- There is a need to seriously entertain structural interventions to tackle Big Tech’s dominance at source. Given the instrumentality of computing power and data in advanced AI, potential measures include separating ownership of cloud infrastructure and foundation models, imposing interoperability requirements on competing cloud and AI platforms, and opening up access to the proprietary data held by the tech giants.

- There is a role for smart public investment in supporting challengers to Big Tech and steering AI in the public interest. In some limited cases this could entail governments developing cloud infrastructure and foundation models themselves, particularly where national security considerations apply. But the bigger opportunity lies in efforts to foster a more diverse ecosystem, including providing public funding to innovative startups, researchers and universities, and channelling public procurement towards alternatives to the tech giants.

We remain at an early stage in the development of a technology that presents both opportunities and risks. Trying to accurately predict what the market will look like in a decade is a fool’s errand. But taking action now, to stop it from being monopolized in the future, is not.
