Poll Finds Widespread Distrust of Social Media in US Election Cycle

Gabby Miller / Jun 26, 2024

More than half of the social media users surveyed distrust information they see in their feeds related to the 2024 US election, according to a new Tech Policy Press/YouGov poll. A significant number also believe that many of the political or election-related images or videos they’re seeing are AI-generated.

The online poll, which surveyed more than a thousand US adults from June 12 to June 14, asked respondents to consider both the trustworthiness of election-related information about political issues and candidates that they find on social media and the trustworthiness of the platforms’ election information tools.

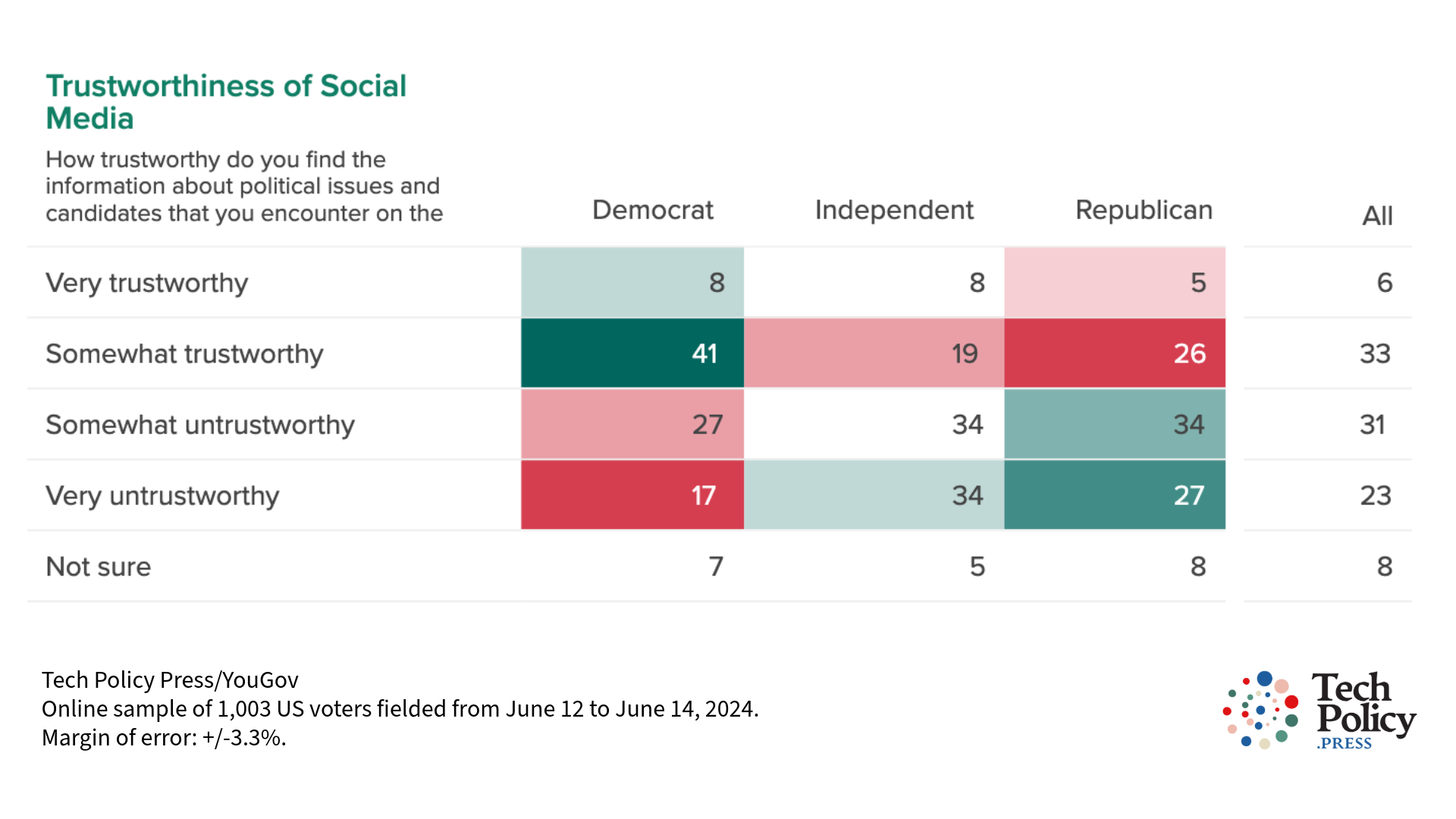

Responses to “How trustworthy do you find the information about political issues and candidates that you encounter on the social media platforms you use?” broken down by party self-identification.

A little more than half of all survey respondents find information about political issues and candidates on social media untrustworthy, with significant differences across party lines. Republicans were more likely to distrust election-related information, making up 27 percent of those who find it “very untrustworthy,” ten points more than Democrats, though Democrats’ overall distrust of what they see on social media is at a comparable level.

In contrast, only 39 percent of all respondents place some degree of trust in election-related information they find on social media. Democrats were significantly more likely than their Republican counterparts to say they find this information “somewhat trustworthy”; Republicans polled 15 points lower in this category.

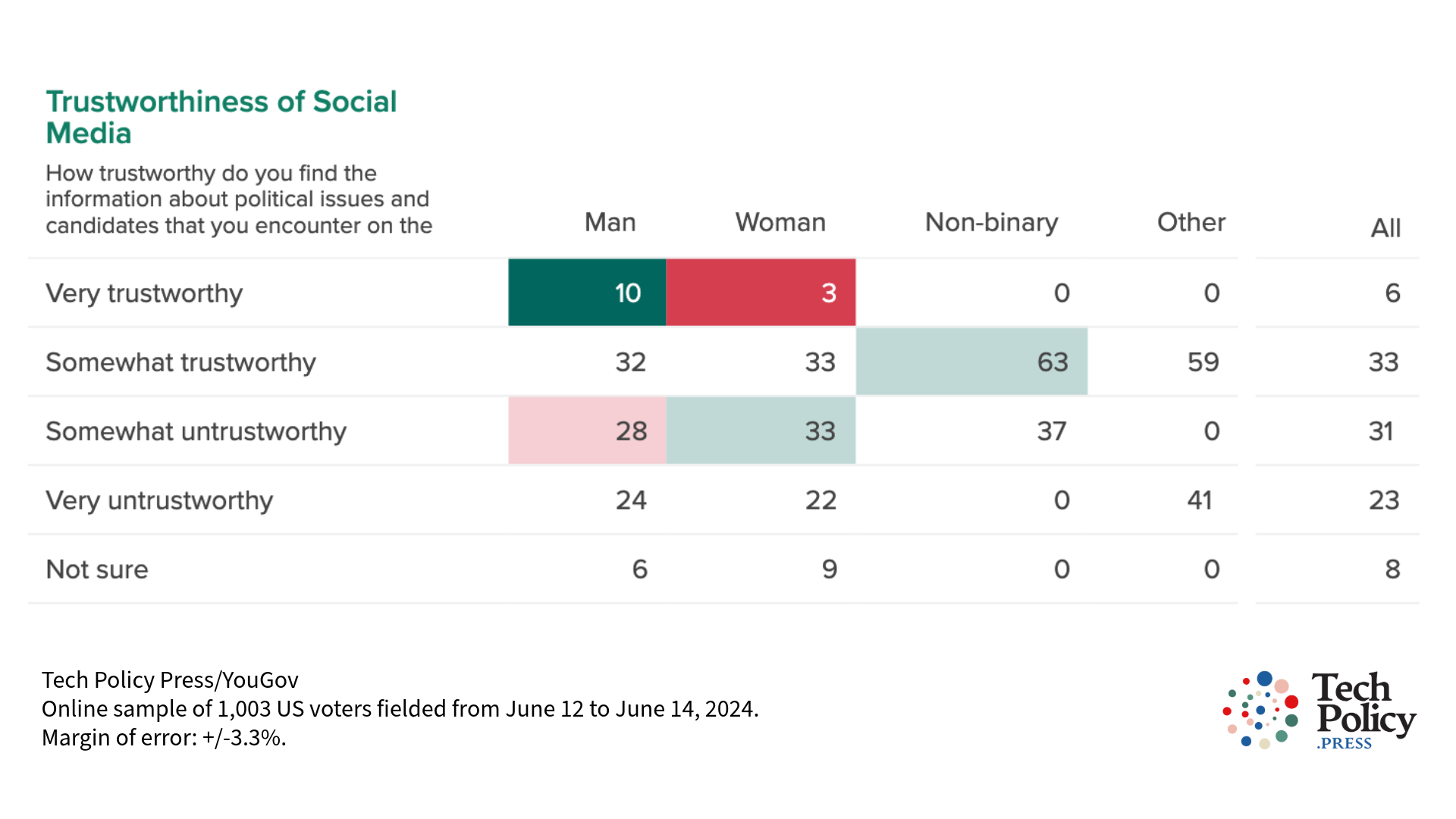

Only six percent of respondents found election-related information on social media “very trustworthy,” and on this measure there was no partisan difference. However, men were three times as likely as women to have a high level of trust in the political content they see in their social feeds.

Responses to “How trustworthy do you find the information about political issues and candidates that you encounter on the social media platforms you use?” broken down by respondents’ gender.

Among respondents who found it difficult to rate their level of trust in election-related information, a third said that social media helps them find trustworthy news sources that inform how they vote, while 40 percent said they use social networking sites to find trustworthy news sources but get their election information elsewhere.

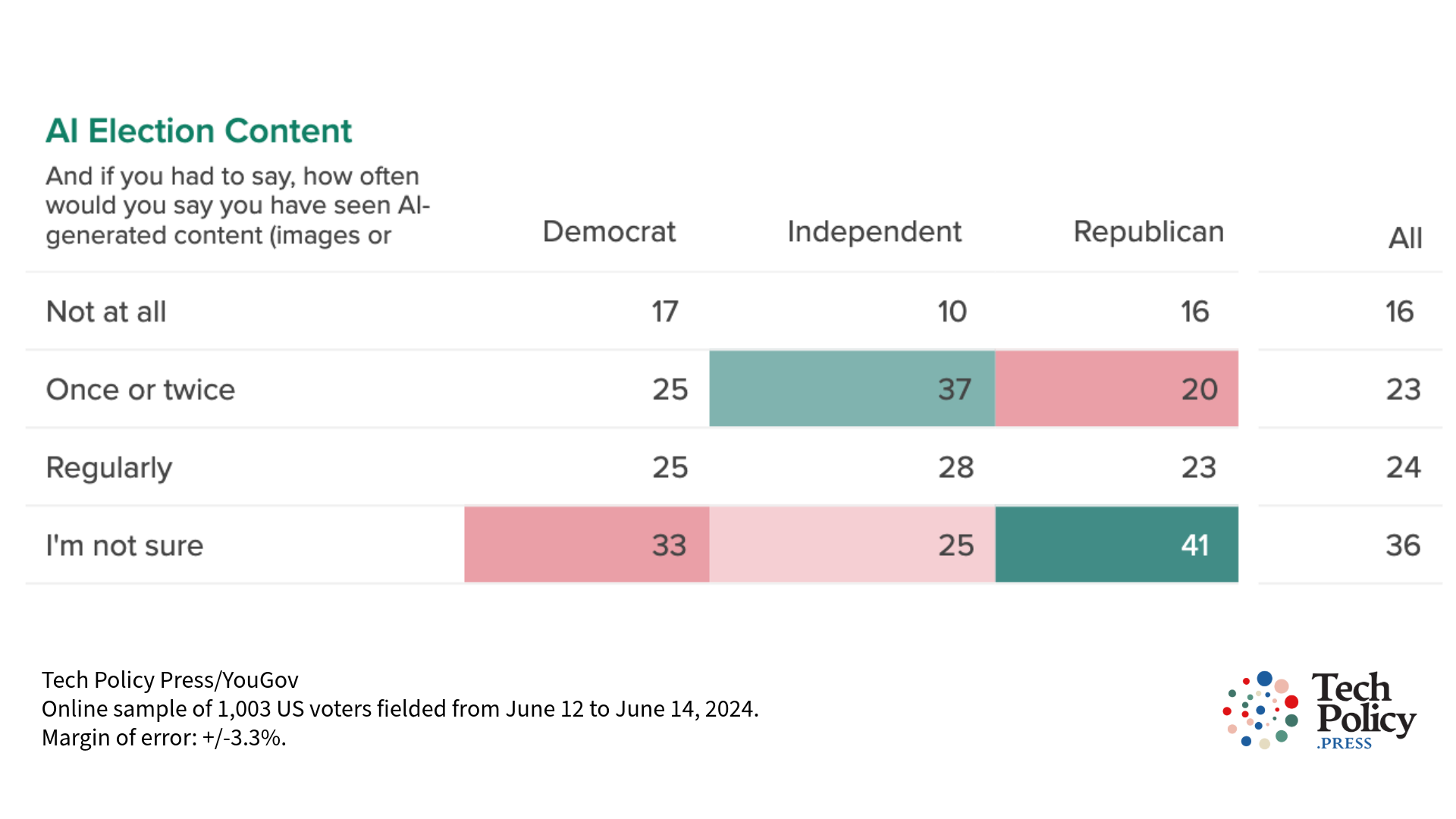

Respondents believe they are seeing AI-generated content about the 2024 elections in their feeds

Close to half of all respondents believe they have seen AI-generated visual content, such as images or videos, related to the 2024 US elections, and almost a quarter believe they’re encountering it regularly. It’s unclear how accurately respondents are estimating how much generative AI content they encounter online, given that no solid empirical evidence yet exists on its actual prevalence on social media. For now, no major social media platform has implemented a tool that can reliably detect AI-generated content. Meta recently announced that, ahead of the November election, it would label all AI-generated images on Facebook, Instagram, and Threads that are either produced with its own tools or carry identifiable markers.

Responses to the question “And if you had to say, how often would you say you have seen AI-generated content (images or video) in your social media feeds related to politics or the 2024 U.S. election?” broken down by party affiliation.

Part of the problem can be chalked up to the increasing difficulty researchers face in accessing social media data, according to Yunkang Yang, an assistant professor at Texas A&M University. Yang is one of the authors of a study that measured the prevalence of visual misinformation on Facebook during the 2020 election cycle. He told Tech Policy Press that “Elon Musk has made it almost cost prohibitive for most scholars to access” data from X, formerly Twitter. He also cited Meta, which will begin deprecating CrowdTangle, a tool many researchers use to study Facebook and Instagram, in August. “Being able to answer questions like how prevalent AI-generated content is on social media requires that social media companies make data easily accessible to researchers,” he said.

People in general, and even experts, struggle to distinguish between what’s real and what’s not online. “One way to detect lifelike, AI-generated visual content is via plausibility,” Yang said. For instance, most Americans would know that an image of a young Marlon Brando, who died in 2004, standing next to President Joe Biden at a campaign event is implausible, he explained. “Beyond that, it is very difficult for an average media consumer to tell the difference between a real photo and an AI-generated photo,” Yang said. “This really worries me.”

The poll results may spell trouble even if AI-generated media is less prevalent than people perceive it to be. “If people believe that they are seeing a lot of AI-generated content, then they might also approach real content with skepticism or believe that real content might be AI-generated,” said Kaylyn Jackson Schiff, an assistant professor in the political science department at Purdue University and co-director of the Governance and Responsible AI Lab (GRAIL). “That is the idea behind the concept of the ‘liar's dividend’ – a term coined by two legal scholars and explored in our research – which suggests that the proliferation of real deepfakes makes false claims of deepfakes more believable.”

Her colleague Daniel Stuart Schiff, an assistant professor of technology policy at Purdue and co-director of GRAIL, says awareness of the risks of AI-generated content might lead individuals to treat politicians’ cries of ‘deepfakes’ as more credible. “We appear to be in a bit of a Catch-22,” he told Tech Policy Press. “Like media literacy, digital literacy is commonly thought to be a key strategy for mitigating misinformation. But our recent research suggests that individuals with higher awareness of deepfakes are no less susceptible to false claims of misinformation by politicians.” The two co-authored a report on how politicians may use misinformation about fake news or deepfakes to evade accountability through the ‘liar’s dividend.’

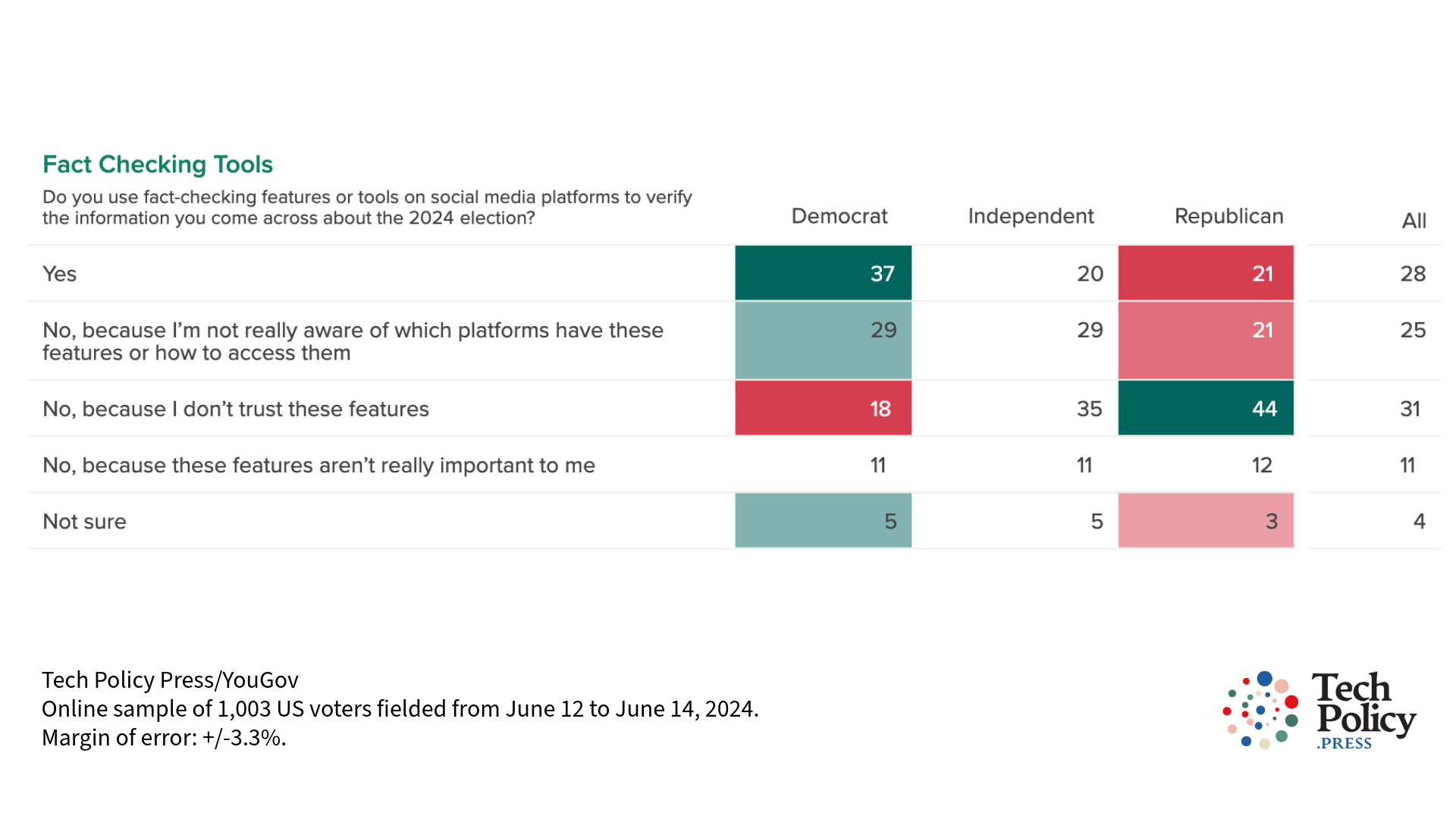

Respondents appear skeptical of platform fact-checking tools and information

Many social media platforms also provide users with tools or features for fact-checking information that appears in their feeds. However, more than half of the survey respondents reported not having used these tools for information related to the 2024 elections. A quarter of all respondents reported being unaware of which platforms offer these tools or how to access them, and about a third said they didn’t use platforms’ fact-checking features because they distrust them.

Responses to a question on the use of fact-checking features or tools provided by social media platforms broken down by party affiliation.

When polled about social media platform usage, respondents named Facebook and YouTube as their most widely used platforms, with 75 percent and 59 percent of all respondents, respectively, saying they had recently visited the two networks. Nearly half of all respondents said they had also recently used Instagram, whereas only a third had recently accessed X (formerly Twitter).

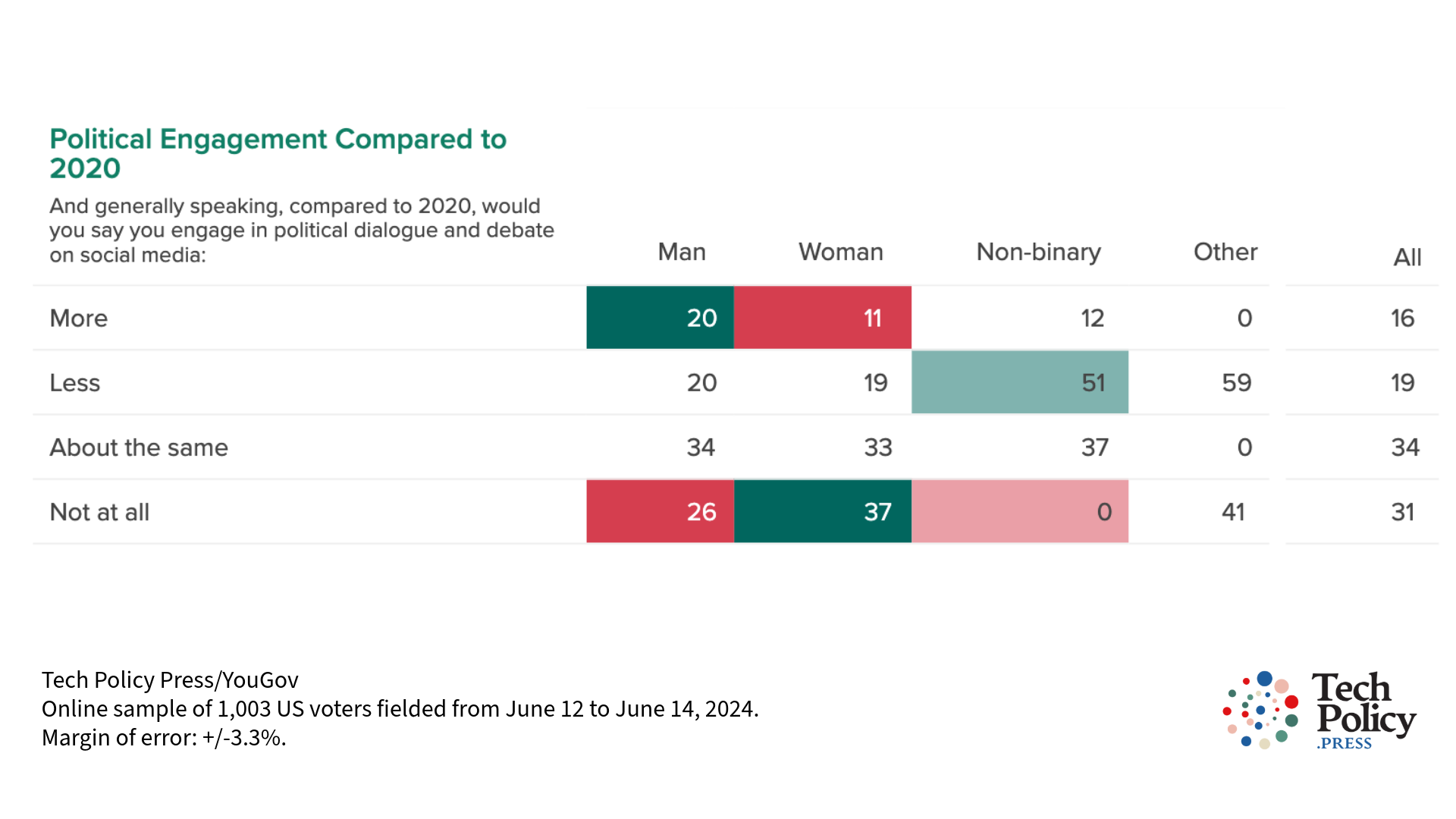

Responses to a question on respondents’ engagement in political debate online in the 2024 US elections, compared to 2020, broken down by gender.

Compared with the 2020 election cycle, women appear to be less politically engaged on the platforms than men in the current cycle. About one third of all respondents engage in political debate online about as much as they did during the last election cycle. Another third have opted out of engaging online entirely, with women respondents significantly more disengaged than men. And among those who have increased their political activity online, the share of men was double that of women.

The full dataset can be downloaded here.

Correction: An earlier version of this article said more than half of all respondents believe they have seen AI-generated visual content, such as images or videos, related to the 2024 US elections. The statement was corrected to reflect that the figure is 'close to half' rather than 'more than half.' We regret the error.
