Proposed Moratorium on US State AI Laws is Short-Sighted and Ill-Conceived

Marc Rotenberg, Merve Hickok, Christabel Randolph / May 21, 2025

Marc Rotenberg is executive director, Merve Hickok is president, and Christabel Randolph is associate director at the Center for AI and Digital Policy (CAIDP).

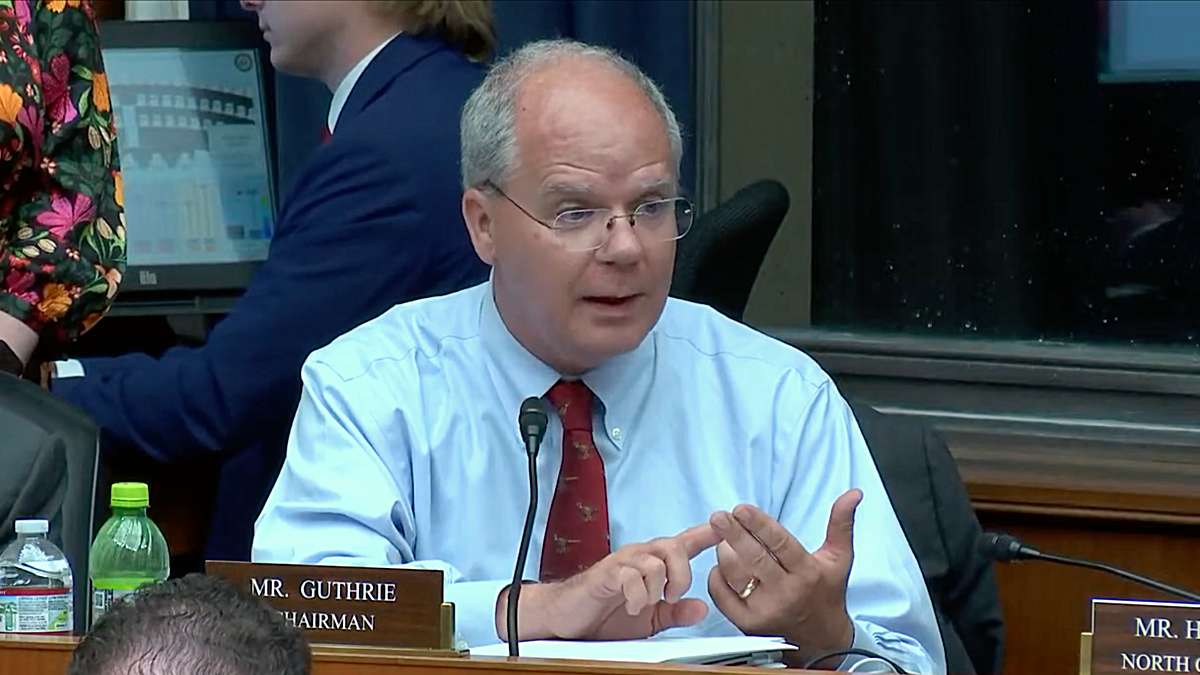

Washington, DC, May 14, 2025 - House Energy and Commerce Committee chairman Rep. Brett Guthrie (R-KY) at a markup hearing on a budget reconciliation bill.

Over the last several years, while the United States Congress has failed to pass meaningful legislation governing AI systems, states have established critical new safeguards to protect creators, promote accountability, safeguard children, and ensure the reliability of AI. Now, some members of Congress are proposing a ten-year moratorium that would prohibit state legislators from protecting the public. It is a short-sighted and ill-conceived plan.

The moratorium provision proposed by the House Energy and Commerce Committee is clearly out of step with public opinion, ignores expert advice and calls to regulate, and disregards the progress made at the state level on AI regulation. The irony is not lost that Congress is seeking to establish guardrails against state regulation when it should be focusing on guardrails for AI.

This proposal, circulating under the banner of "federal preemption," would effectively block state legislatures from enacting or enforcing AI regulations for the next decade. Its proponents argue that only the federal government is capable of managing the complexity and scale of AI regulation, and that a patchwork of state laws will hinder innovation. But in practice, this approach would halt progress where it is most dynamic and responsive—at the state level—just when the risks and harms associated with AI systems are escalating.

A bipartisan group of 40 State Attorneys General opposes the moratorium. The AGs pointed out that Congress has failed to establish necessary guardrails for AI. The state AGs argue: “This bill does not propose any regulatory scheme to replace or supplement the laws enacted or currently under consideration by the states, leaving Americans entirely unprotected from the potential harms of AI.”

The National Conference of State Legislatures also told Congress to oppose the moratorium. Expressing strong opposition to the moratorium provision, the state lawmakers said, “A federally imposed moratorium would not only stifle innovation but potentially leave communities vulnerable in the face of rapidly advancing technologies.”

Moreover, the claim that only federal regulation can ensure a "uniform" approach ignores the complexity of the US legal and political system. Uniformity is not always desirable when different communities face different risks and priorities. A one-size-fits-all federal approach may leave vulnerable groups unprotected or limit innovation in protective governance. Worse, by establishing a moratorium, Congress would not be enacting meaningful regulation—it would simply be freezing action while the tech industry continues to advance at breakneck speed.

It is important to ask: Who benefits from this moratorium? The tech industry, particularly large AI developers and platform companies, has lobbied aggressively for federal preemption. They argue that a patchwork of state laws creates compliance burdens and stifles innovation. But what they often mean is that state laws threaten their business models, especially when those models rely on exploiting data, avoiding liability, or obfuscating accountability. These companies prefer a weak or delayed federal standard to dozens of stringent and proactive state laws.

In other words, the moratorium serves industry interests, not the public interest.

Currently, state AGs are conducting proceedings to investigate firms across the tech industry. In Texas, Attorney General Paxton announced an investigation into DeepSeek in February and, before that, in 2024 reached a settlement in a first-of-its-kind healthcare generative AI investigation. State enforcement stands out in the face of the FTC’s inaction on ChatGPT and OpenAI. We now look to the states to enact and enforce necessary safeguards for AI services. However, the House is seeking to eviscerate the ability of the states to safeguard the public.

It is also worth noting that AI systems increasingly mediate cultural, artistic, and creative expression. Generative AI models can replicate the voices, styles, and likenesses of artists without permission or compensation. States like Tennessee and California have moved to protect creators through right-of-publicity laws adapted for the AI age. A federal moratorium would strip states of the power to defend their artists and cultural economies at a time when those protections are desperately needed.

To be clear, there is a legitimate role for federal legislation in the AI space. National standards can help ensure baseline protections, promote international coordination, and reduce some regulatory uncertainty. But those standards should complement, not override, state efforts. A cooperative federalism model, in which baseline national standards are paired with room for state innovation, would better reflect the urgency and complexity of the moment. Federal regulation can and should play a role in establishing baseline safeguards, promoting transparency, establishing accountability, and coordinating enforcement.

AI governance demands a flexible, responsive, and inclusive approach. That means empowering, not silencing, the state-level policymakers who are often closest to the communities affected by these technologies. It means fostering dialogue between states and the federal government, not erecting barriers to cooperation. And it means recognizing that innovation in regulation is just as important as innovation in technology.

States have historically been the laboratories of democracy. In areas ranging from data privacy to consumer protection, from environmental regulation to public health, states have often acted when the federal government has stalled. AI technologies are not static. They are evolving rapidly, integrating into nearly every facet of society, from hiring and lending decisions to policing and education. As these systems become more powerful, so do the potential harms. States, often more closely attuned to these local realities than Washington, are better positioned to act quickly and thoughtfully. These state actions are not perfect, but they represent a commitment to protecting the public that Congress has failed to match. To preempt these efforts wholesale, especially for a decade, is not only anti-democratic—it is dangerous.

Congress should abandon the idea of a ten-year moratorium on state AI laws. Instead, lawmakers should focus on crafting meaningful, rights-respecting federal legislation that sets a strong floor while preserving the ability of states to lead, experiment, and protect their constituents. The future of AI governance should be built on democratic principles, shared responsibility, and a commitment to the public interest, not a decade-long silence imposed from above.

The challenges of AI are too great, and the consequences too severe, to wait for a perfect federal solution. States have shown they are ready to act. The question now is whether Congress will support their efforts—or stand in their way.
