Researchers Explore the Use of LLMs for Content Moderation

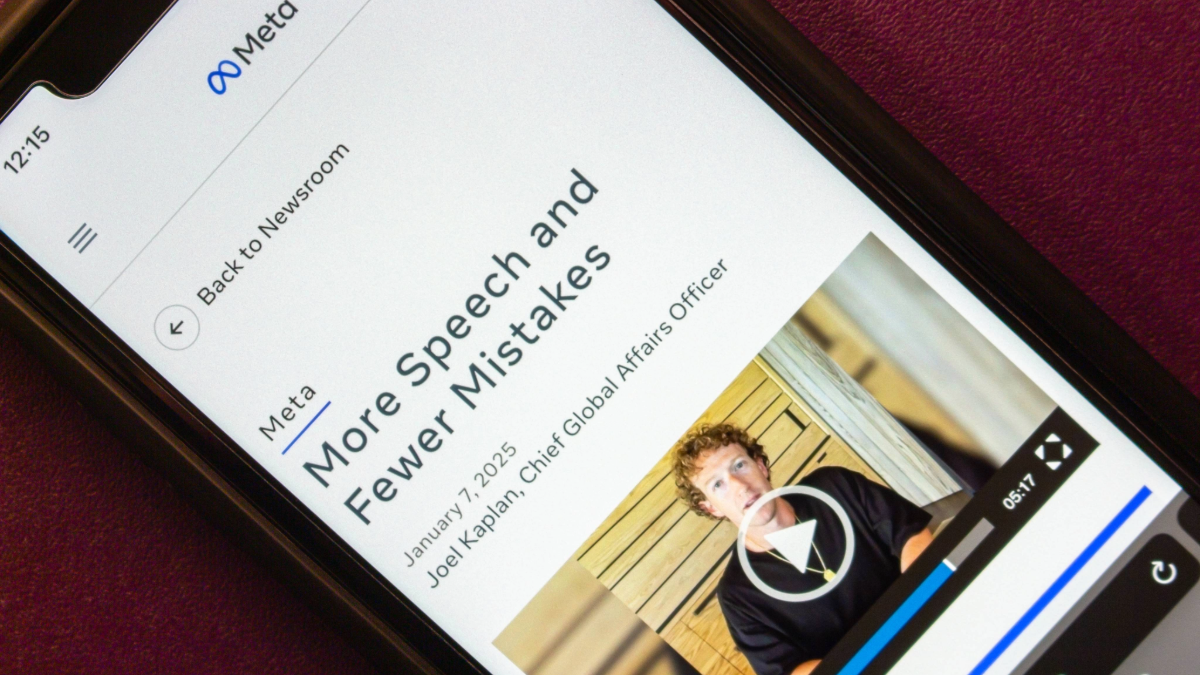

Tim Bernard / Oct 30, 2025

AI in content moderation is far from a recent innovation: machine learning (ML) classifiers have been used for content moderation since around 2016. But since the technological advances of LLMs, platforms are accelerating the transition to AI for content moderation, laying off trust and safety workers and outsourced moderators in favor of automated systems. For instance, changes Meta announced to its content moderation practices in January included deploying “AI large language models (LLMs) to provide a second opinion on some content.” In August, TikTok announced it would lay off moderators in favor of AI systems as part of a reorganization plan intended to strengthen its “global operating model for trust and safety,” according to the Wall Street Journal.

The incentives for industry are straightforward, including cutting costs and reducing reliance on overburdened (and frequently exploited) contract workers, for whom the job of sifting through endless streams of unsavory user-generated content can have severe consequences. But alongside its promise, the use of LLMs for this purpose raises a host of questions, some of which are now the topic of study both in universities and in industry. The subject was a stand-out theme at the Trust and Safety Research Conference, held last month at Stanford.

Attempting to unlock the potential of LLMs for moderating content

The first keynote address at the event was given by Dave Willner, a former Facebook executive who, after serving as OpenAI’s first head of trust and safety, researched the potential for this LLM use-case as a fellow at Stanford’s Cyber Policy Center (now the Stanford Tech Impact and Policy Center, which hosted the conference). Willner, along with his Stanford research partner and former Facebook colleague, Samidh Chakrabarti, subsequently founded a startup to provide LLM solutions to platforms.

As Willner and Chakrabarti discussed in this publication early last year, off-the-shelf LLMs have, along with great potential, serious drawbacks for content moderation. The two have since created a lighter-weight model called COPE, trained on data sets that pair different content policies with the outcomes each policy produces for a set of content examples. This model is cheaper to run, according to Willner, and less influenced by prior conceptions than the leading foundation models. It can be finetuned for specific platform policies, he said, and it enables rapid iteration of policy because tweaks can be tested instantly, rather than propagated over months of retraining traditional ML classifiers or outsourced human moderators. Willner described current policy development practices as “like designing airplanes without a wind tunnel.”

Beyond increased accuracy and agility at scale, Willner suggested that LLMs can ameliorate other persistent problems intrinsic to content moderation, through explaining moderation policies and actions to users and by powering personalizable moderation—as in Bluesky’s composable moderation—or community moderation of the kind practiced on Reddit or Discord. He laid out a research agenda of unanswered questions, including: how well these models can moderate in other languages and across cultural contexts; if other approaches to training can produce even better results; how to cope with the complexity of rulesets that LLM-based moderation can lead to; and what the most effective (and palatable) point of intervention with users might be.

Willner was not the only speaker at the conference presenting improved content moderation systems facilitated by LLMs. A Google engineer described how the company has developed a process for vetting social content for its “Perspectives” carousel that appears in some search results. This feature has obvious risks, considering the prevalence of policy-violative content on social media.

The approach that the company landed on centers on an AI rater that uses a retrieval-augmented generation (RAG) system to iteratively improve the accuracy of the vetting by way of a human-in-the-loop validating outputs and providing ongoing feedback. Mirroring some of Willner and Chakrabarti’s finetuning techniques, specific examples of clear positives and negatives are included with the vetting prompt for each topic. The Google team attempts to mitigate model bias for specific topics by augmenting the golden data set with relevant examples for problematic keywords.
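To make the prompt structure concrete, here is a minimal Python sketch of how labeled examples might be attached to a vetting prompt for one topic. It is an illustration only, not Google's implementation: the `LabeledExample` class, the `build_vetting_prompt` function, the ACCEPT/REJECT labels and the sample texts are all hypothetical, and no model is actually called.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class LabeledExample:
    """One hand-labeled example from a (hypothetical) golden data set."""
    text: str
    acceptable: bool  # True = clear positive, False = clear negative


def build_vetting_prompt(topic: str, policy: str,
                         examples: List[LabeledExample],
                         candidate: str) -> str:
    """Assemble a few-shot prompt: policy, labeled examples, then the candidate."""
    lines = [
        f"Topic: {topic}",
        f"Policy: {policy}",
        "",
        "Labeled examples:",
    ]
    for ex in examples:
        label = "ACCEPT" if ex.acceptable else "REJECT"
        lines.append(f"- [{label}] {ex.text}")
    lines += [
        "",
        "Content to vet:",
        candidate,
        "",
        "Answer with ACCEPT or REJECT.",
    ]
    return "\n".join(lines)


# Hypothetical golden examples for a single topic.
golden = [
    LabeledExample("A firsthand review of a hiking trail.", True),
    LabeledExample("A post selling counterfeit trail passes.", False),
]
prompt = build_vetting_prompt(
    topic="hiking",
    policy="Reject content that promotes fraud.",
    examples=golden,
    candidate="Tips for packing light on day hikes.",
)
print(prompt)
```

In a setup like this, human feedback would arrive as new or corrected entries in the example list, which is one plausible way a human-in-the-loop could improve accuracy without retraining the underlying model.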

Counteracting the technical weaknesses of LLMs as moderators

A number of researchers who presented at the conference are digging into specific weaknesses in using LLMs for moderation. Kristina Gligoric, a professor of computer science at Johns Hopkins University, presented a study that she and her collaborators undertook regarding the ‘use-mention’ distinction (saying “x” versus talking about x). This phenomenon has direct relevance to current platform policies that explicitly prohibit the airing of certain views while permitting counterspeech that typically mentions those prohibited views in order to argue against them.

Gligoric said her team’s testing found that LLMs, including the most up-to-date commercial models, typically fail to differentiate counterspeech from the speech itself in at least 15-20% of cases. Further experimentation by the team demonstrated that one technique for teaching LLMs to differentiate between use and mention (chain-of-thought prompting) substantially brought down this false positive rate, indicating that this challenge for LLM use in moderation is by no means insurmountable.
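As a rough illustration of what chain-of-thought prompting for the use-mention distinction could look like, the Python sketch below asks the model to reason through explicit steps before committing to a label, then extracts that label from the reply. The prompt wording, the `build_cot_prompt` and `parse_label` helpers, the label names and the sample reply are assumptions made for this example; they are not taken from Gligoric's study, and the reply is hand-written rather than produced by a model.

```python
import re
from typing import Optional

# Hypothetical prompt: the numbered steps force the model to separate
# "what is said" from "whether the author endorses it" before labeling.
COT_TEMPLATE = """You are reviewing a post for hate speech.
Think step by step before deciding:
1. Quote any harmful statement that appears in the post.
2. Decide whether the author asserts that statement (use) or
   refers to it in order to report, quote, or argue against it (mention).
3. On the last line, write exactly one of: LABEL: VIOLATION or LABEL: ALLOWED

Post: {post}
"""


def build_cot_prompt(post: str) -> str:
    """Insert the post into the chain-of-thought template."""
    return COT_TEMPLATE.format(post=post)


def parse_label(model_output: str) -> Optional[str]:
    """Return the last LABEL found in the reply, or None if there is none."""
    matches = re.findall(r"LABEL:\s*(VIOLATION|ALLOWED)", model_output)
    return matches[-1] if matches else None


# Hand-written stand-in for a model reply to a counterspeech post.
sample_reply = (
    "1. The post quotes the claim 'group X is inferior'.\n"
    "2. The author cites the claim to argue against it (mention).\n"
    "LABEL: ALLOWED"
)
print(parse_label(sample_reply))
```

Taking the last label in the reply, rather than the first, guards against replies that echo the instructions before giving a verdict.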

Societal resistance to LLM moderation

Even if technical obstacles to using LLMs to moderate content can be counteracted, there remain societal challenges. Matthew Katsaros, Director of the Social Media Governance Initiative at the Justice Collaboratory at Yale Law School, described how people react to being moderated by AI. A number of empirical studies published since 2022 revealed what Katsaros termed the LLM trust deficit: that, broadly speaking, subjects perceived automated moderation (though not necessarily by LLMs) as less trustworthy than human moderation.

Katsaros and his collaborators built on these studies, exploring perceptions of procedural justice for moderation decisions that were either made by LLMs or where a range of LLM-based AI agents were made available to subjects playing the role of users who had a post removed. They concluded that LLMs can be used to build trust when they are used not as moderators (who were again perceived more poorly than humans), but as transparency tools that explain moderation decisions and consult with users to guide them to a better understanding of platform policy and processes.

Adjunct uses of LLMs in content moderation

Katsaros’s team was not alone in using LLMs as a tool in the content moderation process, but not for moderation itself. Ratnakar Pawar, a machine learning engineer at Sony PlayStation, shared how his company approached bias mitigation, in particular for “celebratory identity speech,” i.e., speech that mentions protected groups in a positive context but is scored as ‘likely discriminatory’ by classifiers because they were trained on a data set of previously moderated content where these groups are frequently mentioned in a discriminatory context.

Although the classifiers themselves may have been traditional machine learning models, LLMs played a crucial role in the mitigation efforts: they were used to generate an additive body of training data for the classifiers, seeded with historical moderation data, but more diverse and designed to balance toxic and benign mentions of specific protected groups. Along with careful designation of metrics for each protected group and keeping humans in the loop at all stages, this technique has been successful in reducing false positive rates that impact minority communities without a corresponding increase in false negatives.
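The kind of per-group metric described here can be sketched in a few lines of Python. The example below computes a false positive rate for each group, meaning the share of benign posts mentioning that group that a classifier flagged anyway. The function name, record layout, group names and data are invented for illustration and do not reflect Sony PlayStation's actual metrics or results.

```python
from collections import defaultdict


def false_positive_rate_by_group(records):
    """Compute a false positive rate per group.

    records: iterable of (group, is_toxic, flagged) tuples, where
    is_toxic is the human label and flagged is the classifier output.
    Returns {group: flagged benign posts / all benign posts}.
    Groups with no benign posts are omitted.
    """
    benign = defaultdict(int)
    false_pos = defaultdict(int)
    for group, is_toxic, flagged in records:
        if not is_toxic:
            benign[group] += 1
            if flagged:
                false_pos[group] += 1
    return {g: false_pos[g] / benign[g] for g in benign}


# Invented evaluation records: (group mentioned, human label, classifier flag).
records = [
    ("group_a", False, True),   # benign but flagged: a false positive
    ("group_a", False, False),
    ("group_a", True, True),    # toxic and flagged: not counted here
    ("group_b", False, False),
    ("group_b", False, False),
]
rates = false_positive_rate_by_group(records)
print(rates)  # {'group_a': 0.5, 'group_b': 0.0}
```

Comparing these rates before and after adding synthetic training data, alongside the matching false negative rates, is one way to check that a fix for one group does not come at another's expense.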

Finally, Haiwen Li, a PhD student at MIT’s Institute for Data, Systems, and Society, presented a study on how LLMs can help community notes systems, which label factually suspect content with user-generated fact-checks, scale without introducing unreliability. The process that Li’s team landed on uses an LLM to generate notes and retains an all-human group of volunteers as raters. They developed a custom reinforcement learning process that, by constantly incorporating new notes and ratings, improves the LLM’s ability to generate the “bridging” content (i.e., content ranked highly by raters of different persuasions) that lies at the heart of the community notes system. The team also proposed that the new system should exist as a platform-independent layer, accessible by services across the internet.
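The idea of bridging can be shown with a deliberately simplified Python sketch: a note scores well only if raters in every group tend to find it helpful. This is a toy stand-in, not the scoring method of any real community notes system and not the reward signal used by Li's team; the `bridging_score` function, the group names and the ratings are all hypothetical.

```python
from collections import defaultdict


def bridging_score(ratings):
    """Score one note by its weakest support across rater groups.

    ratings: iterable of (rater_group, helpful) pairs for a single note.
    Returns the lowest per-group share of 'helpful' ratings, or 0.0 if
    fewer than two groups rated the note (nothing to bridge).
    """
    helpful = defaultdict(int)
    total = defaultdict(int)
    for group, is_helpful in ratings:
        total[group] += 1
        helpful[group] += int(is_helpful)
    if len(total) < 2:
        return 0.0
    return min(helpful[g] / total[g] for g in total)


# Invented ratings for two notes from raters in groups "a" and "b".
cross_group = [("a", True), ("a", True), ("b", True), ("b", False)]
one_sided = [("a", True), ("a", True), ("b", False), ("b", False)]

print(bridging_score(cross_group))  # 0.5
print(bridging_score(one_sided))    # 0.0
```

Taking the minimum rather than the average means that enthusiastic support from one group cannot make up for rejection by another, which is the property a reward for LLM-generated notes would need to preserve.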

***

The conference presentations demonstrated that researchers in both academia and industry are finding genuine potential for LLMs not only to replace human moderators and older ML classifiers but also as tools that can make incremental improvements at a range of points across the content moderation matrix. The technology appears to offer pathways toward more accurate, more equitable, and more broadly acceptable content governance, though, as Willner highlighted in his talk, some serious questions remain open. The impacts of introducing LLMs on global and cross-cultural moderation have yet to be measured. As public opinion remains wary of advances in AI, will widespread adoption of LLM moderation deepen the existing trust deficit in content moderation? Are other subtle but critical lacunae like the use-mention distinction yet to be uncovered?

It seems inevitable that platforms will increasingly adopt LLM moderation, but independent researchers, regulators and industry insiders must remain vigilant to ensure that these powerful new tools are deployed for public benefit, and not just for affordable scale.

This piece was updated to correct a characterization of Google's research.
