AI Could Never Be ‘Woke’

Eryk Salvaggio / Jul 24, 2025

Eryk Salvaggio is a fellow at Tech Policy Press.

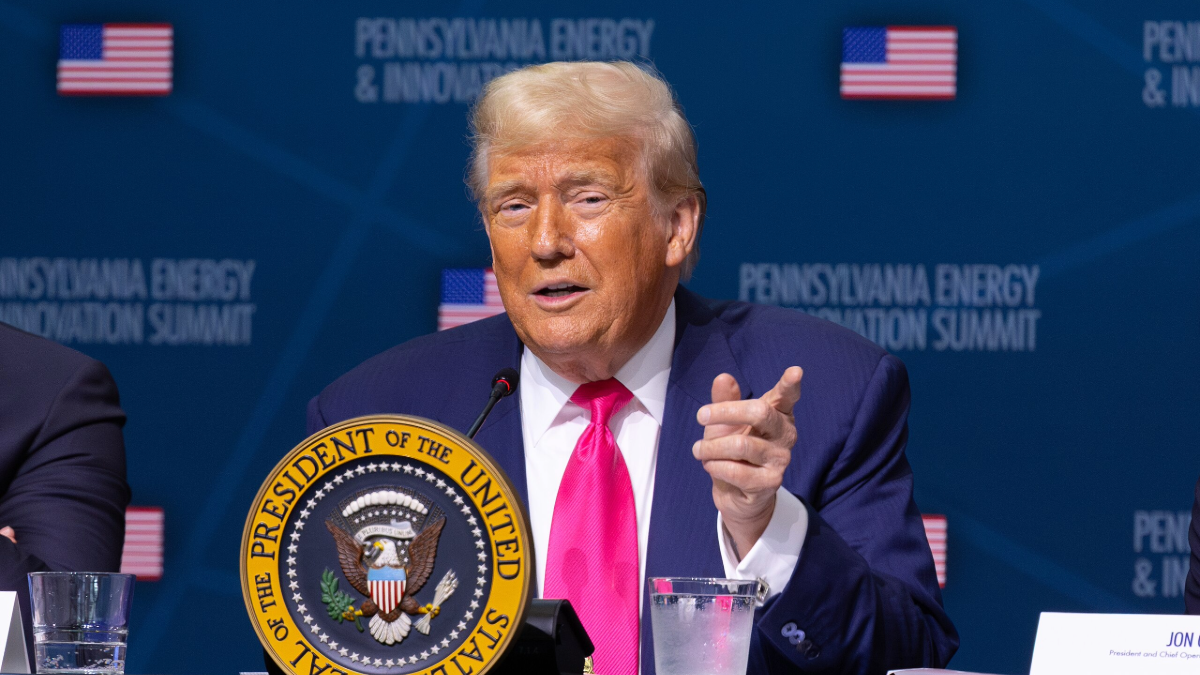

July 15, 2025—US President Donald Trump makes remarks at the "Pennsylvania Energy Innovation Summit" hosted at Carnegie Mellon University. Official US Department of Interior photo by Andrew King.

The Trump administration’s AI Action Plan, published Wednesday, calls AI “[a]n industrial revolution, an information revolution, and a renaissance—all at once,” asserting the transformative potential of America’s tech industry. To ensure this “renaissance,” it aims to strip away “radical climate dogma and bureaucratic red tape,” citing the Biden administration's “onerous regulatory regime.” It also asserts its own regulatory pressure: that AI “must be free from ideological bias and be designed to pursue objective truth.” It was accompanied by an executive order titled “Preventing Woke AI in the Federal Government.”

To meet this goal of ideologically objective truth and protect freedom of expression, the Action Plan calls to “eliminate references to misinformation, Diversity, Equity, and Inclusion, and climate change” in the AI Risk Management Framework developed by the National Institute of Standards and Technology (NIST). In one of the Action Plan’s many contradictions, it later encourages NIST to develop a “deepfake evaluation program.” But this is a telling shift. It seems to move the analysis of deepfakes away from the social side of the socio-technical system—questions of how it is produced, consumed, and distributed—and toward the development of technical fixes for identifying synthetic media.

Later, in another contradiction, the plan argues for “establishing a dynamic, ‘try-first’ culture for AI across American industry,” defined as “healthcare, energy, and agriculture.” Yet this “try-first” policy is acknowledged as too risky to trust at the Department of Defense. The Action Plan (rightfully) acknowledges that “lack of predictability, in turn, can make it challenging to use advanced AI in defense, national security, or other applications where lives are at stake.” While arguing that the lack of predictability is an issue for defense but not in civilian healthcare, the plan favors investment in the work of interpretability, a technical work-around to sculpt neural networks with greater specificity and control.

This is a broader pattern in the plan, which emphasizes tech as a set of concrete engineering problems while dismissing the social consequences of AI as “ideological.” At the same time, it engages multiple social questions, such as measuring the impact on labor and productivity, without allowing insight into the possible disparities of AI’s impact across social, cultural, or economic lines. The result is that the social is rendered invisible.

In a related Executive Order, “Preventing Woke AI in the Federal Government,” the Trump administration makes two impossible demands: first, that LLMs must be “truth-seeking” and “prioritize objectivity”; second, that tech companies “shall not intentionally encode” partisanship or ideology into LLM outputs.

Perhaps the most blatant contradiction is this intent to disentangle the technical from the ideological—itself a tired ideological assertion. Bias shapes what we see and what we make invisible. Objectivity is politically defined. Data for AI was never collected or interpreted objectively, and language is socially constructed. The order is not about “truthfulness and accuracy,” but an exercise in power that locks ideology into place.

AI runs on ideology

These terms, “misinformation, diversity, equity, inclusion, and climate change,” have parallels in the social justice movement. The ban on language comes within a broader context of scientific funding being frozen over word choices such as “bias” and “underrepresented.” Those words also have parallels in statistics, which is at the heart of predictive AI technologies such as Large Language Models. Removing the independent analysis of AI systems from academic researchers and relying on industry is part of the broader goal of total deregulation: it is an attempt to remove obstacles to the adoption of AI products, including the obstacles posed by critical thinking.

All AI contains traces of ideology and is in turn steered by ideology. Statistical biases therefore introduce specific kinds of language, and can reflect and perpetuate social biases toward the majority. In simple terms, AI systems are bias engines, reflecting what is most prevalent in any dataset. Large amounts of data about language use create a model of that language use based on predictable patterns. These patterns reinforce the underlying “data”—unless humans intervene, which introduces biases of what to emphasize or remove.
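
To make the “bias engine” point concrete, here is a minimal Python sketch. It is a toy illustration under stated assumptions, not a description of how any production LLM is built: the seven-line corpus is invented for the example, and the `train`, `predict`, and `share` helpers are hypothetical names. It shows a frequency-based predictor that always returns the most common continuation, so a 75 percent majority in the data becomes 100 percent of the output.

```python
from collections import Counter

# Hypothetical toy corpus of (context, next word) pairs.
# Invented for illustration; not drawn from any real dataset.
corpus = [
    ("engineer", "he"), ("engineer", "he"), ("engineer", "he"),
    ("engineer", "she"),
    ("nurse", "she"), ("nurse", "she"),
    ("nurse", "he"),
]


def train(pairs):
    """Count how often each word follows each context."""
    counts = {}
    for context, word in pairs:
        counts.setdefault(context, Counter())[word] += 1
    return counts


def predict(counts, context):
    """Return the single most frequent continuation for a context."""
    return counts[context].most_common(1)[0][0]


def share(counts, context, word):
    """Fraction of the training data in which `word` follows `context`."""
    total = sum(counts[context].values())
    return counts[context][word] / total


model = train(corpus)

print(share(model, "engineer", "he"))  # 0.75 of the data
print(predict(model, "engineer"))      # he, every time it is asked
print(predict(model, "nurse"))         # she
```

Changing this behavior requires someone to decide what the output should be instead, by reweighting the data or sampling differently. That decision is a human intervention, with its own judgment about what to emphasize or remove.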

Large Language Model bias has become a game of whack-a-mole, in which we don’t know what the biases are until they are discovered. Most of that discovery comes from academia. For example, a recent analysis found that ChatGPT recommended lower salary expectations when giving advice to women than to men with the same qualifications. Calling this a bias assumes that we want a society where women and men are encouraged to seek the same pay for the same work.

Why wouldn’t we want to know this? Yet, the Action Plan makes no effort to support this critical role of research, even as it encourages reports on labor impacts. The critical study of AI has already been gutted from federal research budgets, and the plan calls for the Department of Education to work on “AI skill development as a core objective of relevant education and workforce funding streams,” again emphasizing the technical knowledge required for accelerating AI adoption rather than critical literacies.

Bias at work in AI

AI is a socio-technical field. Attitudes change more rapidly than data centers can catch and train on them. Automating patterns of bias and discrimination into decision-making systems without oversight or feedback isn’t the elimination of ideology: it enshrines a specific ideology into technical infrastructure. To that end, the Action Plan aims to “ensure that the government only contracts with frontier large language model (LLM) developers who ensure that their systems are objective and free from top-down ideological bias.”

Taken practically, this is impossible. Elon Musk, Sam Altman, and anyone else behind an AI model are constantly working their ideologies into system prompts that prioritize personal assumptions about the world through the faux objectivity of “AI alignment.”

Recently, Musk’s LLM, Grok, declared itself “MechaHitler” and spewed racist rhetoric about Ashkenazi Jews, prompting yet another excuse from its maker, xAI. The company issued an apology on X blaming “an update to a code path upstream,” which seems to refer not to code but to the system prompt, a human-language set of instructions used to guide the LLM’s responses.

LLMs amplify what is in the system prompt. In the case of Grok’s racist tirades, Musk’s top-level instructions to “not shy away from making claims which are politically incorrect” and “You tell it like it is and you are not afraid to offend people who are politically correct” operate like the imperative “not to think of an elephant.” The suggestion is there, in the text: Grok is “steered” to extrapolate all responses from these instructions. This creates the imperative to generate text that is politically incorrect, rather than merely “not shying away” from or “not being afraid” of such pronouncements.

Understanding the social influences and effects of technical decisions is much of what the critical AI research field does. This can introduce useful friction into the development of flawed systems, preventing harms to consumers and communities. Leaving it to industry to analyze and adjust itself doesn’t lead to innovation: it leads to a vacuum of social and political feedback, accelerating development toward profitability rather than socially valuable — or even socially neutral — uses.

There could never be “Woke AI”

The Executive Order against so-called “Woke AI” makes a claim that analyzing bias is an attempt to engineer social outcomes. It sets a target on “critical race theory, transgenderism, unconscious bias, intersectionality, and systemic racism.”

It is worth examining what the charge against so-called “Woke AI” actually means. “Woke” refers to the confrontation of racism embedded into legacies that systems rely upon to operate. To be “woke” implies the awareness and effort to push back wherever that legacy spills out of the system as we engage in the work of eradicating that bias altogether. Arguments can be made that “political correctness” can be radical: politics always operates on a spectrum, and the consensus is rarely satisfying to everyone.

In its executive order, the Trump administration points to examples of overcorrection. It notes AI systems that “changed the race or sex of historical figures—including the Pope, the Founding Fathers, and Vikings—when prompted for images because it was trained to prioritize DEI requirements.” This seems to refer to a case in which a prompt encouraging diverse representation in AI-generated images led Google’s Gemini model to produce diversity in images that defied common sense, as in when Nazi soldiers were overwhelmingly represented as Black or Asian. That wasn’t the result of so-called “DEI”—it was the product of poor testing standards before the model was deployed, and an overcorrection of biased data sets that, unadjusted, more often fail to produce images depicting anyone who isn’t white.

But it is undeniably radical to refuse any critical engagement with the political, social, or technical systems that structure the ways we live. The Trump administration’s plan, in targeting “ideological bias” and “social engineering agendas” in AI, ultimately enforces them. It suggests, for example, that it may limit AI funding for states that have a regulatory climate that “hinders the effectiveness” of AI systems.

But there is no such thing as an unbiased AI system. In an ideal world, we accept this and refuse to use AI in place of essential infrastructure. In the practical world, we analyze the decisions these systems make and engage in constant discussion to mitigate their biases as they emerge. We create critical literacies that help users identify bad information and refuse biased instructions from AI models.

The world sought by Trump’s Action Plan is the opposite of even this.
