The OMB Memo Shows That AI Can Be Governed

Janet Haven / Mar 29, 2024

Janet Haven is the executive director of Data & Society, and co-chair of the Rights, Trust and Safety Working Group of the National AI Advisory Committee.

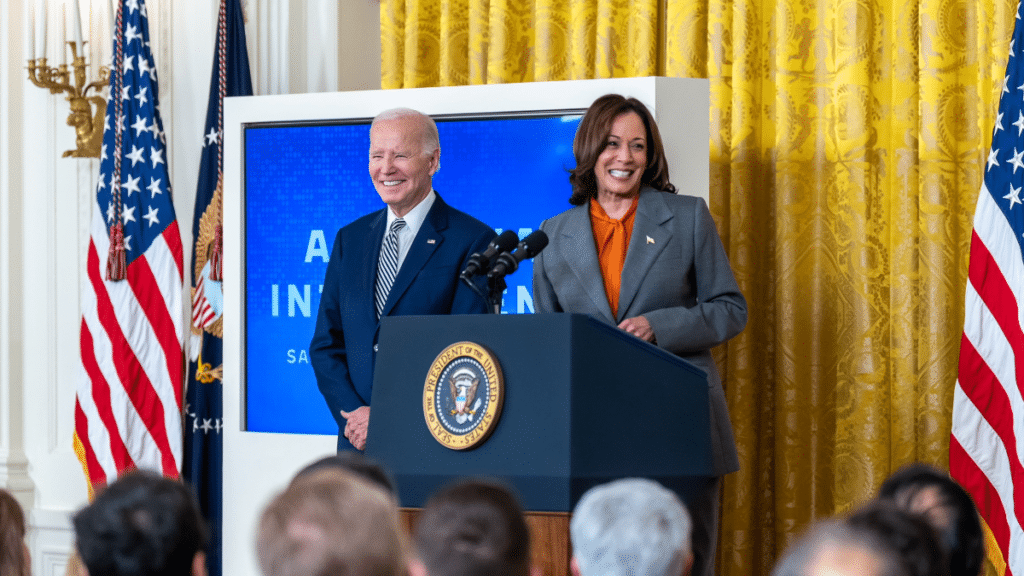

US President Joe Biden and Vice President Kamala Harris at the signing of an executive order on artificial intelligence, October 30, 2023.

Yesterday marked the public release of the US Office of Management and Budget’s long-anticipated “Advancing Governance, Innovation, and Risk Management for Agency Use of Artificial Intelligence” management memo, a significant companion to President Joe Biden’s executive order on AI, released last fall. The memo provides detailed direction to federal agencies on how they can – and cannot – use AI systems to make decisions about citizens and non-citizens alike. Even more than the executive order, the OMB memo details concrete actions that federal agencies are required to take to ensure the safety and protect the rights of Americans. From December 1st of this year, federal agencies must take proactive, pre-release steps to protect against the known harms of AI systems – up to and including not using an AI system at all if the risks to safety and rights are deemed too significant.

Reading the memo, anyone concerned about the impacts of AI on people, communities, and society should feel cautiously optimistic. That’s not because the OMB memo is a perfect document – it’s not, and implementation is a major challenge. Still, optimism is warranted because the OMB memo represents an important stance that other branches of the US government, particularly Congress, should emulate: AI, in the here and now, can be governed. To govern AI means that as a society, we can set rules about how it can be used, in consultation with the people most impacted by those decisions. We can create systems to enforce those rules. And when people or companies or institutions don’t follow those rules, we can hold them accountable. The European Union already made this case for enforceable governance with the recent passage of the EU AI Act. Now, the US has taken meaningful steps in the same direction.

Why is the OMB memo news? In recent years, it's become clear that continuing to default to governance of data-centric technologies like AI via industry self-regulation and voluntary commitments to good behavior fails to ensure even basic protections Americans should expect – data privacy, civil rights, and fundamental accountability to the public. Government must play a more active role in defining and enforcing regulation and law around how we develop and use technology. And for no technology is this clearer than for AI, as earlier White House documents like the Blueprint for an AI Bill of Rights laid out.

The OMB memo’s directives underscore the need for a more robust governance stance, and get specific about what that should look like.

First, the memo says that the government has a responsibility to evaluate and validate decisions to use AI systems that directly impact people, and their rights and safety. And this is appropriate: the commitment by a democratic government to uphold and protect the public interest and individual rights is a binding social contract, unlike even that of the most well-intentioned company.

Second, the OMB memo implicitly argues that “the tech can’t fix the tech.” AI systems are not closed technical systems; rather, they are “sociotechnical” tools with broad societal impacts. Evaluation of their impact, which should inform governance actions, needs to happen both in the context of their use and via methodologies that prioritize consultation with the people and communities likely to be most affected by them.

Third, the memo emphasizes that some uses of AI (which it enumerates) carry a higher risk of rights and safety harms than other uses – and thus require special and sustained attention from government users and regulators.

This is a significant change from the early days of free-for-all technology development – and part of a shift toward AI governance we’ve seen with the AI Executive Order’s directives to agencies, January’s launch of the AI Safety Institute within the Department of Commerce, and the careful, publicly-informed work being done by agencies like the National Telecommunications and Information Administration (NTIA), the Federal Trade Commission’s antitrust division, and the Department of Justice.

Now, with the policy outlined in the OMB memo, we need three critical pieces to come into place.

First, we need to build the infrastructure of governance. Governance is a muscle, and the US has a serious weakness in this area. There are promising starting points. One is the opportunity the OMB memo presents: for the government to effectively govern its own use of AI, leading to a quick and valuable learning curve about how to implement systems of accountability for AI.

Further, the AI Executive Order mandates a Chief AI Officer (CAIO) in every agency, who holds responsibility both for advancing the use of AI in that agency and for implementing the OMB’s policies. That is a significant remit for one person, with broad discretion in interpreting and implementing the OMB’s policy. This discretion in the hands of the CAIO is both an opportunity for shaping a powerful accountability role and perhaps the weakest part of the policy, if left to a CAIO who does not prioritize the rights and safety issues at stake. Selecting and supporting CAIOs with a strong commitment to the task of governance will be critical. Equally critical are the experts within agencies who will implement these protections, who will test and document the evaluation approaches and their outcomes, and who will refer and adjudicate cases in civil rights and privacy divisions. Documenting and refining process and methodology are central to governance development, and ensuring that learning and iteration are taking place across agencies and within operational cohorts is a major part of building this infrastructure. The mandated agency AI Governance Boards could play a major role here.

Second, we need independent monitoring of the memo’s directives, and ongoing research into the efficacy of these approaches and methodologies for accountability. The OMB memo mandates public transparency for a number of critical parts of AI in government: the AI inventory that each agency must regularly produce; the CAIO justification for waiving minimum evaluations on a particular AI system; and information about open systems being used by the government. Transparent information about these new systems, and analysis of their impact, are critical for civil society, academia, and advocates to evaluate the government’s actions as agencies implement the memo’s directives. This is a tremendous opportunity to learn about building and implementing new governance models. Are these processes sufficient to deliver meaningful protections?

Finally, what we need most to advance OMB’s effort to assert governance of AI is, of course, legislation. A core limitation of the OMB memo is its reach – it only governs the actions of federal agencies. While it will impact company behavior through procurement, that is far from the work that binding legislation could do. To date, Congress has convened forums, held hearings, and heard from experts, and it may be putting together a comprehensive legislative response. Recent history tells us that even if Congress does draft one, we’re unlikely to see legislative action in an election year. But legislation that protects Americans’ rights and safety when encountering AI is needed now.

To be sure, the OMB memo does not solve the full scope of AI governance. But it is a meaningful signaling step that should embolden government leaders, particularly in Congress, to see the opportunity that is in front of them: to govern.
