The Real Cost of the UK’s ‘Free AI Training for All’ is Democracy

Elinor Carmi, Tania Duarte, Mark Wong, Susan Oman, Tim Davies / Feb 10, 2026

UK Secretary of State for Science, Innovation and Technology Liz Kendall speaks at the opening of Google's new data center in Waltham Cross, Hertfordshire on September 16, 2025. (James Manning/Press Association via AP Images)

On Wednesday, January 28, the UK public was informed of a new initiative led by Liz Kendall, Secretary of State for Science, Innovation and Technology, offering “Free AI training for all.” This was not a surprise, since the government announced last June that it would dedicate £187 million to AI training and collaborate with Big Tech companies such as NVIDIA, Google and Microsoft to deliver skills programs. The initiative is part of the UK government’s AI Opportunities Action Plan, which says “we need to embrace AI to change lives.”

While developing citizens' skills is important, these collaborations raise questions about the government's close relationships with corporations that are responsible for cultural, societal and environmental harms. The initiative also raises questions around the allocation of public money, and the fact that it is not going to local and community organizations who have been delivering literacy programs for years.

Together with UK-based academics and civil society leaders led by the digital rights organization Connected by Data, we wrote a letter on July 8, 2025, to the UK government, urging it to prioritize AI literacy for all. In the letter, we argue that “Building critical AI literacy for all requires accessible and independent materials, beyond a focus on individual companies and tools, alongside a range of opportunities for different communities to engage critically with learning about AI in context: not just how to adopt and use AI.” Ultimately, the AI upskilling courses that the UK government offers are meant to train people to be better workers and better consumers. Other important aspects of AI literacy, such as learning how to use AI for your own benefit and that of your community, what your rights are, how to negotiate the use of AI in different settings, or whether to use AI at all, seem to be absent. That is why we are writing a follow-up letter to ask the government to focus on scaling and sustaining comprehensive, critical AI literacy programs for all citizens together with local UK-based organizations.

What the ‘AI Skills Hub’ offers

The AI Skills Hub indexes hundreds of AI-related courses on a platform that has already been criticized for its poor design. For what may have cost the UK taxpayer £4.1 million (we don’t know the precise amount as there is no transparency on this), the public received a ‘bookmark’ hub, which reportedly has fake courses, courses that no longer exist, courses that cost thousands of pounds, courses that do not apply in the UK context, and courses with many inaccuracies about the UK legal system (for example, around intellectual property). Beyond the dodgy courses, the hub offers “AI Skills Boost,” which includes the free ‘Foundation’ courses that receive a prime position on the hub to signal that they are the mandatory courses every worker should take. These 14 courses are exclusively from big US organizations, promoting and training on their platforms.

Screenshot provided by the authors.

While the selling point for the initiative was ‘AI skills for all,’ subscription to the hub reveals the focus is on workers, and apparently those from specific industries. The creative industries focus feels particularly contradictory in a UK context which recently saw unprecedented mobilization by artists, journalists and creators against the unfair use of their work by AI companies in what was termed the #MakeItFair campaign. UK workers have a complicated relationship with AI, and the automatic adoption of it without involving workers and unions in the decision-making process goes against what workers themselves appear to want. The government’s announcement indicates the program was developed with input from experts and unions, but does not say which ones, raising questions about which organizations and unions officials actually consulted and whether they were meaningfully engaged.

'AI Skills Hub' screenshot provided by the authors.

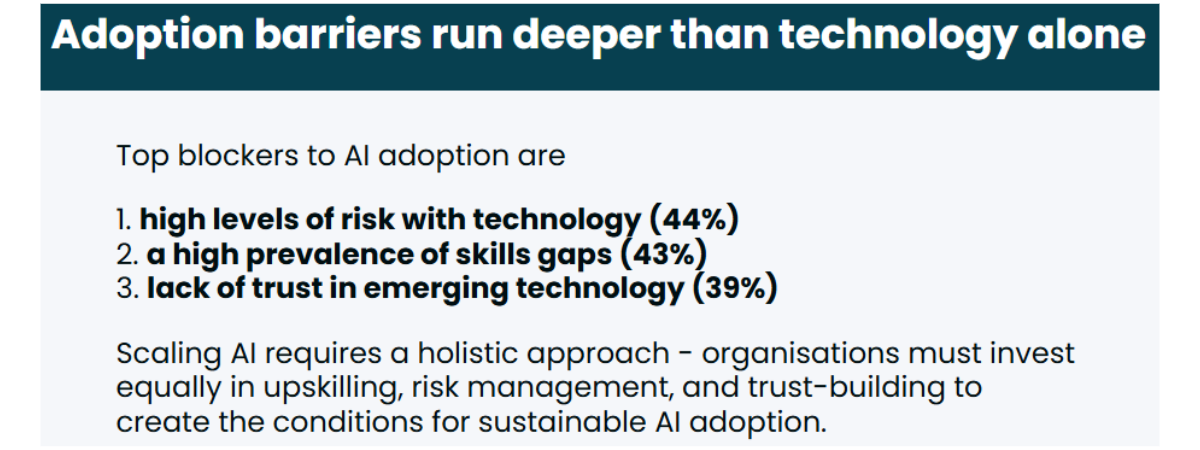

Ironically, in the AI Skills Hub’s ‘research’ section, the government’s research shows how people do not use AI because they mistrust the AI companies (image below). These are the same companies that have been using dark patterns and other deceptive methods, seen or unseen, to persuade people to give up their data in order to exploit their vulnerabilities. So people and workers are right to mistrust AI companies, especially the US Big Tech firms. And yet the UK government seems to be eager to force these companies on workers without promising any regulation, oversight or scrutiny of the companies in return. This echoes findings from a recent survey by the Ada Lovelace Institute which revealed 51% of adults in the UK do not trust Big Tech companies to act in the public interest, while distrust towards the government and social media companies is even higher.

'AI Skills Hub' screenshot provided by the authors.

Commercial ties that are difficult to untangle

While the European Union is starting to distance itself from US Big Tech monopolies with countries moving towards tech sovereignty, the AI Skills Hub is one example of how, conversely, the UK is moving away from sovereignty to greater dependency. Just this week, it was announced that the UK government will turn Barnsley into the ‘first Tech Town’ as part of “Labour’s drive to inject AI into Britain’s bloodstream,” and the medicine seems to be tech solutionism. This experiment, which seems to be a rebranding of the ‘smart city,’ is apparently driven by the government’s thirst for growth at the expense of anything else.

But Big Tech companies have been playing this game for a long time, embedding themselves deeper into society’s infrastructure so that they can ‘lock-in’ people and organizations as customers. Recent US court documents reveal that Google has been trying to get its software into schools so that children become hooked on its products while they are young (a strategy familiar from the tobacco and pharmaceutical industries), in the hope that they will keep using the company's products as adults. As one slide said, “You get that loyalty early, and potentially for life.” But getting people hooked on their products when they are young is not enough, and lately, several universities, including Oxford and Manchester, have signed collaborations with OpenAI and Microsoft.

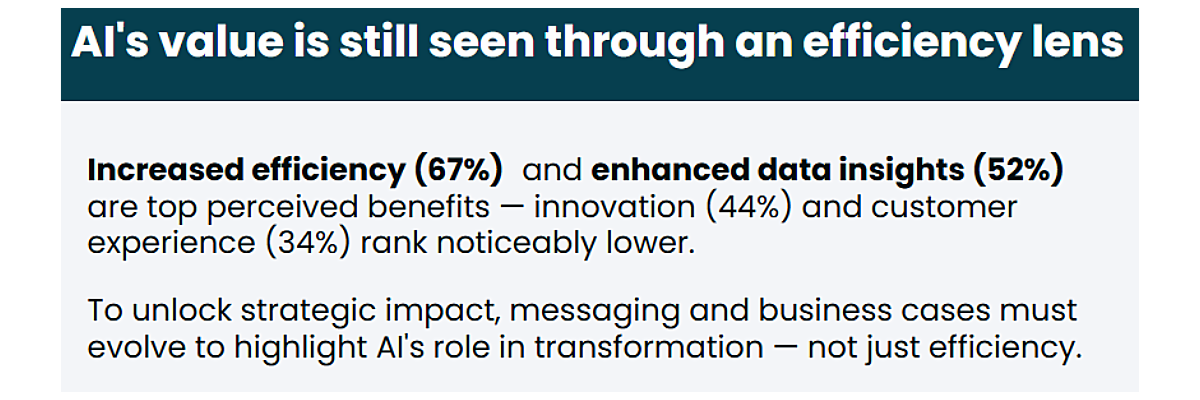

'AI Skills Hub' screenshot provided by the authors.

We know some of today's most hyped AI companies operate on huge losses, and that the AI bubble is predicted to pop. CEOs say that AI does not bring the promised efficiency or profit, despite the research the UK government puts on the AI Skills Hub, as the picture above shows. That is because the costs are often higher than the profits. So just as many tech companies before them have done, AI companies such as OpenAI are pivoting to sell advertisements and possibly adult content. But to make a profit from ads, they need a bigger user base. And that is why we are seeing successful lobbying attempts to infiltrate society’s infrastructures through education, government services, and the workforce, in order to increase subscriptions and sustain the ‘hype’ that makes these companies too big to fail.

One of the key issues is that these collaborations normalize the close relationships with US Big Tech firms, setting them up to be key actors in governance who provide technological solutions to important social ‘problems’ and shape the overall direction of the country. Another example emerged in July 2025, when the UK government signed an agreement with OpenAI to develop AI solutions “to the UK’s hardest problems, including in areas such as justice, defence and security, and education technology [and] to expand public engagement with AI technology.”

But it is important to emphasize that profit-driven companies do not share the same values as democratic government bodies, or even necessarily believe in democracy itself. So procuring Big Tech to deliver education training risks leaving out more critical aspects of education, not to mention that some of these companies have active legal cases against them. How will the UK government’s collaboration with them affect these legal cases when they are essentially becoming 'partners'?

The strong dependency that the government is creating carries significant risks, not only moral but also legal and financial. The market dominance of some of these companies is increasingly fragile. The growing concerns around data privacy and vulnerability, such as ‘AI poisoning,’ and the lack of GDPR compliance for many generative AI tools contradict the government’s blind faith in these technologies and the companies that create them.

Where is the public in this?

The focus on practical skills undermines other national projects that are happening at the moment, including digital inclusion and the media literacy inquiry. Collaborating with, and promoting skills training from, the same companies that are responsible for a lot of the online harms people experience signals to the public that the government prioritizes Big Tech. And unsurprisingly, the UK public feels that this is a suspect relationship. The survey by the Ada Lovelace Institute reveals 84% of the UK public fear that the government will prioritize its partnerships with Big Tech companies over the public interest when regulating AI.

Ultimately, the AI Skills Hub risks training people to be AI boosters using the language of solving AI blockers. It also frames people as either consumers or producers, leaving them no room to be resisters or avoiders, or to consider whether an AI-assisted approach to a task is appropriate, beneficial and without social or environmental impacts. And it certainly doesn’t afford the citizenry access to the decision-making process. This narrows the public’s position in democracy, ensuring that people buy the AI ‘inevitability’ narrative without any option to have a meaningful say.

The failure of the UK government to create a meaningful, fit-for-purpose AI Skills Hub, which genuinely benefits people, is consistent with years of cronyism with US Big Tech companies. The current UK Government continues to live in the shadows while continuing an ‘empire’ mentality in the global AI race. But at what cost? The real harms fall hardest on the people and communities who are the most disempowered and most harmed by uses of AI. The latest ‘AI skills boost’ does not meaningfully enhance AI skills, and it erodes people’s trust in public institutions as they continue to see government and tech companies working together for limited economic gains that are not equitably shared with the wider public. The AI Skills Hub should be re-thought with input from civil society groups and public interest organizations across the UK that have been building AI and related literacies for years.
