The TAKE IT DOWN Act Is US Law. Platforms Must Do More Than The Bare Minimum

Becca Branum / Aug 13, 2025

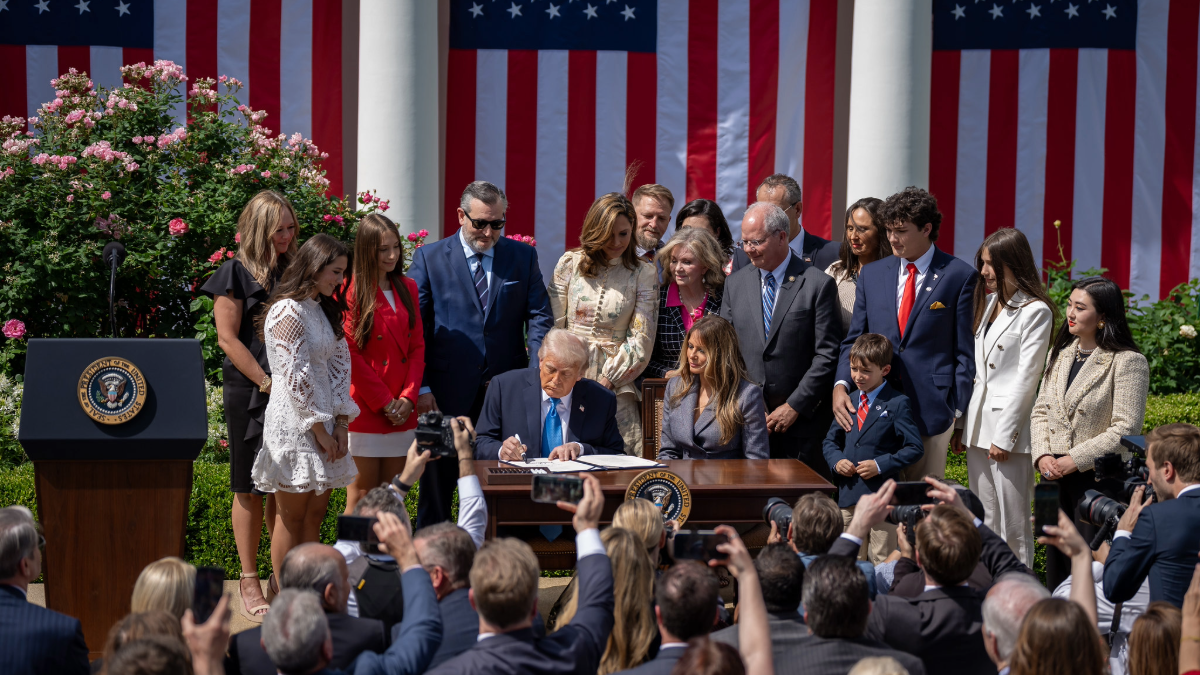

President Donald Trump and First Lady Melania Trump participate in a bill-signing ceremony with lawmakers and advocates for the Take it Down Act, Monday, May 19, 2025, in the White House Rose Garden. (Official White House photo by Carlos Fyfe)

Nonconsensual distribution of intimate imagery (NDII), whether real or AI-generated, is one of the most insidious forms of abuse on the internet today. Ubiquitous access to powerful generative AI tools has exacerbated longstanding and underappreciated risks of image-based sexual abuse, and people from K-12 students to Members of Congress are suffering the harms. Congress responded by passing the TAKE IT DOWN Act, which both criminalizes NDII and requires platforms to remove NDII within 48 hours of a valid complaint. The law’s ambiguous requirements, though, leave open questions that give tech companies wide latitude in deciding what counts as compliance. Companies can choose either to do the minimum required to avoid Federal Trade Commission (FTC) enforcement, or to invest the time and effort needed to protect users and respond meaningfully to image-based sexual abuse.

Responsible platforms must choose the latter. For the TAKE IT DOWN Act’s requirements to be meaningful, victims must be able to navigate the process for reporting NDII quickly and effectively. If tech companies are serious about addressing NDII, and not just about avoiding legal liability, they must go beyond check-the-box compliance. This means designing reporting processes that respond to victims’ needs and experiences.

As a recent CDT report reveals, platform reporting processes can fail victims by being opaque, inconsistent, and difficult to use. Platforms frequently bury NDII policies within confusing terms of service or other lengthy documents written with compliance in mind, rather than effective communication or trauma-informed practices. The result? Victims may not know their rights or how to request the removal of their images. Some sites also require users to have an account to report NDII, meaning victims in acute distress may need to navigate not just the reporting tools but also the process of creating an account. Where age verification is in place, creating an account could mean handing over sensitive personal information. Such requirements add insult to injury for victims and slow down the removal process.

Platforms should begin by writing NDII policies in plain, consistent, accessible language, clearly stating that both real and AI-generated intimate images shared without consent are prohibited. This clarity matters: AI-generated NDII has exploded in scale, particularly targeting women, LGBTQ+ individuals, and public figures. Policies must catch up to this reality, reflect it in practice, and state this prohibition plainly in all relevant documentation.

Companies should also provide users with multiple, accessible options to report abuse. No one should have to fumble through account creation just to request the removal of NDII. And while placing a report button next to content is an industry best practice for NDII, victims shouldn’t be required to interact directly with NDII to request its removal, and they must be confident that their privacy will be protected throughout the process.

In addition to takedown tools, platforms should offer victims support. That includes connecting users to helplines, legal aid, and tools that may assist in preventing future abuse. A link to professional resources right after submitting a report could make a profound difference to someone in crisis.

Doing more than the bare minimum also means implementing the TAKE IT DOWN Act with the rights of all users in mind. Companies must create enforcement processes that remove NDII while preserving consensual and lawful content. Notice-and-takedown systems are prone to abusive takedown requests, which risk not only the wrongful censorship of lawful and consensual sexual expression but also undermining the TAKE IT DOWN Act’s viability as an effective and trustworthy response to image-based sexual abuse. To ensure accountability to both victims and the public, companies should be more transparent about how they respond to complaints and should use the data they collect to improve the accuracy of their responses to removal requests.

The path forward is clear. Platforms must build NDII reporting systems that are intuitive, empathetic, and effective — not just because the law now compels them to act, but because they owe it to the people they serve. Compliance is the floor. Dignity, efficacy, and accountability are the ultimate goals.
