The World’s Growing Information Black Box: Inequity in Platform Research

Rachelle Faust, Daniel Arnaudo / Nov 7, 2025

This piece is part of “Seeing the Digital Sphere: The Case for Public Platform Data” in collaboration with the Knight-Georgetown Institute. Read more about the series here.

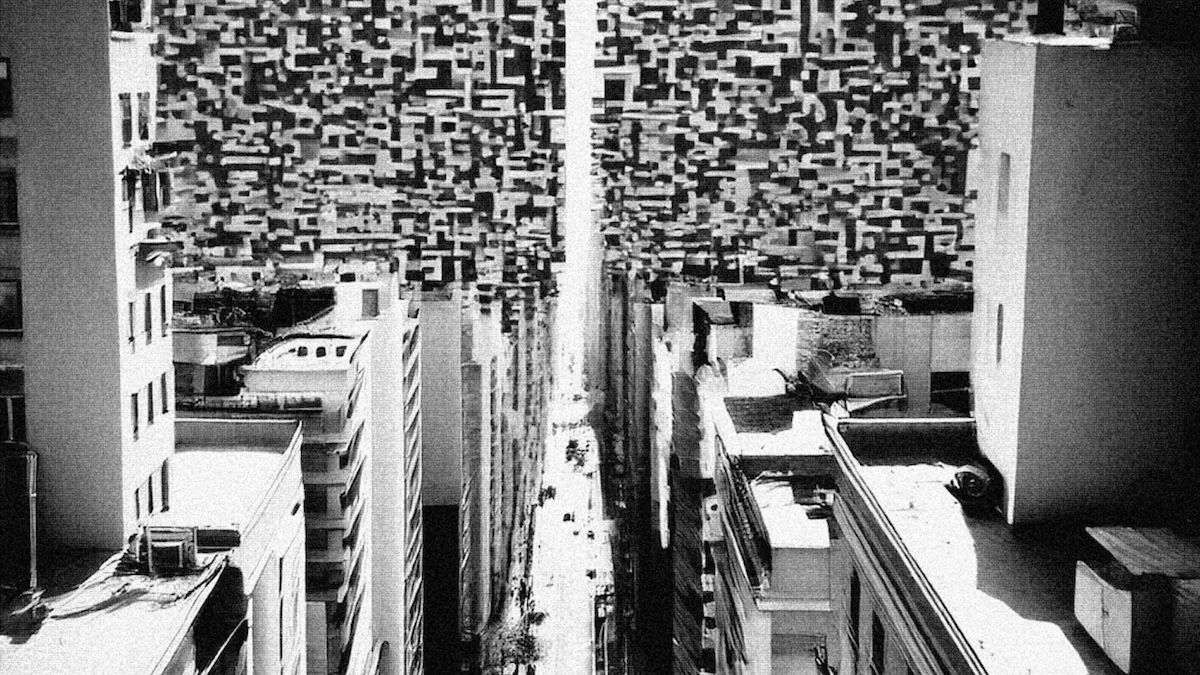

Elise Racine & The Bigger Picture, Glitch Binary Abyss II, Licensed by CC-BY 4.0

In an age of automated agents and increasingly powerful and complex technologies, it’s becoming nearly impossible to study the internet. The golden era of independent public interest research on platforms, marked by free data access and expansive partnerships between platforms and researchers, has come and gone. No longer can fact-checkers use a proven research tool like CrowdTangle to track real-time narratives on Facebook during local elections, nor can researchers use Twitter’s application programming interface (API) to identify patterns in tech-facilitated gender-based violence at no cost.

Even during this “golden era” of platform data access, there was a comparative data drought in Global Majority countries. The barriers to access erected in recent years have been felt widely, particularly outside the U.S. and Europe, but perhaps no one has felt them more acutely than the frontline defenders of information integrity.

Who are these frontline defenders? They’re the fact-checkers working to debunk falsehoods before malign actors influence a critical election result. They’re the human rights activists investigating rising online attacks against women politicians. They’re the civil society researchers untangling sprawling influence campaigns during a public health crisis.

More often than not, they’re working with a budget too small to afford the exorbitant price of meaningful data access through X’s API. Or they lack the right connections or standing to navigate the cumbersome application process for the Meta Content Library (MCL). Unlike its predecessor, CrowdTangle, the MCL requires applicants to submit a formal proposal detailing a predetermined research question and to secure approval from an Institutional Review Board, something universities have but most civil society groups do not. The countries where these frontline defenders operate also lack regulatory mechanisms like the European Union’s Digital Services Act, which allows vetted researchers to petition for access to non-public platform data to study systemic risks, such as illegal content and harms to public discourse.

This regulatory gap, combined with financial and technical barriers, creates a profoundly unequal playing field. The current state of data access worsens this inequity, making it even more difficult to conduct research in diverse contexts and languages where it is needed most.

Evidence from the frontlines

During our time on the National Democratic Institute’s Democracy and Technology team, we conducted a survey of members of a global network of frontline defenders. The unpublished survey received a total of 28 responses, representing every region of the world. Among other questions about how recent changes to platforms’ policies and operations have affected their understanding of local information environments, we asked how their access to platform data has changed and whether this impacted their ability to do their work.

Seventy-five percent of respondents indicated it has become more difficult to conduct public interest research on digital platforms, due in large part to platforms discontinuing direct research tools or imposing high fees for them. When asked which platforms had implemented changes that negatively impacted their work, over 85 percent of respondents cited X, 75 percent cited Facebook, and over 50 percent cited Instagram. Fewer respondents highlighted TikTok, WhatsApp, and Telegram; smaller platforms are generally less studied and often have fewer research resources available to begin with.

A respondent representing a civic tech organization in Iraq noted that ten of their employees previously had comprehensive access to real-time data through CrowdTangle. In contrast, with the new access requirements for the MCL, only one of those employees was approved for access, significantly constraining the organization’s ability to identify and address online threats.

Restrictions on data access are not the only barrier to research. Meta has also scaled back its “trusted partners” program, created to help Meta understand the impact of its platforms in diverse communities around the globe and to help keep users safe, by eliminating its third-party fact-checking program in the United States and redirecting resources to other company priorities. Furthermore, the company has been shown to prioritize reports from trusted partners in well-resourced countries and languages, while partners elsewhere, where fewer resources have been invested, experience drastically different response times and resolution rates.

Another survey respondent, from Taiwan, expressed frustration with how the MCL can be used in practice. The respondent was unable to connect the research infrastructure they had invested significant time and resources to build with the Meta Content Library’s clean room environment. Meta’s new system is far more limited, offering fewer tools to help civil society organizations with tight budgets and small staffs analyze key metrics such as reach and engagement and adapt the data to their workflows.

Platforms’ practices deepen the data divide

We worked for years training and equipping people all over the world to adapt and apply tools like CrowdTangle in their local contexts, and we can testify that these changes have made the already huge challenge of understanding local information environments far harder to surmount for all kinds of organizations.

Even if they’re harder to study, online harms haven’t disappeared, and neither have platforms’ efforts to address them. TikTok was allegedly used for foreign interference during Romania’s 2024 presidential election, which led to a formal investigation by the European Commission. In response, the platform established a dedicated Election Center to connect users to reliable information. Yet TikTok restricts access to its research API to “qualifying researchers” in the United States and Europe. As a result, researchers working to detect similar foreign interference in elections that affect 95 percent of the world’s population cannot use TikTok’s tool.

This reflects a common bias among platforms: Resources, content, support, and access are generally offered in English and principally to users in Europe and North America. Exemplifying this challenge, one survey respondent with more than ten years of experience studying the misuse of digital platforms by bad actors during elections in Uganda lamented the tradeoff of directing grant money toward data access fees and away from actual research and analysis.

Why aren’t platforms acknowledging the vital role of independent researchers in identifying these malicious threats and giving them the access to platform data they need to do so? User privacy is a legitimate concern, but researchers don’t need unfettered access to personal data to draw useful conclusions about content, trends and coordinated networks. And while platforms increasingly restrict access to data for public interest research, they continue to collect and monetize ever larger quantities of user data for advertising and the training of artificial intelligence systems.

Ultimately, platforms have moved away from independent data access because they view it as a cost center and transparency as a risk, with little upside to sharing information they consider potentially damaging. Cases such as the 2017 attacks on Rohingya people in Myanmar, where posts on Facebook contributed to the violence, spurred criticism and prompted greater data transparency efforts to show that platforms were responsive and open. But many in platform leadership have come to see these efforts as misguided and as a challenge to their ability to define their brands and shape how they are perceived publicly.

A new framework for data access

The Knight-Georgetown Institute’s new framework for accessing high-influence public platform data is a critical step toward reclaiming the public square. It sets out recommendations to lower barriers to accessing platform data while minimizing privacy risks. The framework was designed to provide a baseline for researchers in all their diversity, grounded in the understanding that fact-checkers, human rights activists, and civic tech groups often have research questions and objectives, such as monitoring elections or conducting ongoing observation, that differ significantly from those of academic researchers.

Academic inquiry, while vital, often focuses on long-term, large-scale studies of platform architecture or information ecosystems. Civil society, however, needs real-time, granular data access to address immediate, localized harms. For example, an academic might study the evolution of a platform's general content moderation policy over a year. In contrast, a fact-checker might need to track a coordinated, real-time disinformation campaign during a local election lasting weeks or months. A human rights group might need to quickly identify a surge in targeted online abuse against a minority ethnic group, or to document video footage of atrocities before it is deleted. These researchers operate with an urgency and a focus on micro-level, high-stakes contexts that are often overlooked in conversations driven by university-based academics in the U.S. and Europe.

All too often, these conversations center on platforms largely developed in and operating from the U.S. They largely ignore voices from the rest of the world, even though those communities are just as affected by the content shared and disseminated on platforms. We drew on the findings from the aforementioned survey, along with years of conversations with frontline actors, to help shape the framework so that it would resonate with groups working in less widely spoken languages, with limited budgets, and with different levels of technical capability. This meant, among other things, advocating for free access to high-influence public data to relieve the financial burden on civil society organizations.

We also grappled with how to structure the framework’s absolute and relative thresholds for “high-influence.” In defining “highly disseminated content,” for example, the framework includes both an absolute threshold of 10,000 total unique views, listens, or downloads, as well as content that is in the top 2 percent of weekly views in a particular information environment (for example, linguistic or geographic).

This dual approach is meant to ensure that the framework applies equally in Germany or Brazil as in a small country or a diaspora community spread across dozens of countries. Ultimately, we acknowledge that the thresholds we landed on likely won’t capture all relevant content for every community, which is why the framework should be considered a baseline. It’s a necessary foundation upon which platforms, regulators, and research consortia must build dynamically going forward.
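To make the pairing of absolute and relative thresholds concrete, the "highly disseminated content" test can be sketched in a few lines of Python. This is a minimal illustration, not the framework's specification. It assumes that content qualifies if it meets either threshold, and that "top 2 percent of weekly views" means the highest-ranked 2 percent of items by weekly views within each information environment, rounded up so that even a small environment has at least one qualifying item. The `ContentItem` record and the `highly_disseminated` function are hypothetical names introduced only for this example.

```python
import math
from dataclasses import dataclass

# Threshold values follow the framework text; how they combine
# (meeting either one suffices) is an assumption of this sketch.
ABSOLUTE_THRESHOLD = 10_000  # total unique views, listens, or downloads
RELATIVE_PERCENT = 2         # top 2 percent of weekly views per environment


@dataclass
class ContentItem:
    # Hypothetical record; field names are illustrative only.
    item_id: str
    environment: str         # e.g. a language or a country
    total_unique_views: int
    weekly_views: int


def highly_disseminated(items: list[ContentItem]) -> set[str]:
    """Return IDs of items meeting the absolute OR the relative threshold."""
    # Absolute test: a fixed view count, regardless of where content circulates.
    flagged = {i.item_id for i in items if i.total_unique_views >= ABSOLUTE_THRESHOLD}

    # Relative test: group items by information environment first.
    by_env: dict[str, list[ContentItem]] = {}
    for item in items:
        by_env.setdefault(item.environment, []).append(item)

    for env_items in by_env.values():
        # Size of the top 2 percent, rounded up so every environment
        # contributes at least one item (an assumption of this sketch).
        k = max(1, math.ceil(len(env_items) * RELATIVE_PERCENT / 100))
        # Rank by weekly views, highest first; ties at the cutoff keep input order.
        ranked = sorted(env_items, key=lambda i: i.weekly_views, reverse=True)
        flagged.update(i.item_id for i in ranked[:k])

    return flagged


# Usage: a small language community where nothing reaches 10,000 views,
# alongside a larger environment with one item over the absolute threshold.
small = [ContentItem(f"s{n}", "small-language", n, n) for n in range(1, 51)]
big = [
    ContentItem("b1", "large-country", 20_000, 100),
    ContentItem("b2", "large-country", 5_000, 500),
]
print(sorted(highly_disseminated(small + big)))  # ['b1', 'b2', 's50']
```

In this toy data, no item in the small community would pass the 10,000-view test on its own, yet the relative test still surfaces its most-viewed item. That is the inequity the dual threshold is meant to address.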

Future efforts to implement and expand upon this framework should continue to include the perspectives of researchers from different countries and sectors, with varying goals and levels of technical expertise. Transparency is a foundational element of democratic vibrancy. When so much of our daily lives and public debate takes place online, enabling privacy-respecting public interest research through access to high-influence platform data is a critical step towards ensuring transparency and accountability. Digital platforms are a central component of almost everyone's lives and dictate how we view the world.

It is more essential than ever that we understand how these platforms operate, through access to robust, meaningful data about all contexts, especially those that are most often home to conflict and other threats. We urge platforms and others working with them to strive for greater transparency to prevent online harms and promote open, rights-respecting data access systems going forward.

The views expressed above belong solely to the authors and do not reflect the position or opinions of their current or former employers.
