Three Reasons the White House AI Commitments Are a Game Changer

Brandie Nonnecke / Jul 31, 2023

Brandie Nonnecke, PhD, is Director of the CITRIS Policy Lab & Associate Research Professor at the Goldman School of Public Policy at UC Berkeley.

It’s easy to criticize the White House AI Commitments as too weak, too vague, and too late. The EU will soon pass comprehensive AI legislation that will have global ramifications, placing legal requirements on AI developers to build safe, secure, and trustworthy AI—the very goals the White House seeks to achieve.

But in the United States, where AI-related legislative and regulatory efforts are often impeded by party politics and where laissez-faire capitalism has a stronghold, these voluntary commitments may well be the strongest possible steps to hold AI companies accountable. Here are three reasons why.

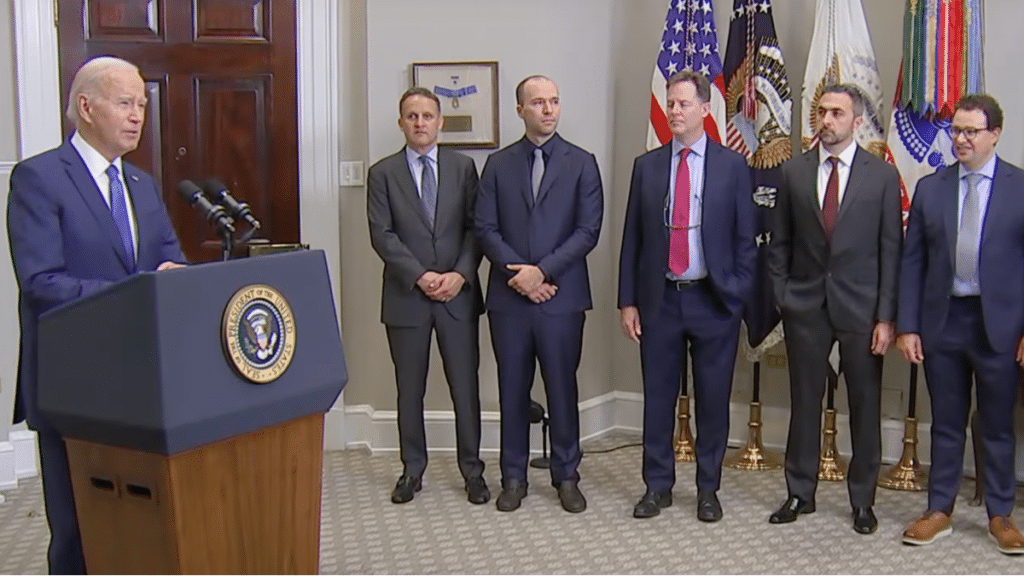

First, the Federal Trade Commission (FTC) has the capacity to hold AI companies accountable for their misdeeds, and the White House AI commitments support this authority. The FTC Act authorizes the FTC to protect consumers from deceptive and unfair practices. The seven companies that joined the White House announcement (Amazon, Anthropic, Google, Inflection, Meta, Microsoft, and OpenAI) have committed to conduct rigorous internal and external security testing of their AI systems and to publicly report system capabilities, limitations, domains of appropriate and inappropriate use, safety evaluations, and implications for societal risks such as fairness and bias. These processes will generate extensive documentation of their procedures and findings, providing critical evidence that can support the agency's oversight.

Second, the commitments will support greater transparency and accountability of AI systems by enabling auditing and research activities. These commitments couldn’t have come at a more critical time. During the big tech layoffs in recent months, trust and safety personnel were first on the chopping block, internal and external research activities dwindled under funding restrictions or were halted altogether, and third-party access to APIs for meaningful research purposes suffered under newly implemented pricing schemes and query limits.

The AI companies convened by the White House have pledged to prioritize research on societal risks posed by AI systems and to enable third-party discovery and reporting of issues and vulnerabilities. To do so, they have committed to investing in tools and APIs that give auditors and researchers access to data and models, and in bounty systems, contests, or prizes that incentivize third-party discovery and reporting of vulnerabilities. While these voluntary commitments may seem inconsequential, they avoid the legal challenges that mandatory requirements would likely face.

Compelling AI companies to provide data and information to third parties may not pass legal scrutiny. In Washington Post v. McManus, a court struck down a Maryland law that compelled online platforms to make data and information publicly available on the political ads they hosted. The court determined the law violated the platforms’ First Amendment rights because it compelled their speech. A law compelling AI companies to make data and models publicly available could face similar First Amendment scrutiny, not to mention legitimate concern over the protection of proprietary assets. AI companies’ voluntary commitments to share data and open up their AI systems to third-party scrutiny are more likely to lead to meaningful transparency and accountability now than mandates that would likely get tied up in lengthy legal proceedings.

Third, the commitments incentivize information sharing that can support and speed up AI safety efforts. The AI sector is fiercely competitive and secretive, yet these companies often have shared risks and vulnerabilities. AI risks, from privacy concerns and bias to security vulnerabilities, misuse, and abuse, are too significant for AI companies to identify and manage in isolation. Sharing information among companies, governments, academia, civil society, and the public will help the AI safety field progress at a much faster pace. And AI companies know this. Four of the seven companies that signed on to the White House AI Commitments have already banded together to form an industry forum to support AI safety research for frontier AI models.

Even as I applaud these commitments, I’m not naive. The companies have significant financial incentives to develop evaluations and transparency reports that present their AI systems and oversight processes in a favorable light. Yet while these voluntary measures may seem insufficient to some or disingenuous to others, we must recognize their significance in a landscape often obstructed by political impasses and staunch market forces. The White House AI commitments are a game-changer, a call to action for responsible AI development that transcends political divides and industry rivalries. By rallying companies together under the banner of shared responsibility, the White House AI commitments are helping to forge a path toward safe, secure, and trustworthy AI.
