White House AI Executive Order Takes On Complexity of Content Integrity Issues

Renée DiResta, Dave Willner / Nov 1, 2023

Renée DiResta is the technical research manager at Stanford Internet Observatory. Dave Willner is a Non-Resident Fellow in the Program on Governance of Emerging Technologies at Stanford Cyber Policy Center.

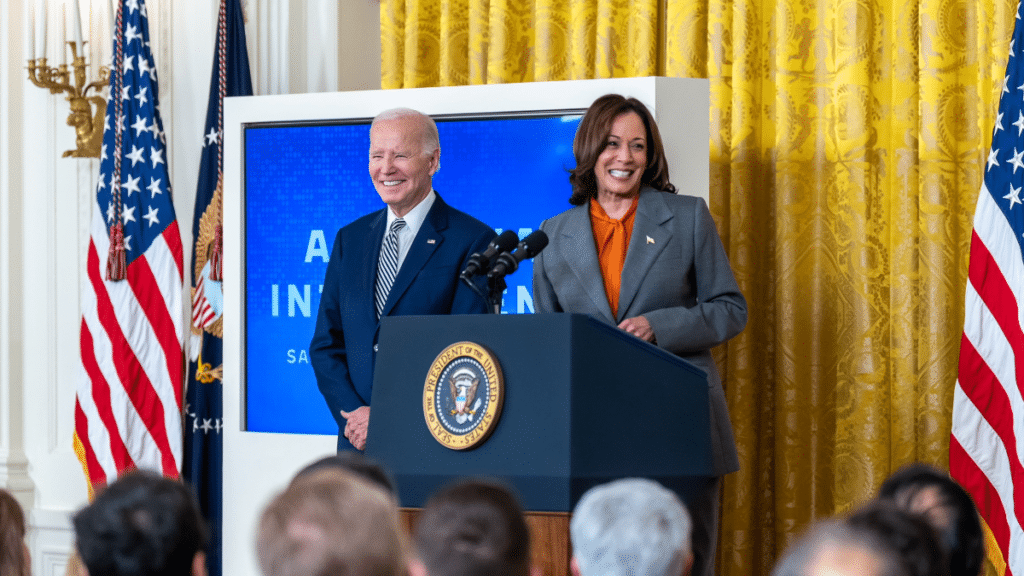

On the morning of October 30th, the Biden White House released its long-awaited Executive Order on Safe, Secure, and Trustworthy Artificial Intelligence. There are many promising aspects of the document and the initiatives it outlines. But on the subject of content integrity – including issues related to provenance, authenticity, synthetic media detection and labeling – the order overemphasizes technical solutions.

Background

The launch and widespread adoption of OpenAI's ChatGPT and text-to-image tools in late 2022 captivated the public, making hundreds of millions of people worldwide aware of both how useful – and how potentially destabilizing – generative AI technology can be. The shift is profound: just as social media democratized content distribution, shifting the gatekeepers and expanding participation in the public conversation, generative AI has democratized synthetic content creation at scale.

The White House’s Executive Order raises and addresses many of the most prominent concerns that the American public has about artificial intelligence, including the potential negative impacts on national security, privacy, employment, and information manipulation. But it also highlights the importance of remaining competitive, and the opportunities associated with leveraging a powerful new technology for the benefit of society.

The guidance in the Executive Order, it’s important to note, is primarily within the context of leveraging existing authorities: while Congress continues to consider an array of AI-related bills, continued partisan gridlock means that there is little hope of any regulation being passed in the next year.

The Order directs specific agencies to prioritize the development of distinct capacities of generative AI related to their missions, both toward advancing research into generative AI and related AI safety efforts and toward the ethical adoption and use of such technologies by the federal government itself. For example, it acknowledges the potential impact on privacy, particularly with regard to training data, and tasks the National Science Foundation with promoting adoption of privacy-preserving technologies by federal agencies. It addresses consumer protection – a critical area of concern, particularly given the increase in scams that leverage voice and text generation technology to manipulate people. It notes the potential impact on jobs, but also the critical necessity of the United States remaining at the forefront of AI research and advancement.

The Executive Order focuses on the significant national security implications of the technology, in terms of how the United States military and intelligence community will use AI safely and effectively both in their own missions and to counter adversaries. It also delves into concerns around content integrity.

Tackling content integrity and trust

It is on this subject that the Executive Order, while a significant step forward, is missing some important considerations. Both of us are researchers at the Stanford Cyber Policy Center. One of us studies how generative AI is likely to transform child safety and information integrity. The other recently left his position as Head of Trust and Safety at OpenAI, and now studies risks and harms associated with large language models and text-to-image diffusion models. We are therefore both interested in the technological feasibility of some aspects of the Executive Order, particularly watermarking and detection, which the Order calls for in the context of reducing the risks posed by synthetic content.

We are both strong proponents of watermarking and other efforts at content authentication, and believe that the Executive Order’s focus on this adds a government imprimatur to what has largely been an industry effort at establishing best practices, emphasizing its importance. But the administration's approach fails to reckon with the fact that many of the harms of generative AI come from the open source model community, particularly where child sexual abuse materials and non-consensual intimate imagery are concerned. And it does not acknowledge that watermarking of generated content may not be adopted universally, and will not be adopted by bad actors using open source models.

Since social media platforms will be primary users of watermarking signals as they decide what to surface (particularly during breaking events), over-reliance on technological solutions for watermarking generative content risks creating systems that miss non-watermarked but nonetheless AI-generated content, creating a false perception that it is legitimate. Additionally, there are increasingly complex attempts to assign provenance to authentic content at the time of its creation. Here too we will find ourselves in an intermediate world where older devices and many platforms and publishers do not participate. The challenges of ascertaining what is real from among this combination of generative-and-watermarked, generative-and-not-watermarked, real-and-certified, and real-and-not-certified will potentially be both very confusing and deeply corrosive to public trust.

We have already seen instances of this confusion in the Israel-Hamas conflict, in which authentic images of atrocities were dismissed as fakes because they fooled an AI image detector. That this confusion is unfolding in the most preliminary stage of the above complexity only further underlines the risk. The Order does note that the federal government will issue guidance to agencies for labeling and authenticating its own digital content to build public trust. These are admirable and important efforts, but a focus on technological solutionism to the exclusion of other efforts will be insufficient to solve this problem.

The most important line of defense: an educated public

Generative AI is enabling a broad societal shift, where trust in what we see and hear is not as simple as it was even a few months ago. The technology will continue to advance rapidly. This speaks to one final area that is missing from the Executive Order: education. While the public is aware of the existence of the technology, navigating the changing dynamics of trust in media and sources of information requires educating the public about how to determine whether content is authentic (to the greatest extent possible), and how to think critically about the potential that it may not be.

We are almost certainly looking at a future with a permanently transformed relationship between veristic media and truth. Yes, we need to put up as many technical defenses as we can. But the most important defense will be an educated citizenry trained in critical thinking that is reflexively skeptical of claims that are too outlandish, or too in line with their own biases and hopes.

Renée DiResta serves on the board of Tech Policy Press.
