White House Executive Order on AI Gives Sweeping Mandate to DHS

Justin Hendrix / Nov 1, 2023

Justin Hendrix is CEO and Editor of Tech Policy Press. Views expressed here are his own.

Co-published with Just Security.

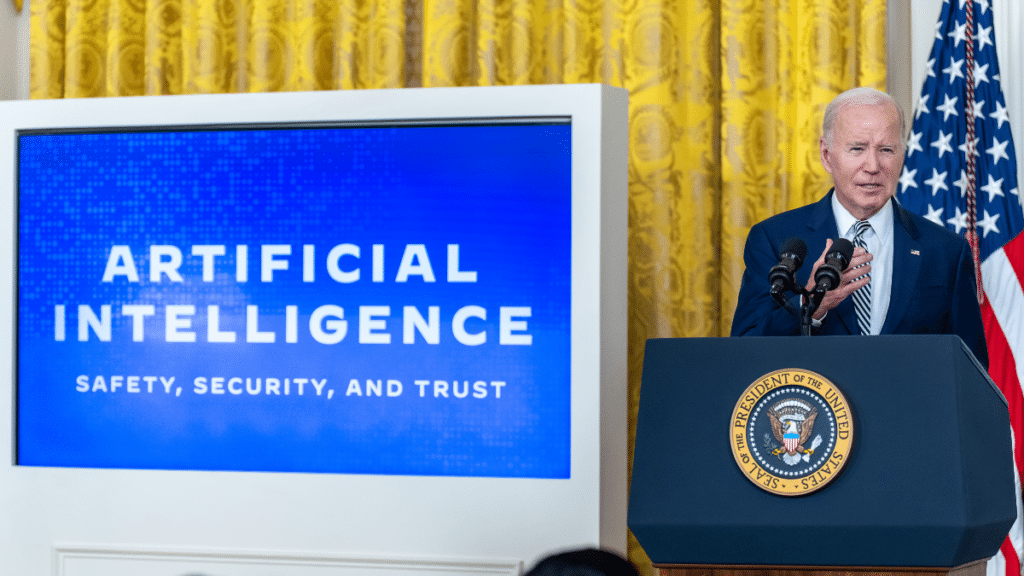

On Oct. 30, the Biden administration issued the “Executive Order on the Safe, Secure, and Trustworthy Development and Use of Artificial Intelligence.” The document is ambitious, covering a broad range of risks that AI poses – including the production of biological, chemical, and nuclear weapons, risks to cybersecurity, the generation of deepfakes, and privacy violations.

But while the Executive Order directs nearly the entire alphabet of federal agencies – from Agriculture to Veterans Affairs – to engage in various new activities, it can be read primarily as a national security order, giving important new responsibilities to the Secretaries of Homeland Security, Defense, State, and the Director of National Intelligence.

But it is the Department of Homeland Security (DHS) that is perhaps given the most extensive to-do list. The Executive Order gives DHS a wide-ranging portfolio of responsibilities related to AI, in addition to a variety of activities it is asked to collaborate on with other agencies:

- The DHS Secretary is tasked with leading “an assessment of potential risks related to the use of AI in critical infrastructure sectors involved, including ways in which deploying AI may make critical infrastructure systems more vulnerable to critical failures, physical attacks, and cyber attacks,” and is asked to translate the AI Risk Management Framework developed by the National Institute of Standards and Technology (NIST) into “relevant safety and security guidelines for use by critical infrastructure owners and operators.”

- The DHS Secretary will establish a new “Artificial Intelligence Safety and Security Board” to provide insights aimed at “improving security, resilience, and incident response related to AI usage in critical infrastructure.”

- With the Secretary of Defense, the DHS Secretary is directed to establish a pilot project to use AI systems “such as large language models” to identify and remediate vulnerabilities in critical infrastructure.

- DHS will be responsible for addressing risks at the intersection of AI and chemical, biological, radiological, and nuclear weapons. The DHS Secretary is tasked with preparing a report for the President on such risks to the homeland, working with other agencies.

- DHS must “develop a framework to conduct structured evaluation and stress testing of nucleic acid synthesis procurement screening,” and report on how to strengthen it. The document indicates the administration is particularly concerned with vulnerabilities in the “nucleic acid synthesis industry.”

- The Secretary of Homeland Security is asked to “clarify and modernize immigration pathways for experts in AI,” and to work with the Secretary of State to use “discretionary authorities to support and attract foreign nationals with special skills in AI and other critical and emerging technologies” to work in the United States. With the Department of Defense, DHS is asked to “enhance the use of appropriate authorities for the retention of certain noncitizens” deemed to be “of vital importance to national security.”

- DHS is directed to assist developers in combating intellectual property (IP) risks, including the investigation of IP theft and development of resources to mitigate it.

- With the Attorney General, DHS is asked to develop recommendations for law enforcement agencies to “recruit, hire, train, promote, and retain highly qualified and service-oriented officers and staff with relevant technical knowledge.”

- Working with other agencies, the Secretary of Homeland Security is given the job of leading coordination with “international allies and partners to enhance cooperation to prevent, respond to, and recover from potential critical infrastructure disruptions resulting from incorporation of AI into critical infrastructure systems or malicious use of AI.” The Secretary is also asked to “submit a report to the President on priority actions to mitigate cross-border risks to critical United States infrastructure.”

“The President has asked the Department of Homeland Security to play a critical role in ensuring that AI is used safely and securely,” said DHS Sec. Alejandro Mayorkas in a statement.

DHS has indicated it intends to be an “early and aggressive” adopter of AI technology, and already has an extensive inventory of ways AI is being used across the department. This fall, a task force appointed by Mayorkas developed new DHS policies on the acquisition and use of AI and machine learning technologies, as well as the Department’s use of facial recognition. Mayorkas noted he has already appointed “the Department’s first Chief AI Officer,” and the agency vaunted examples of its use of AI to identify suspicious vehicles that may be trafficking fentanyl, combat online child sexual abuse, and assess damage following natural disasters.

The Biden administration’s Executive Order on AI follows other administration initiatives, including the release of the Blueprint for an AI Bill of Rights, the National Institute of Standards and Technology (NIST) AI Risk Management Framework, and various actions and warnings from the Federal Trade Commission and Justice Department. But with no imminent legislation likely to pass Congress, despite numerous AI hearings and forums on Capitol Hill, it is still safe to say the United States has adopted a generally laissez-faire approach to AI regulation. It is in that broader context that DHS will pursue its new portfolio.

This broad mandate may be cause for concern, since DHS’ record on the use of technology and handling of data is hardly unblemished. A new Brennan Center report considers its use of automated systems for immigration and customs enforcement, finding a lack of transparency that is in tension with the principles set out in the White House Blueprint for an AI Bill of Rights. Earlier this year, reports emerged about an app using facial recognition deployed by DHS that failed for Black users. And there remain substantial concerns about the department’s surveillance activities. Perhaps the Department will have to improve its own record before it can reliably lead others on AI governance and safety.
