Beyond Content: Why Monetization Governance Is The Next Frontier Of Tech Policy

Victoire Rio / Apr 28, 2025

Victoire Rio is the founder and executive director of WHAT TO FIX.

On March 28, I woke up to terrible news: Myanmar had just been hit by a devastating earthquake.

I’ve spent the better part of the last decade supporting tech accountability efforts in Myanmar, and this news hit me hard. Sitting thousands of miles away, I turned to social media for updates. Any updates. I needed visuals to help me process and empathize with my friends, both inside and outside the country, who were desperately trying to reach their loved ones.

As I ran searches and scrolled through social media feeds, it wasn’t long before I came across images of rubble and photos of trapped individuals. But something was off. Some images were clearly from past disasters, in places like Turkey or Syria. Others were more perplexing, combining typical Burmese features and architecture in ways that didn’t quite make sense. One photo superimposed historical temples typically found on a plain in central Myanmar onto an urban setting. On a second look, it was clearly AI-generated.

Ultimately, this deluge of inauthentic content was no accident. There’s a clear explanation for it: money.

When disaster strikes, searches for related content on social media spike. That boost in traffic is a gold mine for financially motivated actors, who use social media platforms’ revenue redistribution programs to convert views and engagement into money.

These actors don’t need to be on the ground or even have any connection to the affected country. They can rely on search and generative AI, hopping from event to event to chase engagement. It’s a trend I’ve observed and documented time and again. But the worst part is that it’s entirely preventable.

Revenue redistribution programs, sometimes referred to as “revenue sharing programs” or “bonuses,” are a mainstay of social media monetization offerings, now available across all major social media platforms. They are also rapidly expanding, as competition for creators intensifies. Take Facebook, for example, which has grown its partner-publisher membership to close to 3.8 million over the last few years. Over one million new accounts appear to have been added since the beginning of March, according to figures drawn from Facebook’s partner-publisher disclosures.

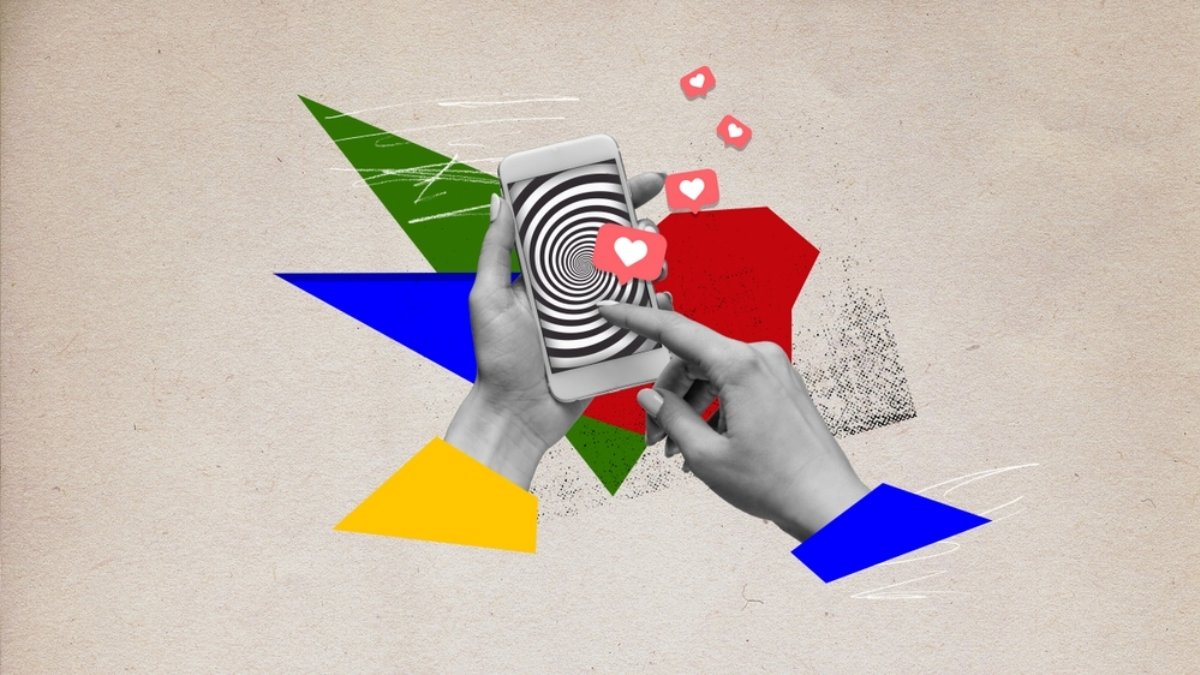

The revenue redistribution model is simple: the more views and engagement generated, the more money the platform pays.

Source: WHAT TO FIX archive of Meta partner-publisher disclosures.

From content governance to monetization governance

In the tech policy community, we have spent much of the last decade discussing content governance. We’ve grappled with how to improve content moderation, and more recently, with how to prevent the amplification of harmful content through recommender systems and paid advertising.

What I’m asking the field to consider is a tech policy issue we aren’t discussing: what my colleagues and I call “monetization governance.” More specifically, I want you to consider how social media platforms preside over the redistribution of enormous sums of money, and how their monetization decisions ultimately shape the information environment.

My organization, WHAT TO FIX, estimates that social media platforms redistributed upwards of $20 billion of their revenue to over 6 million accounts last year. That’s in addition to facilitating the flow of tens of billions of dollars through other monetization features, such as audience subscription services, tips, and branded content partnerships.

And yet, we have no idea who the platforms paid, how much they paid, or for what content.

Why should you care about monetization governance?

Let’s get one thing straight: I’m not arguing against platforms redistributing revenue. Whether we like it or not, we live in a world where social media platforms overwhelmingly mediate content consumption. And if we are to sustain the production of quality content, we can’t let platforms squeeze out all of the revenue. We need to ensure that media outlets and content creators who put in the work to produce authentic content are compensated fairly. If anything, we need platforms to redistribute more, not less, revenue.

My concern is with leaving decisions over revenue redistribution to social media platforms, without transparency or oversight.

And it’s not just inauthentic content that spikes in the wake of natural disasters or other high-engagement events, such as elections. The consequences of this underexamined and poorly regulated aspect of social media operations are diverse and far-reaching.

By financially rewarding actors involved with inauthentic content distribution, platforms undermine content quality, encourage copyright violations, and worsen AI’s environmental footprint. They also provide these actors with the finances to further expand, internationalize, and develop ways to automate their activities.

In the most egregious cases, we’ve seen platforms onboard well-known disinformation actors, political entities, and even sanctioned entities into their revenue redistribution programs. The fact that platforms are paying these actors is a concern for society, but also for advertisers, whose brands can be damaged by association.

Creators and independent media, meanwhile, find it increasingly hard not only to get their content noticed but also to secure financial compensation. In competition with financially driven, poor-quality content, they’re not just getting a reduced share of revenue. Their revenue is also at the mercy of platform monetization decisions, most of which are automated. When platforms get it wrong, creators and independent media can lose their income overnight, with limited recourse and no compensation for lost revenue.

Concerns over the inadequacy of social media monetization decisions are not new. YouTube made headlines in 2017 for financing extremist actors. Data scientists at Facebook pointed to monetization as a key driver of misinformation and foreign abuse as far back as 2019.

Monetization governance is where concerns over societal harms, media and creator viability, and brand safety meet. At the center of it all are platform monetization systems: monetization terms, policies, and their review and enforcement processes.

Why are monetization systems overlooked in existing efforts to regulate social media?

The EU and the UK have both adopted a risk management approach to platform regulation, through the Digital Services Act (DSA) and the Online Safety Act respectively. Yet neither law squarely addresses platform monetization systems as a vector of risk.

Why is that?

In part, I think it is due to the staggering lack of transparency surrounding monetization programs, including their design, functioning, and use. Go to any of the platforms’ dedicated transparency centers, and you will be hard-pressed to find a reference to monetization terms, policies, or any detail on their approach to monetization due diligence and enforcement. You’re equally unlikely to find any information on the scale or effectiveness of that enforcement.

Another potential reason is that, among the many stakeholders impacted by social media monetization, it’s advertisers who have been the most active and successful in framing their concerns, pulling the policy focus towards brand safety and advertising regulation.

But advertisers and their brands are not the only ones being impacted here. Publishers and creators are faced with income unpredictability, fueled by the theft of their content and recurring AI-powered demonetization. And ultimately, society bears the bulk of the impact when harmful and illegal content and activities continue to be incentivized – and subsidized – by platforms.

What must be done?

We need to start by treating monetization systems as a separate policy issue, connected to but distinct from content moderation systems, recommender systems, and advertising systems.

Monetization systems are central to platforms’ operations. They are the systems that power the business relationships between platforms and content publishers. These relationships – and the systems that support them – must be scrutinized. They must be accounted for when assessing systemic risks and developing risk mitigation measures. And they must be subject to far greater overall transparency.

At WHAT TO FIX, we’ve spent much of the last 18 months mapping monetization services and investigating the systems that power them. We’ve worked to consolidate what is known, as well as the known unknowns. We’ve tracked down monetization terms and policies, as well as all available disclosures on monetization approaches and monetized accounts. We’ve also framed recommendations for assessing the impact of monetization systems on systemic risks. And we have kicked off a multi-stakeholder conversation about what a principled approach to monetization governance should entail, complete with recommendations on desirable transparency and stakeholder accountability.

For all our efforts, the picture is still very incomplete. And that’s very much by design. Platforms know all too well that we can’t exercise oversight – or even dare demand it – without case studies. And they are not volunteering information that could help investigate their monetization practices.

The challenge ahead is significant, but the cost of inaction is even greater. Monetization has become a fixture of all major social media platforms and is expanding rapidly. We have to start establishing robust frameworks for monetization governance.
