Senate Hearing on AI and the Future of Journalism

Gabby Miller / Jan 11, 2024

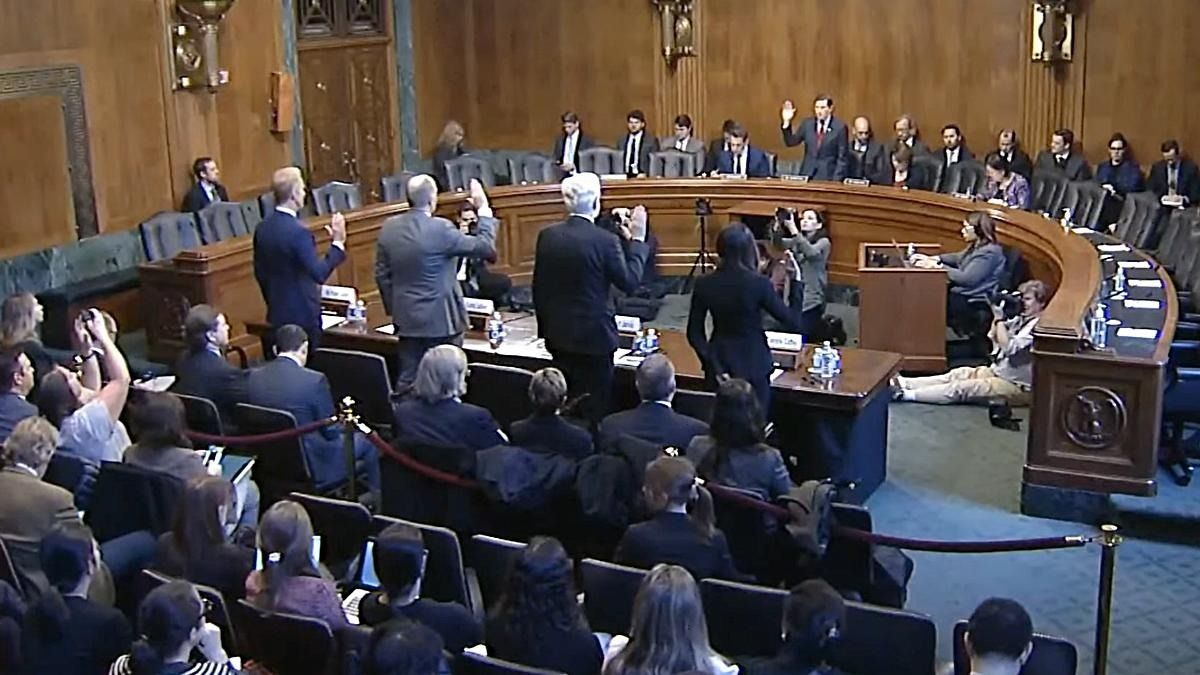

Witnesses being sworn in at a hearing on AI and the future of journalism, hosted by the Senate Judiciary Subcommittee on Privacy, Technology, and the Law.

The journalism crisis in local news, and how the rise of generative artificial intelligence may exacerbate it, prompted a hearing in the US Senate on Wednesday dedicated to identifying risks and ways to mitigate them. Witnesses called for Congress to clarify whether ‘fair use’ applies to news under copyright law as AI companies use journalistic works to train their large language models, and debated legislative solutions, like the Journalism Competition and Preservation Act (JCPA), a news media bargaining code-style bill that would allow publishers to negotiate with major technology companies for use of news on their platforms, among other issues.

The hearing, titled Oversight of Artificial Intelligence (AI): Future of Journalism, was hosted by the Senate Judiciary Subcommittee on Privacy, Technology, and the Law. Committee Chairman Sen. Richard Blumenthal (D-CT) and Ranking Member Sen. Joshua Hawley (R-MO) opened the floor with a vision for the hearing that also included topics like antitrust law and the applicability of Section 230 of the Communications Decency Act to generative AI.

The hearing comes in the wake of a copyright infringement lawsuit filed by the New York Times against Microsoft and OpenAI last month, which alleges that millions of the news company’s articles were unlawfully used to train the general-purpose language models that power chatbots such as ChatGPT. Much of the case turns on the thorny legal questions surrounding fair use, a major theme of the hearing.

Senators Blumenthal and Hawley, in their opening statements, pointed to their No Section 230 Immunity for AI Act, a bill proposed last June and ultimately blocked by Sen. Ted Cruz (R-TX) in December, that limits liability protections for online service providers for content that involves the use of generative AI.

Sen. Amy Klobuchar (D-MN) similarly drew attention to her AI-related bill introduced last May called the REAL Political Advertisements Act, which would require content creators to disclose if AI was used to generate videos or images in a political ad. And, as one of the sponsors of the JCPA, Sen. Klobuchar added that she’s “hopeful” that the bill will be considered as a path forward in resolving lingering questions addressed in the hearing. Different versions of the JCPA have been introduced in both the House and the Senate, with one even passing overwhelmingly out of the Subcommittee on Privacy, Technology, and the Law in 2022, before dying on the floor. Sen. Klobuchar indicated another version may be introduced this year.

Witnesses in attendance included:

- Danielle Coffey, President and CEO of News Media Alliance (written statement)

- Jeff Jarvis, Tow Professor of Journalism Innovation at CUNY Graduate School of Journalism (written statement)

- Curtis LeGeyt, President and CEO of the National Association of Broadcasters (written statement)

- Roger Lynch, CEO of Condé Nast (written statement)

The below transcript of the hearing has been lightly edited for clarity.

Sen. Richard Blumenthal (D-CT):

Welcome everyone. I'm pleased to convene the subcommittee and welcome our witnesses. Welcome everyone who's come to hear, and of course, my colleagues from both sides of the aisle for a hearing that is critical to our democracy. It's critical to the future of journalism in the United States because local reporting is the lifeblood of our democracy and local reporting by newspapers and broadcast stations is in existential crisis. It is in fact a perfect storm, the result of increasing cost, declining revenue and exploding disinformation. And a lot of the cause of this perfect storm is in fact technologies like artificial intelligence, not new, not original for me to observe it, but it is literally eating away at the lifeblood of our democracy, which as we all know is essential to local jobs, local accountability, local awareness and knowledge. Everything from obituaries to the planning and zoning commissions, and you can't get it anywhere else.

National news you can buy by the yard or by the word, but local reporting is truly the result of sweat and tears and sometimes even blood of local reporters. What we're seeing, and it's a stark fact about American journalism in an existential crisis, is the decline and potential death of local reporting as a result of that perfect storm. Local papers are closing at a staggering rate. A third of our newspapers have been lost in the last two decades. I don't need to tell anyone here that some of the oldest newspapers in the country, like the Hartford Courant, have closed their newsrooms and it is a national tragedy, a painful and traumatic time for reporters, editors and their industry and a deep danger for our democracy. Hedge funds are buying those papers, not for the news they can communicate, but often for the real estate that they own. They are publishing weekly instead of daily, and they are buying out reporting staffs, sometimes firing them. Millions of Americans now live in a news desert where there's no local paper, and that's especially true for rural populations and communities of color. So there's an equity aspect to this challenge as well. For any of us on this panel, we know the importance of local news, but so should people who live in those communities because just as local police and fire services are the first responders, local reporters are often the first informers, and that information is no less important to them than fire and police service in the long term.

The rise of big tech has been directly responsible for the decline in local news, and it is largely the cause of that perfect storm accelerating, expanding the destruction of local reporting. First, Meta, Google, and OpenAI are using the hard work of newspapers and authors to train their AI models without compensation or credit. Adding insult to injury, those models are then used to compete with newspapers and broadcast, cannibalizing readership and revenue from the journalistic institutions that generate the content in the first place. As the New York Times' recent lawsuit against OpenAI and Microsoft shows, those AI models will even essentially plagiarize articles and directly permit readers to evade paywalls to access protected content free of charge. I realize that those claims and allegations are yet to be contended or concluded in court, but they are certainly more than plausible. Second, the models may also misidentify or misattribute statements about media outlet content or endorsement of a product, and the result is more rampant misinformation and disinformation online and damage to the brand and credibility of those media outlets. And there are legitimate fears that AI will directly replace journalists. The experiments we've seen with fake reporters are a breach of trust. It's never a substitute for local reporters in local newsrooms, broadcasters, journalists who reflect their community and talk to their neighbors. So our purpose here must be to determine how we can ensure that reporters and readers reap the benefits of AI and avoid the pitfalls. That's been one of the themes of our hearings. And another has been that we need to move more quickly than we did on social media and learn from our mistakes in the delay there. Once again, we need to learn from the mistakes of that failure to oversee social media and adopt standards. And I think there are some that maybe we can form a consensus around just as Senator Hawley and I have on the framework that we've proposed.

First on licensing, guaranteeing that newspapers and broadcasters are given credit financially and publicly for reporting and other content they provide. Second on an AI framework, such as we've proposed requiring transparency about the limits and use of AI models, including disclosures when copyrighted material is used as provided for in our framework now, but we may need to expand on it and make it more explicit. Third on Section 230, clarifying that Section 230 does not apply to AI. Again, as Senator Hawley and I have proposed in our legislation to create the right incentives for companies to develop trustworthy products. And finally, not really finally, but fourth, updating our antitrust laws to stop big tech's monopolistic business practices in advertising that undercut newspapers. And I want to thank my colleague, Senator Klobuchar and Senator Lee for their work on legislation that I've joined as a co-sponsor. We're fortunate to have an extraordinary panel today. I want to thank all of you for being here and now turn to the ranking member for his comments.

Sen. Joshua Hawley (R-MO):

Thank you very much, Mr. Chairman. Thank you for convening this hearing, the first hearing of the year, but the latest in a series of hearings that Senator Blumenthal and I have been able to host together and Senator Blumenthal has just done tremendous, tremendous work chairing this committee. And I've started to notice a pattern, and this is the fifth or sixth hearing I think we've had, and I've noticed now a pretty decided pattern. And it goes something like this. You have on the one hand all of the AI cheerleaders who say that AI is going to be wonderful, AI is going to be life changing, it's going to be world changing, it is going to be the best thing that has ever happened to the human race, or something to that effect. And then you have a group of people who have concerns and they say, well, I don't know. I mean, what's AI going to do to jobs? What's AI going to mean for my privacy? What's AI going to mean for my kids? And here's the pattern I've noticed over a year, almost everybody who takes the AI cheerleading stance is here in this building. And then when you leave the confines of this building and the lobbyists who inhabit it, when you go out and actually talk to real people working real jobs, you find the second set of concerns.

I have yet to talk to a Missourian who is an enthusiastic, no-holds-barred cheerleader for AI, not one. There probably is someone somewhere, but they haven't talked to me yet. What I hear over and over and over from workaday people who are working their job, raising their kids, trying to just keep it going, what they say over and over is, I don't know about this. I don't know what this is going to mean for my kids online. I don't know what this is going to mean for my job in the future. I don't know. I have concerns. And they also usually say, I sure really wish that Congress would do something about this. And that leads me to the second observation, the second pattern that I have noticed in this last year, and that is while there's a lot of talk about the need for Congress to act, we're beginning to slip into a familiar pattern whereby the biggest companies who increasingly control this technology, just like they've controlled social media, don't want us to act and are willing to expend any amount of resources, money, time, effort, and influence to make sure we don't. Senator Blumenthal mentioned our bipartisan bill that would just be a very modest bill, if I may say, that would just clarify that AI generated tools are not entitled to the Section 230 protections. Very modest.

Do you know when we had AI executives sitting right here in this room and we asked them directly, do you think Section 230 covers your model in your industry? They said, no. So Senator Blumenthal and I wrote it up, said, well, good, good. This is consensus. Let's pass this. I went to the floor to try to pass this bill in December and immediately was blocked and objected to. The same story we've been hearing for years from the technology companies. It's always, theoretically, we should put safeguards in place for real people, but when you come to do it, oh no, no, no, no, no, no, we can't. That's too soon. It's too much. It's too quick. And what it really means is it would interfere with our profits. That's what they really mean. We cannot allow that pattern to continue with AI. And the final thing I noticed from the hearings and the information we've gathered is this: AI is supposed to be new, but it really is contributing to what is now a familiar, familiar story, which is the monopolization in this country of information, of data, of large swaths of our economy. AI is increasingly controlled by two, three, four of the biggest companies, not just in this country, the biggest companies in the world. And apropos of the subject of today's hearing, I think we have to ask ourselves, do we want all the news and information in this nation to be controlled by two or three companies?

I certainly don't. I certainly don't. So I think we've got to ask ourselves, what are we going to do practically to make sure that normal people, whether they are journalists, whether they're bloggers, or whether it's just the working mom at home, what they can do to protect their work product, their information, their data, how are we going to make sure they are able to keep control of it, how they're able to vindicate their rights because they do have rights and they should have rights, and it shouldn't be that just because the biggest companies in the world want to gobble up your data, they should be able to do it. And you know what? Too bad, we're all just supposed to live with it. So I think we've got a tall task in front of us. I salute Senator Blumenthal again for holding these hearings and I hope that we'll be able to drive towards clarity and then towards solutions because that's what the American people deserve. Thank you, Mr. Chairman.

Sen. Richard Blumenthal (D-CT):

Thanks, Senator Hawley. I'm going to turn to Senator Klobuchar, who's been a leader in this area. As you know, she's the chairman of the subcommittee on antitrust of the Judiciary Committee.

Sen. Amy Klobuchar (D-MN):

Thank you very much, Senator Blumenthal, thank you for your work and outlining some solutions, which I appreciate, and Senator Hawley as well. We have the bill to ban deepfake political ads, which is getting growing support. I'm hopeful if there is a package on AI that that will be included. And of course, Senator Hirono is with us who's been there from day one on these issues. So this is personal for me. As many of you know, my dad was a journalist. He spent his career as a reporter, a columnist. He also had a local radio station, local TV show. When he retired in 1995, he had written 8,400 columns and 12 million words, and he did it all without AI, I will note. As a timely matter, he wrote 23 books including one called Will the Vikings Ever Win the Super Bowl, which is sadly still relevant after this season.

So local news, Senator Blumenthal really laid out the problems and what we've seen with the closure of newspapers, and we note this is how so many people, whether it is local radio and I keep adding local TV as well, this is where they get their disaster alerts. This is when they find out if there's floods in Missouri. This is how they find out what the fire cleanup is in Hawaii. This is how they find out if a blizzard is coming in Minnesota. It's also where they find out their local football scores. And if a business is opening and it is, yes, how finely city councils get reported on and what's covered. If this goes away, it literally frays at the connections in our democracies. So what's happened? Diagnosis: the closure because of ad revenue, not only because of that, but a lot because of that.

Google reported in October of last year more than $59 billion in advertising revenue in one quarter, a single three-month period. When you look at what's happened with newspapers' advertising revenue, from 2008 to 2020 it went down from $37 billion to $9 billion, and I am very concerned that these trends will only worsen with the rise of generative AI. So that is why in addition to the solutions that Senator Blumenthal, the top ones on licensing and the like that we need to look at, we can finally include some version I hope of the bill that Senator Kennedy and I have led for years, the Journalism Competition and Preservation Act. We know that just recently Google negotiated an agreement in Canada after a similar bill had passed in Canada, which is going to mean a major shift for local news there, which will mean $74 million annually to those news organizations.

We know that in Australia where a similar bill passed $140 million has shifted to the content providers, to the people who are actually doing the work, who are reporting the stories on the frontline. So to me, that is a market-based way by having negotiation of what the actual rates are. It is a market-based way to resolve this. It's why people like Senator Cruz support this bill. It is not some bill that is supported by one party or the other. It's been bipartisan from the very beginning. So I'm hopeful that that will be considered as one of our solutions to all of this. And of course, we're also very concerned, as was noted by Senator Blumenthal about these fake images of news anchors, people who are trusted in addition to the politicians, in addition to, it's not just movie stars, it's not just Tom Hanks and Taylor Swift, as cool as they are, it's also going to be the local news anchor that maybe people haven't heard of nationally, but is trusted in Rochester, Minnesota. So that's why I think this is such a timely hearing and I want to thank Senator Blumenthal for having it as well as Senator Hawley.

Sen. Richard Blumenthal (D-CT):

Thank you. Thanks, Senator Klobuchar. Just for the record, our local news anchors in Connecticut are pretty cool too, and you'll take that back to them, Mr. LeGeyt. Thank you. I want to introduce the witnesses, then we'll swear them in and ask for your opening statements. Danielle Coffey, welcome, is president and CEO of News Media Alliance, which represents more than 2,200 news and magazine media outlets worldwide serving the largest cities and smallest towns as well as national and international publications. She's a member of the board of directors at the National Press Club Journalism Institute. Jeff Jarvis is the Tow professor of journalism at CUNY's Newmark School of Journalism. He's the author of six books, including The Gutenberg Parenthesis: The Age of Print and Its Lessons for the Age of the Internet. And later this year, preview of coming attractions, The Web We Weave: Why We Must Reclaim the Internet from Moguls, Misanthropes and Moral Panic. He co-hosts two podcasts, This Week in Google and AI Inside. Curtis LeGeyt is president and chief executive officer of the National Association of Broadcasters. That organization is the premier advocacy association for America's broadcasters. It advances radio and television interests in legislative, regulatory and public affairs. Roger Lynch is the CEO of Condé Nast, the global media company that creates and distributes content through its iconic brands, including the New Yorker, Vanity Fair, Vogue, Wired, GQ, Bon Appetit. Prior to his current position at Condé Nast, he served as CEO of the music streaming service, Pandora, and also was the founding CEO of Sling TV. If you would please rise, I will administer the oath. Do you swear that the testimony that you will give to this committee is the truth, the whole truth, and nothing but the truth, so help you God? I do. Thank you, Ms. Coffey. If you would begin please.

Danielle Coffey:

Thank you Chairman Blumenthal, Ranking Member Hawley and members of the subcommittee. Thank you for inviting me to testify here today on AI and the future of journalism. My name is Danielle Coffey and I'm president and CEO of the News Media Alliance representing 2,200 news publications, magazines, digital only outlets, some publications that started before the Constitutional Convention such as the Hartford Courant. My members produce quality journalistic and creative content that seeks to inform, educate, and connect with readers and enrich their daily lives. We cover natural disasters, conflict zones, school boards, city halls, town halls, entertainment and the arts and matters of public interest. We invest a significant amount of financial and human capital to serve our readers across the country. Our publications adhere to principles and processes that support verification, accuracy and fidelity to facts. We abide by standards and codes of conduct and provide readers a voice through correction policies to ensure accuracy in reporting.

Unfortunately, the same accountability is not seen across the rest of the internet. Without proper safeguards, we cannot rely on a common set of facts that promote healthy public discourse. Without quality reporting, we cannot have an informed electorate and functional society. Generative artificial intelligence (GAI) could create an even greater risk to the information ecosystem with inaccuracies and hallucinations. If not curbed with quality content produced by news publications, this risk will become worse as the economic decline of our industry accelerates. Our revenue has been cut in half over the last 10 years. Demand for our content has grown the same amount over the same period of time in the opposite direction. This lack of return is because dominant distributors of our news content are scraping publications' websites and selling portions for engagement and personal information to target users with advertising. Over the last several years, there have been countless studies, investigations, and litigation by the DOJ and the FTC in the past two administrations that have found anti-competitive conduct by the monopoly distributors of news content.

This proves the direct nexus between anti-competitive conduct and the steep decline of financial revenue return to news publications, leading to a dramatic shortfall of the revenue that's needed to invest in news gathering and hiring journalists. We are deeply appreciative of Senators Klobuchar and Kennedy for their relentless leadership on the Journalism Competition and Preservation Act, which addresses this marketplace imbalance, and we thank this committee for overwhelmingly passing the legislation last July. This marketplace imbalance will only be increased by GAI. An analysis that the N/MA commissioned, as well as complaints pending before the courts, shows evidence of copyright-protected expressive works being taken from behind paywalls without authorization and used repeatedly in modeling, training, processing, and display. In addition to massive amounts of content used in training, GAI output results to users' inquiries often contain summaries, excerpts, and even full verbatim copies of articles written and fact-checked by human journalists.

These outputs compete in the same market with the same audience serving the same purpose as the original articles that feed the algorithms in the first place. Because these uses go far beyond the guardrails set by the courts, this is not considered fair use under current copyright law. To be clear, news publishers are not opposed to AI. We want to help developers realize their potential in a responsible way. N/MA's members are by and large willing to come to the table and discuss licensing terms and licensing solutions to facilitate reliable, updated access to trustworthy and authoritative content. A constructive solution will benefit all interested parties and society at large and avoid protracted uncertainty in the courts. Some GAI developers are good actors and seek licensing agreements with news publications, and we applaud their efforts. In addition to encouraging such licensing practices, there are other issues that Congress can address around transparency, copyright, accountability, and competition that we have submitted for the record.

The fourth estate has served a valuable role in this country for centuries, calling on governments and civic leaders to act responsibly in their positions of power. Local news especially has uncovered and reported on events around the country that keep readers informed, educated, and engaged in their communities. We simply cannot let the free press be disregarded at the expense of these new and exciting technologies. Both can exist in harmony and both can thrive. We must ensure that for the future of our country and the future of our society. Thank you for the opportunity to participate. I look forward to questions.

Sen. Richard Blumenthal (D-CT):

Thank you, Ms. Coffey. Professor Jarvis.

Jeff Jarvis:

Thank you so much for this opportunity and invitation. I've been a journalist for 50 years and a journalism professor for the last 18 of those. I'd like to begin with three lessons about the history of news and copyright that I've learned from researching my book, The Gutenberg Parenthesis. First, America's 1790 Copyright Act protected only maps, charts, and books. Newspapers were not covered by the statute until 1909. Second, the Post Office Act of 1792 allowed newspapers to exchange copies for free, enabling so-called scissors editors, an actual job title, to reprint articles, thus creating a network for news and with it a nation. To this day, journalists read, learn from and repurpose facts from each other. The question before us today is whether the machine has similar rights to read and learn. Third, a century ago when print media faced their first competitor, radio, newspapers were inhospitable, limiting broadcasters to two daily updates, excluding radio from congressional press galleries and even forbidding on-air commentators from discussing an event until 12 hours after the fact. They accused radio of stealing content and revenue.

They warned radio would endanger democracy as they have since to television, the internet and now AI. But today I prefer to focus on the good that might come from news collaborating with this new technology. First, a caveat. Large language models have no sense of fact and should not be used where facts matter, as some have learned the hard way. Still, AI presents many opportunities. For example, AI is excellent at translation. News organizations could use it to present their news internationally. AI is good at summarizing specific documents. That is what Google's NotebookLM does, helping writers and journalists organize their research. AI can analyze more text than any one reporter. I advised one editor to have citizens record, say, 100 school board meetings so the technology could transcribe them and then answer questions about how many boards are discussing, say, banning books. I'm fascinated with the idea that AI could extend literacy, helping people who are intimidated by writing to tell and illustrate their own stories.

A task force of academics from the Modern Language Association concluded recently that AI could help students with wordplay, analyzing, writing, and overcoming writer's block. AI now enables anyone to write computer code. As a tech executive told me in an AI podcast that I co-host, the hottest programming language on planet earth right now is English. Finally, I see business opportunities for publishers to put large language models in front of their content to allow readers to enter into dialogue with that content. I just spoke with one entrepreneur I know who's working on a business like that, and this morning I talked to an executive at Schibsted, the very good Norwegian newspaper company, and they're working on something similar. All these opportunities and more are put at risk if we fence off the open internet. Common Crawl is a foundation that for 16 years has archived the web, 250 billion pages and 10 petabytes made available to scholars for free, yielding 10,000 research papers.

I'm disturbed to learn that the New York Times demanded that the entire history of its content, including that which had been available for free, be erased. Now, when I learned that my books were included in Books3, a data set used to train AI, I was delighted. I write not only to make money, but also to spread ideas. What happens to our information ecosystem and our democracy when all authoritative news retreats behind paywalls available only to privileged citizens and giant corporations that can afford to pay? I understand the economic plight of my industry. I've been an executive in this industry. I've run a center for entrepreneurial journalism. I understand the urgency, but instead of debating protectionist legislation sought by lobbyists for a struggling industry, I hope we also have a discussion about journalism's moral obligation to an informed democracy. And please let us rethink copyright for this age.

In my next book, The Web We Weave, I ask technologists, users, scholars, media, and governments to enter into covenants of mutual obligation for the future of the internet and by extension AI. So today I propose to you as a government to promise first to protect the rights of speech and assembly that have been made possible by the internet. Please base decisions that affect internet rights on rational proof of harms, not the media's moral panic. Do not splinter the internet along national borders, and encourage and enable new competition and openness rather than entrenching incumbent interests through regulatory capture. In sum, I seek a Hippocratic oath for the internet. First, do no harm.

Sen. Richard Blumenthal (D-CT):

Thanks, Professor. Mr. LeGeyt.

Curtis LeGeyt:

Good afternoon, Chairman Blumenthal, Ranking Member Hawley and members of the subcommittee. My name is Curtis LeGeyt. I'm the president and CEO of the National Association of Broadcasters. I'm proud to testify today on behalf of our thousands of local television and radio station members who serve your constituents every day. Study after study shows that local broadcasters are the most trusted source of news and information. Our investigative reports received countless awards for exemplifying the importance and impact of journalism as a service to the community. This includes WTNH News 8 in New Haven, which received an Edward R. Murrow Award for its reporting on child sex trafficking in the state, and KMOX Radio and KMOV TV in St. Louis, which were also recently honored with Murrow Awards for their accurate and heartfelt reporting of a deadly school shooting. Stories like these are the antidote to the misinformation and disinformation that thrives online.

Broadcasters will build on this trust by embracing new AI tools that will help our journalists, particularly when it comes to delivering breaking news and emergency information. For example, one broadcaster is piloting a tool that will use AI to quickly cull through inbound tips from email and social media to produce recommendations that they can verify and turn into impactful stories. Other broadcasters are using AI to translate their stories into other languages to better serve diverse audiences. When AI can help these journalists, real people, perform their jobs in their communities, we welcome it. However, this subcommittee should be mindful of three significant risks that generative AI poses to broadcast newsrooms across the country. First, the use of broadcasters' news content in AI models without authorization diminishes our audience's trust and our reinvestment in local news. Broadcasters have already seen numerous examples where content created by our journalists has been ingested and regurgitated by AI bots with little or no attribution. For example, when a well-known AI platform was recently prompted to provide the latest news in Parkersburg, West Virginia, it generated outputs copied nearly word for word from WTAP TV's website. The station did not grant permission for that use, nor were they even made aware of it.

Not only are broadcasters losing out on that compensation, but this unauthorized usage risks undermining trust as stations lose control over how their content is used and whether it's integrated with other unverified information. All of the concerns that drive Senators Klobuchar and Kennedy's and this committee's work on the Journalism Competition and Preservation Act are exacerbated by the emergence of generative AI. Second, the use of AI to doctor, manipulate or misappropriate the likeness of trusted radio or television personalities risks spreading misinformation or even perpetuating fraud. One example: a recent video clip of a routine discussion between two broadcast TV anchors was manipulated to create a hateful racist anti-Semitic rant, and Univision's Jorge Ramos, one of the most respected figures in American journalism, has repeatedly been a victim of AI tools appropriating and manipulating his voice and image to advertise all kinds of unauthorized goods and services. We appreciate the attention of Senators Coons, Blackburn, Klobuchar, and Tillis to this growing and significant problem, which should be addressed in balance with the First Amendment.

Finally, the rising prevalence of deepfakes makes it increasingly burdensome for both our newsrooms and users to identify and distinguish legitimate copyrighted broadcast content from the unvetted and potentially inaccurate content being generated by AI. To give a recent illustration, following the recent October 7th terrorist attacks on Israel, fake photos and videos reached an unprecedented level on social media in a matter of minutes. Of the thousands of videos that one broadcast network sifted through to report on the attacks, only 10% of them were authentic and usable. As I document in my written testimony, broadcasters across the country have launched significant new initiatives to bolster the vetting process of the content that is aired on our stations. But these efforts are costly and the problem grows more complex by the day. In conclusion, America's broadcasters are extremely proud of the role we play in serving your constituents, and we are eager to embrace AI when it can be harnessed to enhance that critical role. However, as we have seen in the cautionary tale of big tech, exploitation of new technologies can undermine local news. This subcommittee is wise to keep a close eye on AI as well as the way our current laws are applied to it. Thank you again for inviting me to testify today. I look forward to your questions.

Sen. Richard Blumenthal (D-CT):

Thank you, Mr. LeGeyt. Mr. Lynch.

Roger Lynch:

Thank you, Chair Blumenthal, Ranking Member Hawley, and members of the subcommittee. Thank you very much for inviting me to participate today in the critical discussion about AI and the future of journalism. My name is Roger Lynch and I'm the CEO of Conde Nast. Conde Nast's iconic brands and publications include the New Yorker, Vogue, Vanity Fair, Wired, Architectural Digest, Conde Nast Traveler, GQ, Bon Appetit, and many more, including, I might add, Tatler, which was first published in the UK in 1709. Our mission is to produce culture-defining exceptional journalism and creative content, and this purpose is evident in our output, an output that only humans can make together with rigorous standards and fact-checking that can be produced. The result is stories that change society for the better, information that helps people make decisions in their daily lives, and content that creates an informed and empowered electorate. As an example, this is what the New Yorker has done for over a hundred years, from the publishing of John Hersey's 1946 account of the survivors of the atomic bombing of Hiroshima, to the serialized chapters of Rachel Carson's Silent Spring, which helped launch the modern environmental movement in 1962, to, more recently, Patrick Radden Keefe's exposes on the Sackler family's ruthless marketing of OxyContin, the drug that profited billions from people's addictions. I have long experience leading companies through times of great technological change. You could say it's in my DNA. And generative AI is certainly bringing about such change and already demonstrating tremendous potential to make the world a better place. But gen AI cannot replace journalism. Journalism is fundamentally a human pursuit, and it plays an essential and irreplaceable role in our society and our democracy. It takes reporters with grit, integrity, ambition, and human creativity to develop the stories that allow free markets, free speech and freedom itself to thrive.
In each business that I've led, successful new businesses were built on a foundation of licensing content rights. Licensing allowed distributors to work together with content creators to innovate new and better consumer experiences and generate profits that were invested in creating more great content.

I'm here today because congressional intervention is needed to make clear that gen AI companies must also take licenses to utilize publisher content for use with gen AI. Unfortunately, currently deployed gen AI tools have been built with stolen goods. Gen AI companies copy and display our content without permission or compensation in order to build massive commercial businesses that directly compete with us. Big tech companies argue that this is fair use, that their machines are just learning from reading our content just as humans learn, and that no licenses are required for that. This is a false analogy. Gen AI models do not learn like humans do. They maintain complete copies of the works. They train on our content and output its substance while keeping a hundred percent of the value for themselves and training consumers to come to them for information, not to us, depriving us of essential customer relationships. Fair use is designed to allow criticism, parody, scholarship, research and news reporting.

The law is clear that it is not fair use when there is an adverse effect on the market for the copyrighted material. It is that market that creates the incentive to invest and innovate in content. And in that way, the market supports journalism and innovation in the long run. Fair use is not intended to simply enrich technology companies that prefer not to pay. Moreover, gen AI technology enables misinformation on an unprecedented scale. In the wrong hands, gen AI can generate outputs that are customized for individuals, making misinformation in all forms, fake photographs, audio, video and documents, look real. Widely available gen AI tools hallucinate and generate misstatements that are sometimes attributed to real publications like ours, damaging our brands. Americans can't possibly spend all of their time determining what's true and what's false. Instead, they may stop trusting any source of information, with devastating consequences.

Luckily, there is a path forward that's good policy, it's good business, and it's already the law: licensed and compensated use of publisher content for both training and output. This will ensure a sustainable ecosystem in which high-quality content continues to be produced by brands consumers trust. This will also ensure that information comes from many sources without big tech companies as gatekeepers. Licensing will support a sustainable future. In conclusion, we urge Congress to take immediate action to clarify that the use of publisher content for both gen AI training and output must be licensed and compensated. The time to act is now and the stakes are nothing short of the continued viability of journalism. Thank you very much for your work on these important issues.

Sen. Richard Blumenthal (D-CT):

Thank you very much, Mr. Lynch. And let me begin where you ended to say that what we're proposing really is to clarify. The argument could be made, I think, that the law now requires this kind of licensing, but I think you would agree with me that the costs of enforcing those rights through litigation, the financial costs, the delay, the costs and time, make it often an impractical or less effective remedy than is needed and deserved. Is that the thrust of your testimony?

Roger Lynch:

Yes. Yes, yes, Senator. If the big AI companies had agreed with that position, we would be able to negotiate arrangements with them. Unfortunately, they do not agree with that. They do not agree that they should have to be paying for this, and they believe that it is covered under fair use and hence the lawsuits that you've already seen.

Sen. Richard Blumenthal (D-CT):

In a blog post, I believe from OpenAI, reported very recently, within the last 24 hours or so, the reference was made in fact to ongoing negotiations preceding the New York Times lawsuit, and possibly other negotiations are ongoing, but in the long term, those kinds of individual ad hoc agreements may be unavailing to the smaller newspapers or broadcast stations that don't have the resources of a New York Times. Correct?

Roger Lynch:

Certainly that would be a major concern, is that the amount of time it would take to litigate, appeal, go back to the courts, appeal, maybe ultimately make it to the Supreme Court to settle. Between now and then, there'd be many, many companies, media companies, that would go out of business.

Sen. Richard Blumenthal (D-CT):

One of the core principles of the framework that Senator Hawley and I have proposed is record keeping and transparency. Developers ought to be required to disclose essential information about the training data and safety of AI models to users and other companies. Ms. Coffey, you make the point that this is important for newspapers. The AI companies have been intensely secretive about where they get their data, but we know based on even the limited transparency that's available to us, that they use copyright material and it's the bedrock for many of their models. For example, Meta and other companies use a database called Books3, which is a collection of nearly 200,000 pirated books. That's not public information, that's theft in my view. I'm a former prosecutor, so I don't use that term lightly, but it seems to me that's the right term for it. So part of the problem is that AI companies are using this hard work, not just the sweat and tears and sometimes blood, but also the financial investment that is made to produce news. It doesn't appear magically, it's not out of thin air. It's an investment and the kind of lack of transparency makes it harder for you to protect your rights. Is that correct?

Danielle Coffey:

That is correct. And we found the same thing with the news media. It's 5 of the top 10 sites that are used by C4, which is the curated, more responsible dataset that trained the models of Common Crawl. We found that 5 of the top 10 were news media sites because it is quality, it is reliable, it is vetted and fact-based. And then we would agree that licensing would be the best marketplace solution so that we can avoid protracted litigation. And in order to do that, we need to know how they're using our content. So a searchable database would be something that we would recommend. There are other options and ways to do that, and I know that there's legislation that we're supportive of that would identify how our content is being used because any sort of tagging that we do, any authentication, we work with Adobe and others, but it's stripped whenever they take our content and it doesn't follow the content through to the output. So we don't know how our content is actually being used and therefore we can't enforce and it's difficult to negotiate if we don't have that information. There's different versions that might work, but we would be very supportive of that so that we can enforce our rights.

Sen. Richard Blumenthal (D-CT):

I would be very interested in more specifics from all the witnesses. We may not have time today to explore all the technical details, but obviously those details are important. I just want to emphasize the point that I think really all of you have made, which is that there's great promise, there are tremendous potential benefits to using AI. For example, in investigative reporting, to go through court files or documents, discovery, depositions, all kinds of stuff that can be more effectively and efficiently explored and summarized by AI. But we also need to be mindful of the perils. We're going to have five-minute rounds of questions, and I'll turn to the ranking member, Senator Hawley, now.

Sen. Joshua Hawley (R-MO):

Thanks again, Mr. Chairman. Thanks to all the witnesses for being here. Mr. Lynch, if I could just come back to you. I was struck by your comment that the problem with generative AI as it exists currently, it's been built with stolen goods. Let's just talk a little bit about the sort of content licensing framework that you would favor. Can you give us sort of a sketch of what that would look like, something that protects existing copyright law, something that you think would be workable? I'm not asking you to write the statute for us, but just maybe give us a thumbnail overview.

Roger Lynch:

I think quite simply, if Congress could clarify that the use of our content and other publisher content for training and output of AI models is not fair use, then the free market will take care of the rest, just like it has in the music industry where I worked, and film and television, sports rights. It can enable private negotiations. And in the music industry where you have, you think about millions of artists, millions of ultimate consumers consuming that content. There have been models that have been set up, ASCAP, BMI, SESAC, GMR, these collective rights organizations, to simplify the licensing of that content. And the nice thing about that is it doesn't take a company the size of Conde Nast or the New York Times to create these licensing deals. These organizations can do it for all content producers. So fundamentally, we think a simple fix or clarification that use of content for training and output of AI models is not fair use. The market will take care of the rest.

Sen. Joshua Hawley (R-MO):

I have to say that seems eminently sensible to me, and it leads me to ask the question, why shouldn't we expand that regime outward? I mean, why shouldn't we say that anybody whose data is ingested and then regurgitated by generative AI, whether that's their name, their image, their likeness, why shouldn't they be able also to have a right to compensation? I mean, our copyright laws are quite broad. I think justly so. So why shouldn't-- I'm very sympathetic to this argument for journalists and content creators for whom content creation is a career, but there are lots of other folks for whom it's not a career, but they create things, they have work products, they post things online. Why shouldn't they also be protected? It seems to me that they should. I mean, it seems to me that every American ought to have some rights here in their data and in their content.

And this sort of regime you've described, I mean, these ordinary Americans are already protected by copyright law. It seems that we should find a way to enforce that. And the way that you've described in terms of clarifying fair use seems to me to be a potential path forward. Mr. LeGeyt, let me shift gears and ask you about something that you said. It's in your written testimony and you mentioned it too just a moment ago, about this video clip, one of many unfortunately, with the broadcast TV anchors that was manipulated. And we've seen this is proliferating. The New York Times did a report just a couple of days ago about similar manipulation of images. In this case, not news reporters. This was just what the Times reported on, I think, a doctor: online trolls took screenshots of a doctor from an online feed of her testimony she was giving at a parole board hearing and edited the images with AI tools, in that case to make them sexually explicit, completely fake.

This is the classic deepfake, but obviously extremely harmful, extremely invasive, and then posted them online. I mean, used these images, created these images, and then in this case, put them on 4chan. So my question to you is, shouldn't there be some sort of federal limit, federal ban on deepfake images, certainly of a sexually explicit nature? But shouldn't every American have the right to think, with appropriate First Amendment exceptions for parody, but shouldn't we have the right to think that, listen, you're not just going to-- if you put a picture on Instagram of your child, it's not going to be scraped up by somebody, some company or some individual using company software, to generate a sexually explicit image? Or in the case of these news anchors, to put literally words in their mouth?

Curtis LeGeyt:

You are absolutely right to prioritize this issue. For local broadcasters, all our local personalities have is the trust of their audiences. And the second that that is undermined by disinformation and these technologies, these deepfakes are going to put all of that on steroids. It is just more noise. And I think we have seen the steady decline in our public discourse as a result of the fact that the public can't separate fact from fiction. So absolutely, this is an area that demands your attention, this committee's attention. There are certainly some elements of this, in the expressive context, that the committee needs to be mindful of. Certainly we've aligned ourselves with the Motion Picture Association and, in the creative context, some of the potential carve-outs that they have flagged. But this is an issue that is existential for local news. It's existential for our democracy. And so we absolutely support this committee's attention to it.

Sen. Joshua Hawley (R-MO):

And I would just say in closing, Mr. Chairman, to your point, Mr. LeGeyt, the reason that the public can't separate fact from fiction is in the case of these images, I mean, they look like they're the real thing. I mean, they're indistinguishable. I mean, it's not a matter of the public. They're not paying attention. They are paying attention. The problem is that they look absolutely real. And whether you're a broadcaster or a doctor or a mom or whatever, I just think the idea that at any moment you live in the fear that some image of me out there could be manipulated and turned into something completely else and ruin my reputation. And what's your alternative? Go file a legal suit and fight that out? And I mean, most people don't have money for that. This just seems to me, Mr. Chairman, like a situation we've got to address quickly. Thank you.

Sen. Richard Blumenthal (D-CT):

Thank you, Senator Hawley. Senator Klobuchar.

Sen. Amy Klobuchar (D-MN):

I agree with what Senator Hawley just said. Why don't we start with you, Ms. Coffey. So numbers I have, Pew Research Center found that we lost about 40,000 newsroom jobs between 2008 and 2020. Why are these newspapers shutting down? Is it because we are so perfect that you have nothing to cover, or perhaps there's a lot to cover, but there's not enough reporters? Could you answer why they're shutting down?

Danielle Coffey:

So you're right to recognize that there is plenty to cover and the value in the audience is exponentially increasing every year. Like I said, it's the opposite trajectory when it comes to the revenue that returns to us, because we have two dominant intermediaries who sit in between us and our readers. 70% of our traffic is relied upon. So we have no choice but to acquiesce to their terms of letting them crawl, scrape, and place our content within their walled gardens, where users are ingesting, right now, images, snippets, featured snippets, and now soon AI, which will make them just never leave the platform, because it's by design personalized to get personal information to then target users with advertising, using our content to engage those users in the first place. And so if there's no return to those who create the original content that's distributed by the monopoly dominant platforms, then we're just not going to be able to pay journalists who create the quality in the first place.

Sen. Amy Klobuchar (D-MN):

Exactly. Mr. Lynch, do you have any choice over whether you are able to decide whether your content is used to train AI models, and do you have a choice about whether to let them scrape your content or not?

Roger Lynch:

It's a somewhat complicated issue. The answer is they've already used it. The models are already trained. And so where you hear some of the AI companies say that they are creating or allow opt-outs, it's great. They've already trained their models. The only thing the opt-outs will do is to prevent a new competitor from training new models to compete with them. So the opt-out of the training is too late, frankly. The other side of the equation is the output. So remember, the models need to be trained, then they need access to content, current content, to respond to queries. So in the case of search companies, if you opt out of the output, you have to opt out of all of their search. Search is the lifeblood of digital publishers. Most digital publishers, half or more of their traffic originates from a search engine. If you cut off your search engine, you cut off your business. So there's not a way to opt out of the output side of it, letting it have access to your content for the outputs, retrieval-augmented generation, without opting out of search.

Sen. Amy Klobuchar (D-MN):

So you have a dominant search engine like 90% with Google, and so then you pay fees, right, to get this content to get to you. Is that right? You said in your testimony, Ms. Coffey, you explained that when users click through to news sites, big tech gets fees for web traffic. Is that right? Yes. The ad tech, that's correct. For the ad tech, right? But then if you want to opt out of the AI model, you'll be opting out of what is for many news organizations now, the monopoly model that you're forced to use is the only way to get to your site.

Roger Lynch:

Correct. You’d opt out of search.

Sen. Amy Klobuchar (D-MN):

Got it down. Okay. So last question. In addition to the licensing issues my colleagues have raised and ways we can clarify that is the bill that Senator Kennedy and I have. And Mr. Lynch, in your testimony, you explained that Australia's news media bargaining code has been successful in leading to negotiations between tech platforms and news organizations. And people have to understand these are trillion dollar companies and sometimes you'll have a tiny newspaper for a town of a thousand people and they won't even return their calls. And can you talk about how the success of the new laws in Australia and actually in Canada have supported journalism and led to the hiring of additional journalists?

Roger Lynch:

Yes. There's been reporting out of Australia that the $140 million that has flowed back to news organizations and publishers there has resulted in hiring or in rehiring of journalists. So the first thing we have to do, as you heard from Ms. Coffey earlier about the decline, what was the cause of the decline in the number of reporters. In the last year alone, 8,000 journalists have lost their jobs in the US, Canada, and the UK. So the first thing is to stop that decline, because that decline, I would argue, undermines democracy and undermines the fourth estate. And the way to do that is to ensure there's compensation for the use of this content. There's adequate, I think, evidence now from what's happened in Australia and now in Canada that the big tech companies didn't fall over. Their business models continued on, and now journalism is starting to flourish again in these markets.

Sen. Amy Klobuchar (D-MN):

Just Ms. Coffey, anything you want to add to that, then I'm done.

Danielle Coffey:

We can send something. It's back on the labor index. It shows that journalists' jobs spiked afterwards and have plateaued and then have steadily risen.

Sen. Amy Klobuchar (D-MN):

Okay. Thank you.

Sen. Joshua Hawley (R-MO):

Thank you, Senator Klobuchar. Senator Blackburn.

Sen. Marsha Blackburn (R-TN):

Thank you. Thank you to each of you for being here because this is an issue that needs our attention. And Professor Jarvis, we've had Mr. Lynch discussing fair use. I will have to say, dealing with musicians and creators in Tennessee, I have often referred to fair use as a fairly useful way to steal their content, and that's what it turns out to be so many times. And I actually argued for a narrowed application of fair use in the amicus brief that I wrote for the Supreme Court in the Warhol versus Goldsmith case. I think that's important. I think the court made the right decision here. And you've said that you agree with OpenAI's models using materials, training AI models on publicly available material. So let's look at this from the commercial side. So do you not believe that content creators should be compensated when they're going to be competing commercially with the content that is generated from the AI models?

Jeff Jarvis:

Senator, thank you for the question. I am concerned about all the talk I hear about limiting fair use around this table because fair use is used every day by journalists. We ingest data, we ingest information, and we put it out in a different way. Newspapers complained about radio over the years for doing rip and read. They tried to stop it. They created the Biltmore Agreement to stop newspapers, to stop radio from being in news at all. In the end, democracy was better served because journalists could read each other and use each other's information. And I think if we limit that too much, we limit the freedom that comes. I'm also, if we talk about trying to license all content for all uses, we set precedents that may affect, in fact journalists, but also will affect small open source efforts to compete with the big tech companies.

Sen. Marsha Blackburn (R-TN):

So you would have a broader allowance for fair use instead of a narrowed use?

Jeff Jarvis:

Yes, and I think that it is fair use and it is transformative, but as Mr. Lynch also separated--

Sen. Marsha Blackburn (R-TN):

So then for Ms. Coffey, then what you're doing is saying there are content creators who would not be compensated, even though in the commercial applications that AI generated content is competing directly with the original creator of that content, that would be your position.

Jeff Jarvis:

Not to pick on the New York Times, but I can point you to many news organizations that resent when their stories are taken by the Times without credit or payment.

Sen. Marsha Blackburn (R-TN):

Let me move on. I want to talk about bias in AI algorithms. And I think we all know it's no secret the mainstream media tilts left and significantly to the left when giving Americans their news. And now we're seeing bias against conservatives in some of the AI tools and training. And there are a couple of examples that we really need to put on the record. Now, if you go to ChatGPT and you say, I want to write a poem admiring former President Trump, what ChatGPT says is, "I'm sorry, but I am not able to create a poem admiring President Trump." If you turn around and next you say, I want to write a poem, ask for a poem, admiring Joe Biden, here's what you get. And I quote ChatGPT, "Joe Biden, leader of the land with a steady hand and heart of a man. You took the helm in troubled times with the message of unity, your words of hope and empathy, providing comfort to the nation." And it goes on and on. And here's a screenshot that I have for the record. So my question to you would be, is this type of bias acceptable in these training models, machine learning for AI?

Jeff Jarvis:

First, it's really bad poetry. So I think maybe, perhaps President Trump is lucky not to have been so memorialized. I think that if we try to get to a point of legislating fake versus not fake, true versus false, we end up in a very dangerous territory and similarly around bias, what all of these models do is reflect the biases of society. So I'll take you, as you say, that the media are generally liberal and thus what they ingest is going to be that way.

Sen. Marsha Blackburn (R-TN):

I think it reflects the bias that is coming from what they're ingesting. My time has expired. Thank you all.

Sen. Joshua Hawley (R-MO):

Thank you. Senator Hirono.

Sen. Mazie Hirono (D-HI):

Thank you very much. As we wrestle with what kind of regulation or parameters we should put on the training of AI, it just seems to make sense that the creators of the content should get some kind of compensation for the use of their material in training the AI platforms. So this is for Mr. LeGeyt. Do you not already have a lot of expertise, experience in figuring out what would be an appropriate way to license copyrighted material and the use of this kind of content, and how would that be applied in the case of AI?

Curtis LeGeyt:

Senator, thank you for the question. Over the last three decades, local television broadcasters have literally done thousands of deals with cable and satellite systems across the country for the distribution of their programming. The notion that the tech industry is saying that it is too complicated to license from such a diverse array of content owners just doesn't stand up. We negotiate with some of the largest cable systems in the country. We negotiate with small mom and pop cable systems. And the result of that is that instead of just doing national deals with our networks, our broadcast networks or news organizations in New York and LA, every local community in the country is served by a locally focused broadcast station, and that station's programming is carried on the local cable and satellite system. So we have a lot of experience here. Similarly, on the radio side, there are licensing organizations, Mr. Lynch referred to this in his testimony, that allow for collective licensing of songwriter rights, performing rights. All of this our industry has been in the center of, both as a licensor and licensee, and it has been tremendously beneficial.

Sen. Mazie Hirono (D-HI):

Do we need to enact legislation for this kind of licensing procedures to be put together and implemented?

Curtis LeGeyt:

I think it is premature. Some of the discussion around legislation thus far in the hearing has been about reaffirming the application of current law. If we have clarity that current law applies to generative AI, let the marketplace work, and we have every reason to believe the marketplace will work. But I think it is incumbent on this subcommittee to pay careful attention to these court decisions and also the way that the tech industry is treating the current law in the interim. Because as we said, if it's an arms race of who can spend the most on litigation, we know that the tech industry beats out everyone else.

Sen. Mazie Hirono (D-HI):

This is also for Ms. Coffey. So do you think, for both of you, do you think that current law, the application of current law, should be left and determined by what cases, lawsuits, that have been filed? How do we clarify that current law applies in the AI situation?

Danielle Coffey:

So we submitted a lot of recommendations for the record that have to do with transparency, that have to do with accountability, supporting legislation that's already pending that have to do with competition, going to previous references to the search and tying to AI. When it comes to licensing, there is already a healthy ecosystem, as my colleagues have said, and there's also already a market when it comes to newspapers, because we have archives that have existed over hundreds of years that we've spent a lot of money to digitize so that you can find the history. As far as the current existing market and the current law, I would encourage these licensing agreements and arrangements between AI developers and news publishers so that we can avoid protracted litigation. That's not good for either industry.

Sen. Mazie Hirono (D-HI):

But for that to happen, do you agree that we don't need to enact legislation? We should wait to see how things turn out a little bit longer before we take steps that may end up with some unintended consequences.

Danielle Coffey:

There are things that Congress can do at this time around copyright law to ensure responsibility, but as far as what's pending in the courts at this time, we do believe we have the law behind us and we have the protection in the current law.

Curtis LeGeyt:

These technologies should be licensing our content. If they are not, Congress should act, but under current law, they should be doing it.

Sen. Mazie Hirono (D-HI):

Thank you. Thank you, Mr. Chairman.

Sen. Richard Blumenthal (D-CT):

Thank you, Senator Hirono. As you may have gathered, we're kind of playing tag here. We have a vote going on. We have another one after it. There's also a conference of Republican senators that will be ongoing. I think it's at 3:30. So in case Senator Hawley leaves or I leave or others leave, you'll know why. Senator Padilla?

Sen. Alex Padilla (D-CA):

Thank you Mr. Chairman. Generative AI tools reduce the cost and time needed for content creation. I think we've all recognized that, you've established that, and sadly, it includes the production of misinformation. Now, these models cannot be relied upon to provide a hundred percent accurate information, obviously, because they can generate and often do generate false information. So I believe all the panelists affirm both of the statements that I just made. We're trying to predict how then in such an environment, consumer behaviors will shift and its impact on the future of the news industry. One prediction is that in a sea of unhelpful or false information, consumers will gravitate more directly towards trusted news sources like the outlets represented by the witnesses today as opposed to away from them. Ms. Coffey and Mr. LeGeyt, in both of your testimonies, you expressed concern that consumers would actually lose faith generally in the validity of all information they come across, including from sources you represent. Can you expand upon why? And we'll start with Ms. Coffey.

Danielle Coffey:

Thank you, Senator. We've seen there have been reports in the EU when they passed early laws around TDM, which is an early version of AI, where there was an impact assessment done and it showed how the competing product does not allow for the return of revenue to the original content creator. So you stay in these walled gardens and revenue is not returned to those who use it. There was also another study done by the ACCC in Australia that demonstrates the same, and also the House majority report shows that these walled gardens act as a competing marketplace for the same audience, the same eyeballs as our original content. And we find that 65% of users do not leave those walled gardens and click through, which is the only way, albeit taxed, we can monetize through advertising. So AI will only make the situation gravely worse because when you have summaries and when there's nothing left you need from the original article, this will become an existential threat to our industry and there's just no business model for us in that ecosystem.

Curtis LeGeyt:

Senator, we've focused a lot here on the need for fair compensation to support the local news model, but you're actually touching on a second and equally important point that underscores why our authorization should be needed before our content is used by these systems, which is the control we need to maintain over how our content is used, right? If we don't have control over the way our content is used, our trusted news content in these technologies, it can be confused with non-verified facts. It can be shaped in misleading ways. There's not always transparency as to what other information is being ingested into the generative AI technology. It's not always clear, the explainability element of this, of how the information is being used together. So all of that raises major concerns as to how we maintain control of how audiences see our content. And then the second piece of this is the deepfakes. The trust in local broadcast only goes as far as the trust in our local personalities. Your communities value their local broadcasters because they know these news anchors, they see them in the grocery store, they see them at town hall events. One deepfake can undermine all of that trust.

Sen. Alex Padilla (D-CA):

Thank you. And as a follow-up, Professor Jarvis, how do you predict consumer behaviors adapting to a gen AI environment?

Jeff Jarvis:

It's a good question, which is how professors buy time to find an answer. I don't know. I think that there'll be distrust of the output of these machines. We know that they are not reliable when it comes to fact. I covered the show cause hearing for the lawyer who famously used ChatGPT for citations and the problem wasn't the technology. The problem was the lawyer didn't do his job. And so in these cases, I think that brands and human beings, yes, absolutely will still matter. However, I also watch my own international students use these technologies to be able to code switch their English for US professors and to use these tools to do things that are beneficial for them. So I think that right now we're in an early stage where it's a parlor trick. Oh look, it can write a speech for you and that's funny and that's amusing, but it's not going to be terribly useful as long as these machines can't do facts and they cannot, they can do other things. So I think we'll find different applications that will come that will make these things more useful for consumers. Now it's a trick.

Sen. Alex Padilla (D-CA):

Thank you very much. Thank you Mr. Chair.

Sen. Richard Blumenthal (D-CT):

Thank you Senator Padilla. I have very little sympathy for the lawyer who relied on AI to do that legal research as one who spent literally countless hours in law libraries with red books that were called Shepard's. Senator Hawley may or may not have been through this experience, but the reason that I mentioned it is that the Chief Justice in his annual report noted that many lawyers who didn't use Shepard's and submitted briefs to the courts would sometimes say "The Lord is my Shepard," and you can't rely on the Lord or AI to do Shepard's of cases. The reliance here, and it goes to trust, is so important because whether it's a lawyer or a journalist or anyone, the fallibility of these systems is ever present. And I have some additional questions. We're going to have a second round. I'm going to call on Senator Hawley because he's going to have to leave and vote.

Sen. Joshua Hawley (R-MO):

Thank you Mr. Chairman. Just one follow-up on fair use since we were going back and forth on fair use. You're talking about limited readings or broader readings, but correct me if I'm wrong, my understanding is that currently the broadest reading possible is the one that these tech companies, AI companies are adopting, which is their view that none of your content should be compensable in any way, right? Mr. Lynch, have your properties received any form of licensing compensation from these generative AI?

Roger Lynch:

No, we have not. And that has been their position, although they negotiate with us, their starting point is we don't want to pay for content that we know that we should be able to get for free.

Sen. Joshua Hawley (R-MO):

Right? I mean anybody, Mr. LeGeyt, different for you?

Curtis LeGeyt:

I don't want to speak for every one of my members because I do think there are conversations taking place. It's also hard to paint with a broad brush here between the major tech companies, let alone the nascent entrants. But no doubt there are a substantial number of these companies taking that position.

Sen. Joshua Hawley (R-MO):

Yeah, I'm just concerned that this is going to be, if their reading, if the AI companies, which are really just the big tech companies, again, if their reading of fair use prevails, is that fair use is going to be the exception that swallowed the rule. It's the mouse that ate the elephant. I mean, we're not going to have any copyright law left. I mean it won't be a matter of, well, it's a little too broad. It won't exist. It just won't exist. That can't possibly be right. I mean to see an entire body of law just destroyed. I mean that can't possibly be right. Thank you, Mr. Chairman.

Sen. Richard Blumenthal (D-CT):

Thanks and thank you Senator Hawley for your input to this hearing. As Senator Hawley mentioned at the very start, we've held a number of hearings, not a single one of our participants, not a single witness has contended that AI, generative or otherwise, is covered by Section 230. And in fact, Sam Altman at our very first hearing acknowledged that it is not covered. Nonetheless, we have introduced the No Section 230 Immunity for AI Act just to make it explicit and clear. And there is a really kind of deeply offensive irony here, which is that all of you and your publications or your broadcast station can be sued. You can be sued for in effect falsity. And if it's with respect to a public figure like myself, maybe the standards are a little bit higher, but I have a right to go to court. Not so when it comes to social media, so that the organizations that use your content to train, or appropriate it, in effect misappropriate it, without crediting or paying for it, can't be sued. They can become breeding grounds for criminality, literally, and they claim total and complete immunity. So there is a double whammy here. It's a double kind of danger and damage that's being done because of Section 230 and because of their continued insistence on protection under Section 230.

Given that point, and I want to go back to Senator Hirono's question, some may argue, well, it's not necessary to change the law, so we shouldn't do anything. But I think we have an obligation both as to Section 230 and as to the licensing provisions to act to clarify the current law and make sure that people understand there has to be licensing. It's not only morally right, but it's legally required and Section 230 does not apply. So let me just open that question to you and perhaps go down the panel beginning with you Ms. Coffey.

Danielle Coffey:

We can't agree more with the hypocrisy, that there would be a contradiction. So it's good to hear that they're saying that they're the creator of the content, saying that in courts over copyright right now: we're creating this new expressive content, we're not taking your work, it's ours. So it would be contradictory to then be immune for hosting others' content if they're the ones creating it, which is why it's different from original Section 230 applications. It would lead to a healthier ecosystem here too, like you say, because they would outsource their liability to those who already carry their liability and they would license like we're hoping that they will. They would serve attributions because they would want to attach a credible brand to what they're serving, and then it would be good for users because it would be a healthy ecosystem. It would be a win-win-win. We would be very supportive of that accountability and legislation and also I believe lead to licensing that we're looking for as well.

Sen. Richard Blumenthal (D-CT):

Professor Jarvis.

Jeff Jarvis:

You may have found your first person who disagrees a bit because I think the question, it's very, very subtle. I think the question is who would be liable in these cases? If we go back to Gutenberg, at first, the person running the press was liable, the bookseller was liable, and finally the author became liable. In the case of that poor lawyer who used ChatGPT, was ChatGPT at fault? Was Microsoft at fault for presenting it in a way that may have seemed as if to present fact or was the lawyer at fault for asking the question? I can go to the machine and I can have it write a horrible poem as the senator said about someone, about anyone that's bad. Is that my fault or is that the machine's fault? And so I think that the question becomes, you're going to find newspapers and TV stations that are going to be using generative AI in all kinds of ways themselves. You're going to find their contributors are going to be using it. So I think it's very difficult to try to pin liability. I went to a World Economic Forum event on AI governance, and the argument there was that we shouldn't pin liability at the model level, but at the application level because that's where people will interact with it. Or I would also say at the user level, if I make it do something terrible, then I'm the one who should be liable. Gutenberg's printing press did not cause the Reformation, Luther did.

Sen. Richard Blumenthal (D-CT):

But if Meta or Google or OpenAI takes the New Yorker logo or the Hartford Courant's headline and in effect creates some kind of false content that is defamatory?

Jeff Jarvis:

Well, in that case, they are the creator and they are liable the same way that any publication would be. If Meta created the content on their own, then Meta is indeed liable. Section 230, as you well know, and I love Section 230, I think it's a great law. I think it protects the essence of the internet. Section 230 protects the public conversation that can occur because the platform where that conversation occurs is not liable for that conversation. So in this case, in your example, if Meta created something, then they're liable. However, if someone used a tool, whether that is Adobe or whether that is ChatGPT to create something and put it on Meta, I don't think Meta should be liable. The person who created it should be.

Sen. Richard Blumenthal (D-CT):

And I don't want to get too deeply into the legalities here, but if Meta has reason to know that that content which is generated by AI could be fallible, doesn't it have an obligation to check? Again, the irony here is that I've been a source for stories in the New Yorker, and as I mentioned to Mr. Lynch, I'm always amazed that I get a call from someone at the New Yorker a week or so later: "Here's the quote that you gave to our reporter. Is this correct?" In other words, they're fact checking before the story actually appears. Now then one of the social media companies could take artificially generated trash attributed to the New Yorker, put it up, and as Mr. LeGeyt and Mr. Lynch have observed, the trust and credibility of the publication itself is undermined by artificial intelligence and then Meta using that content publicly.

Jeff Jarvis:

It's a tool, it's a printing press, and in that sense, it can be misused by anyone. And if we think that we have to make the model liable for everything that could possibly happen with that model, then we're not going to have models.

Sen. Richard Blumenthal (D-CT):